Introduction to Deep Learning

Deep Learning courses are everywhere now that the tools have become widely used, yet it is still hard to find one that builds a solid foundation through a non-trivial, hands-on approach.

This is why we built this course: to teach deep learning thoroughly and effectively while still targeting engineers in the industry.

Overview

The course:

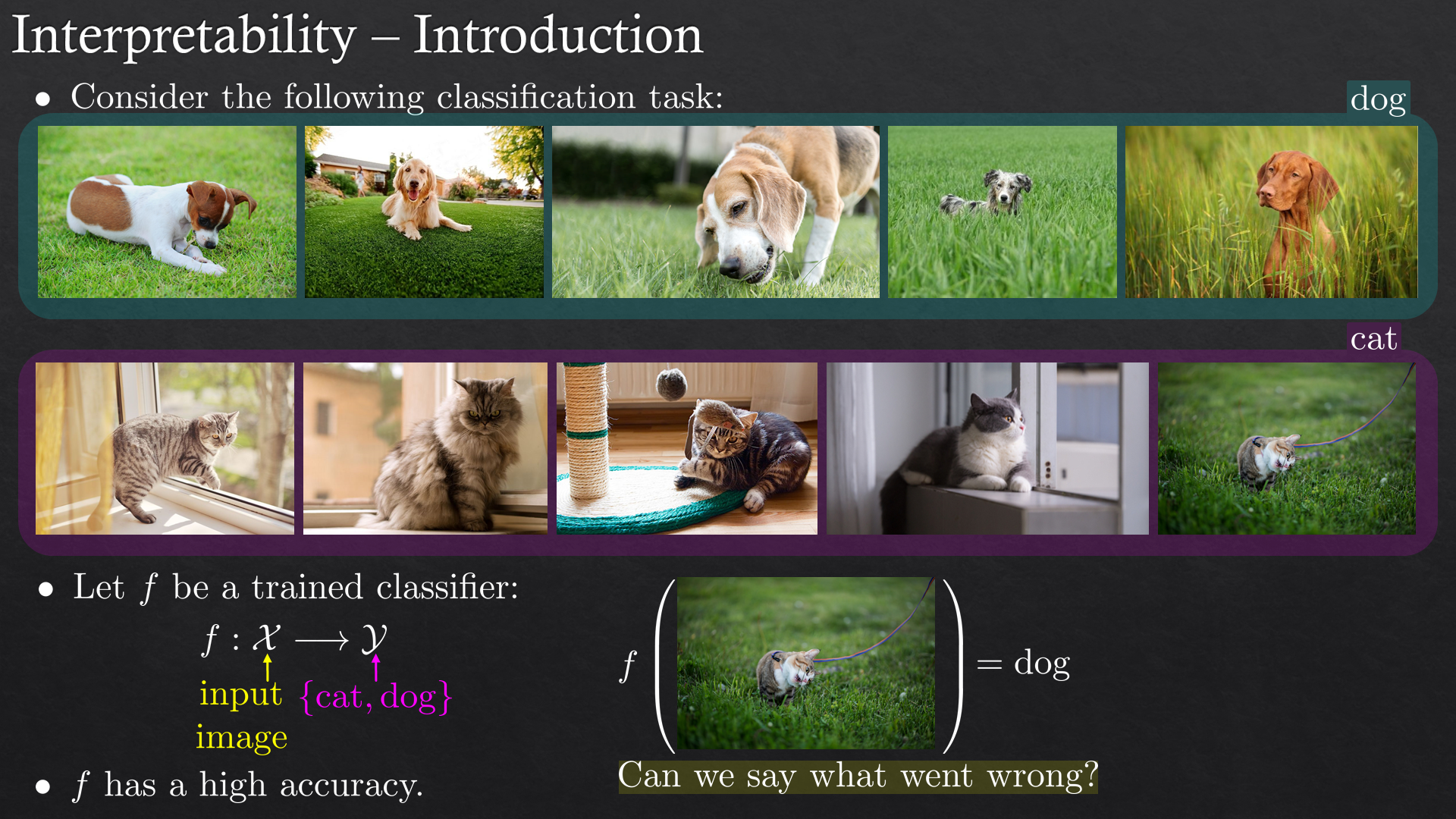

${\color{lime}\surd}$ Covers concepts in Deep Learning and their applications to data science tasks.

${\color{lime}\surd}$ Provides practical knowledge and tools for utilizing deep learning.

${\color{lime}\surd}$ Offers hands-on experience with an emphasis on real-world code and on intuition through interactive visualization.

${\color{lime}\surd}$ Targets software developers, system engineers and algorithm engineers who are after a first dive into the field.

Main Topics

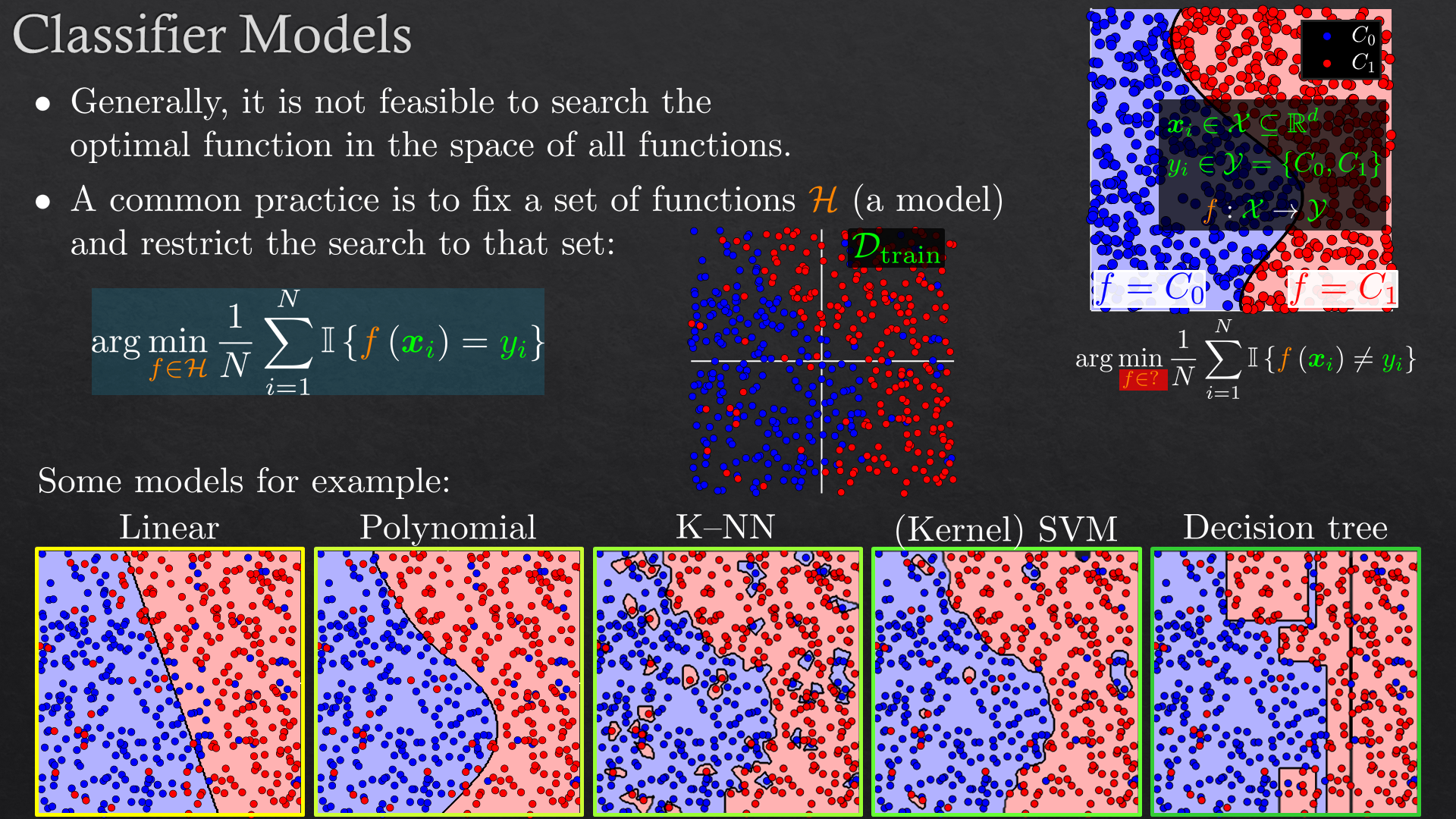

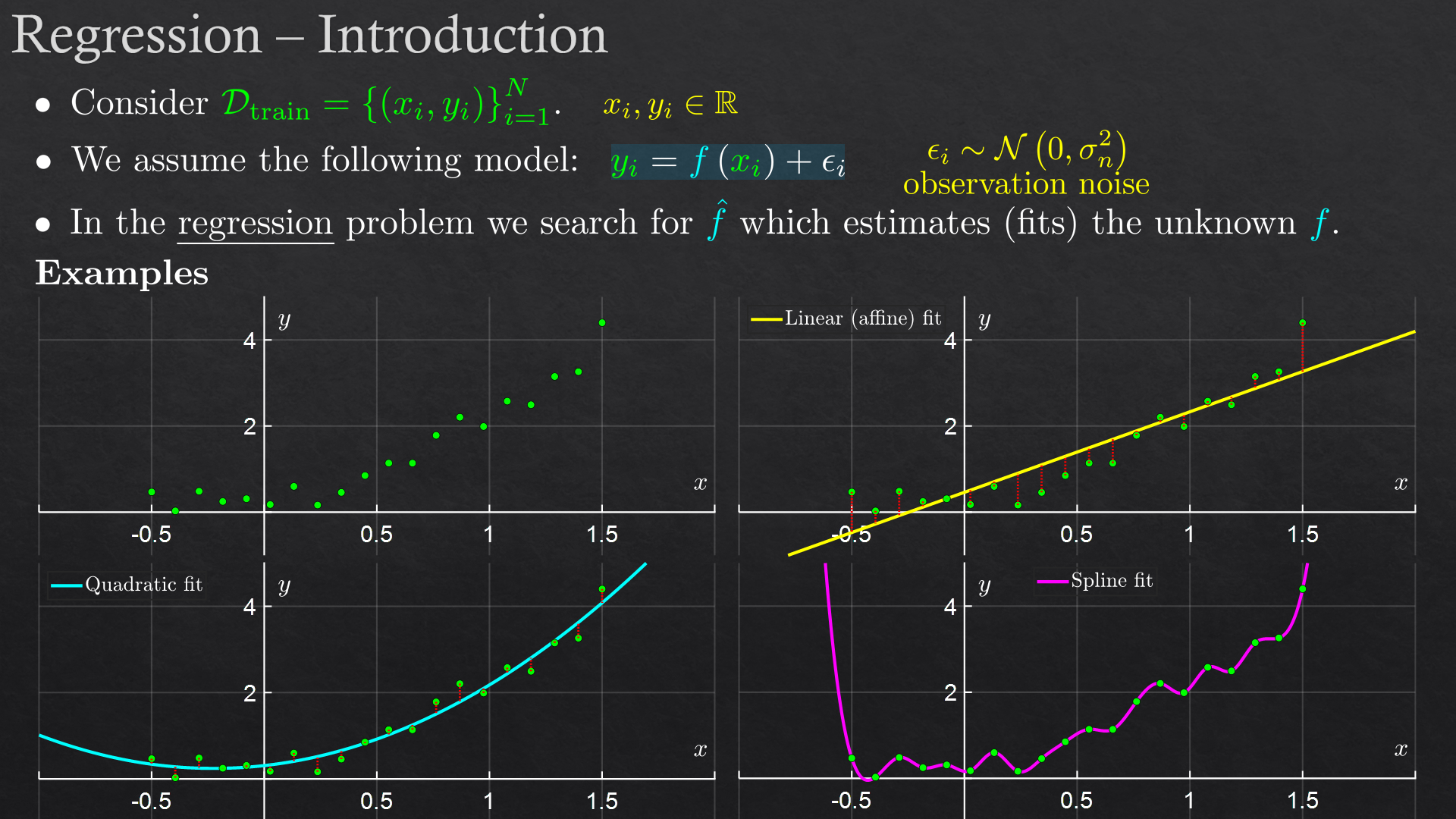

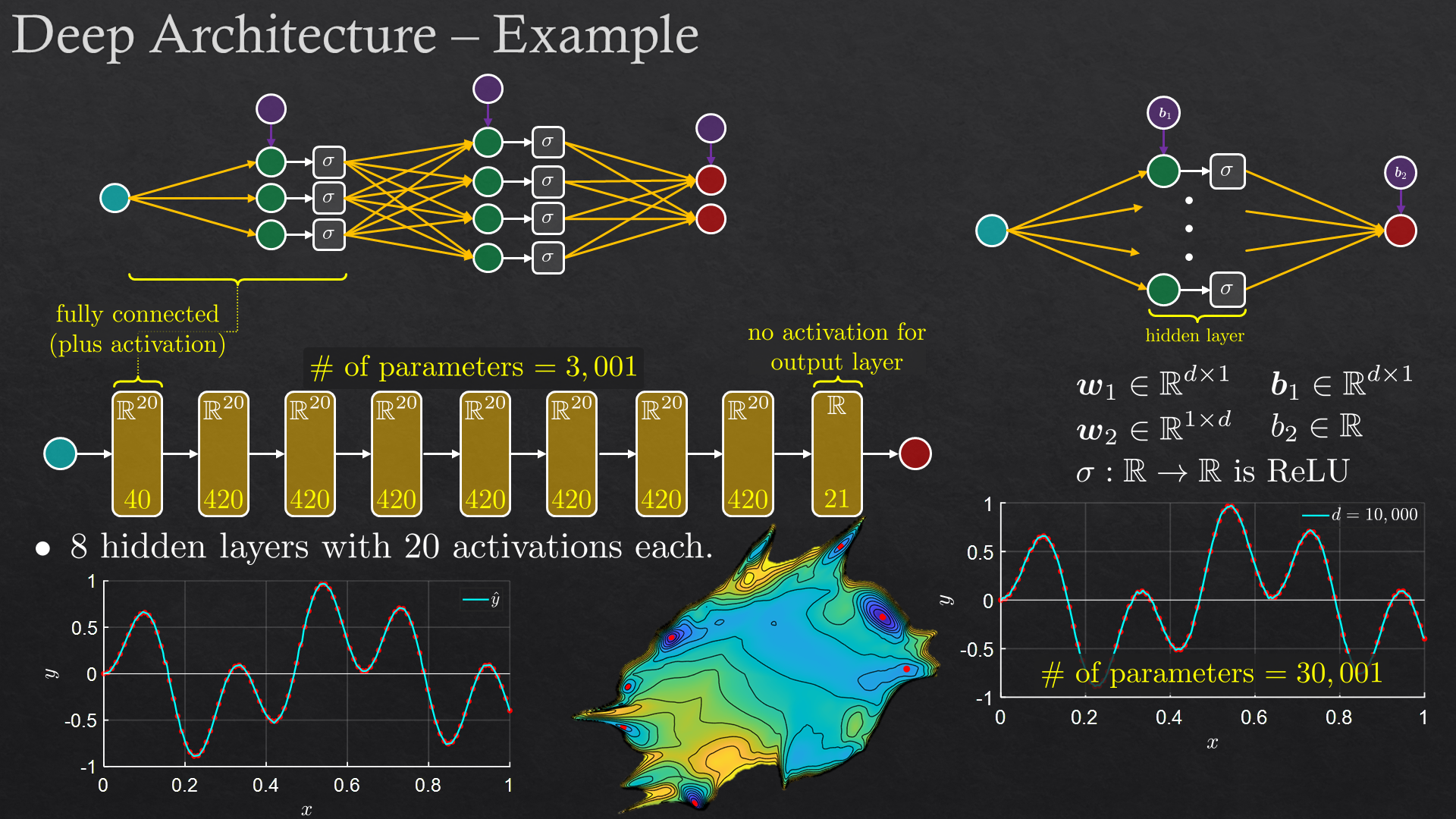

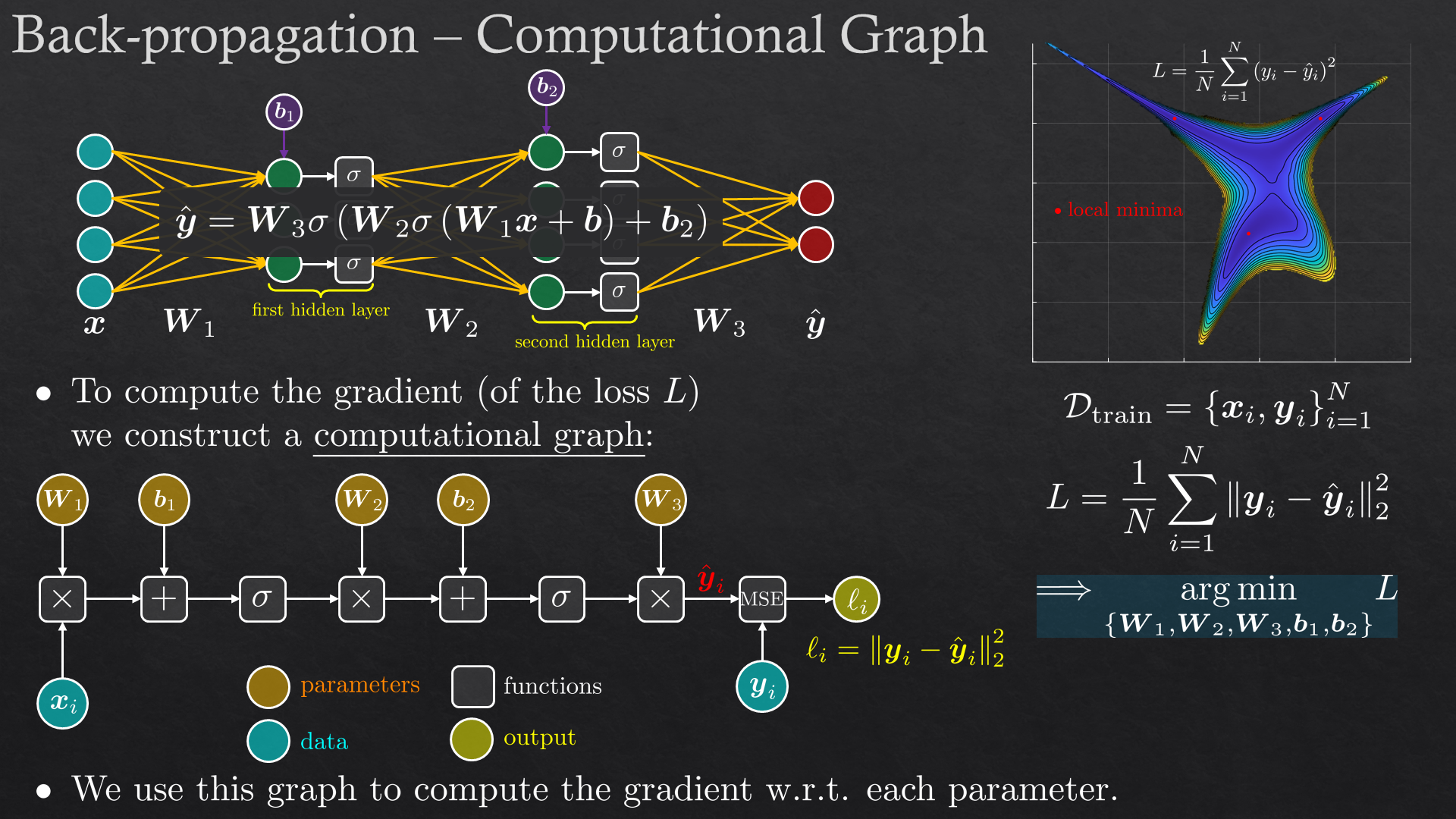

| Deep learning fundamentals | Perceptron, fully connected layers, activations, back-propagation |

| Training a network | Initialization, optimization, regularization |

| Convolutional neural networks | 1D and 2D convolution, layers, pre-trained architectures, transfer learning |

| Recurrent neural networks | |

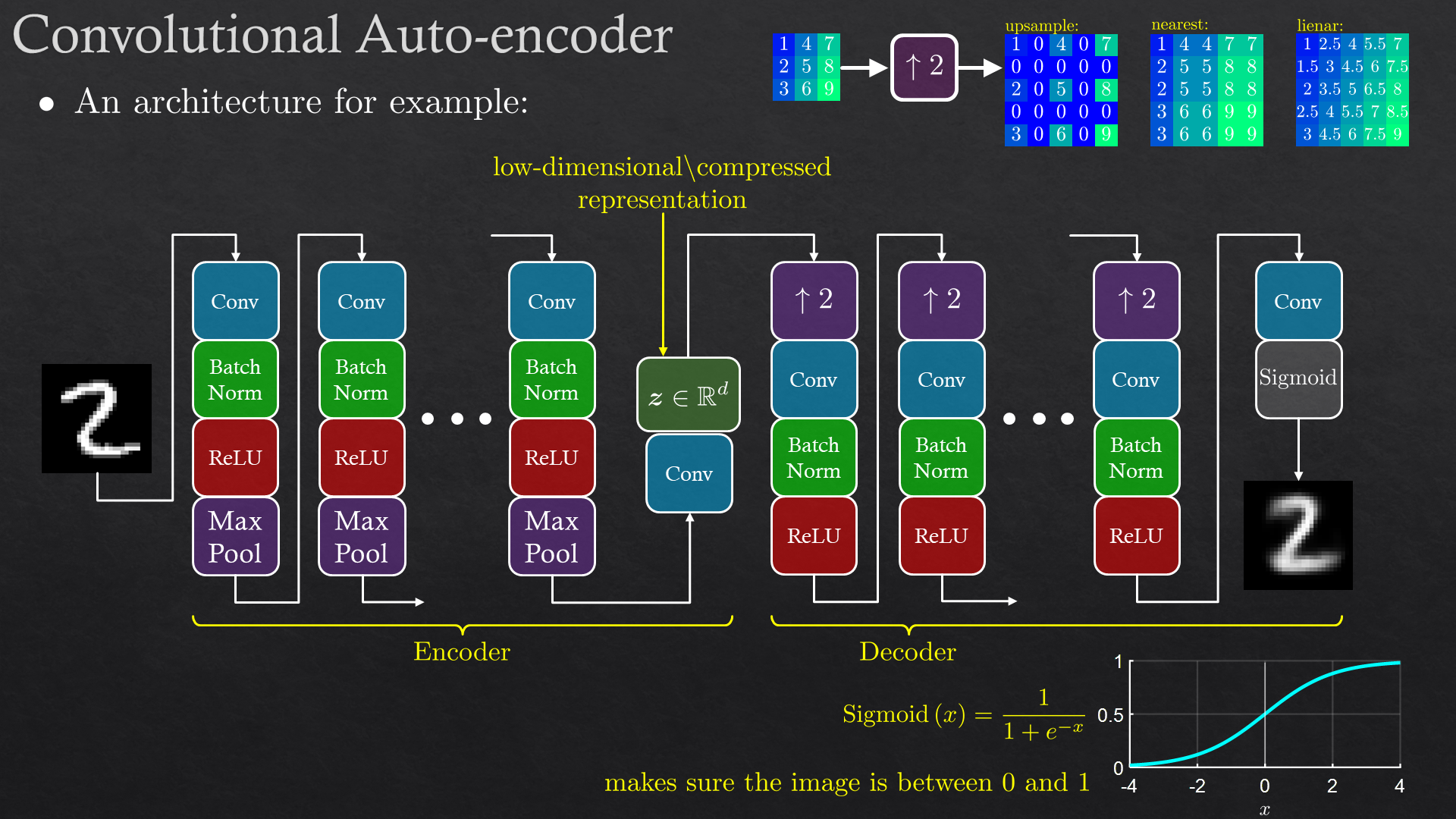

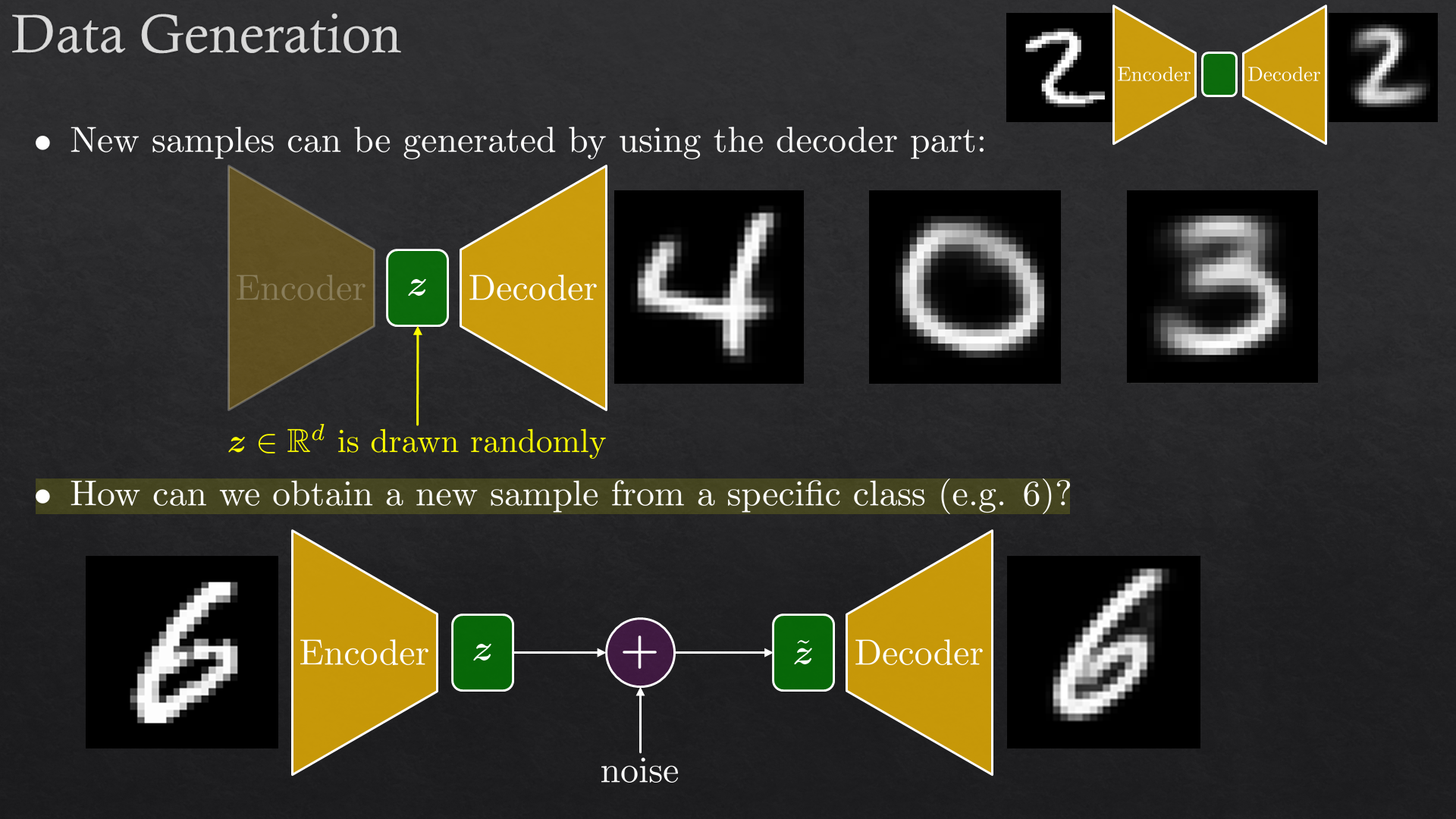

| Unsupervised deep learning | Auto-encodes and GANs |

Slide Samples

Goals

- The participants will be able to match the proper approach and net architecture to a given problem.

- The participants will learn how to use the PyTorch framework.

- The participants will be able to utilize existing nets through transfer learning, retrain them for a specific problem and benchmark the results.

Pre-Built Syllabus

We have given this course in various lengths, targeting different audiences. The final syllabus will be customized according to the audience, the available time and other needs.

| Day | Subject | Details |

|---|---|---|

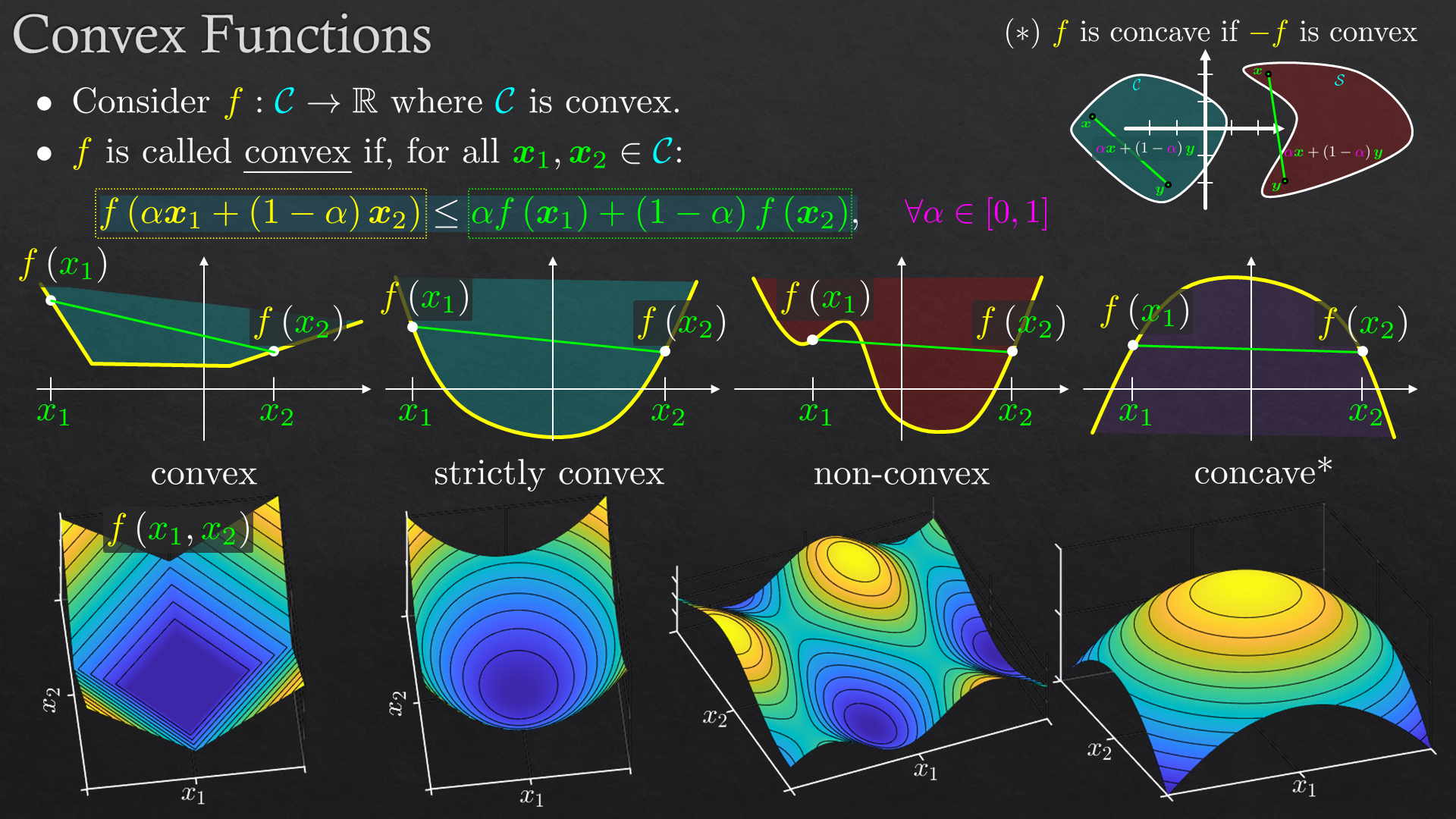

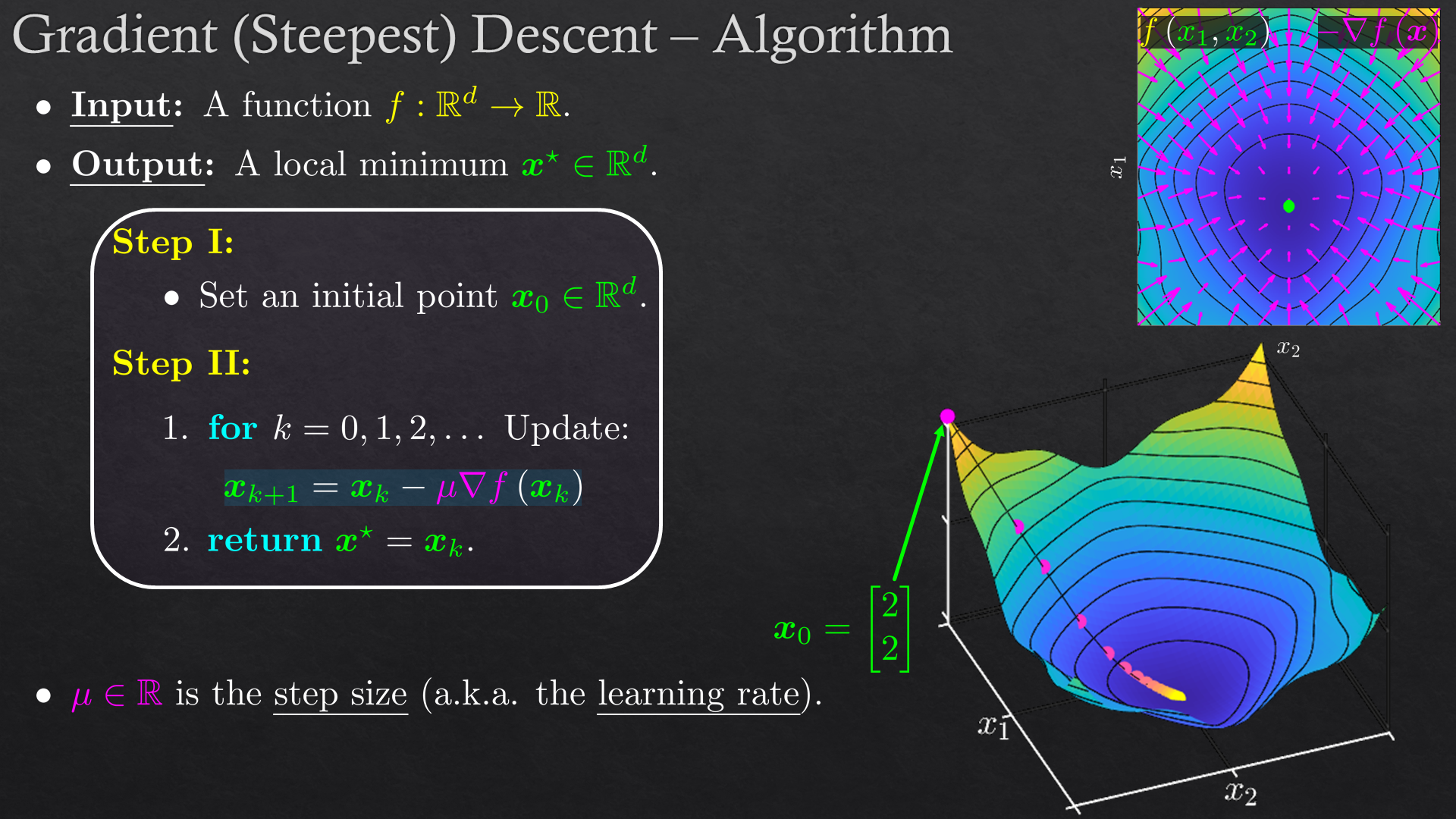

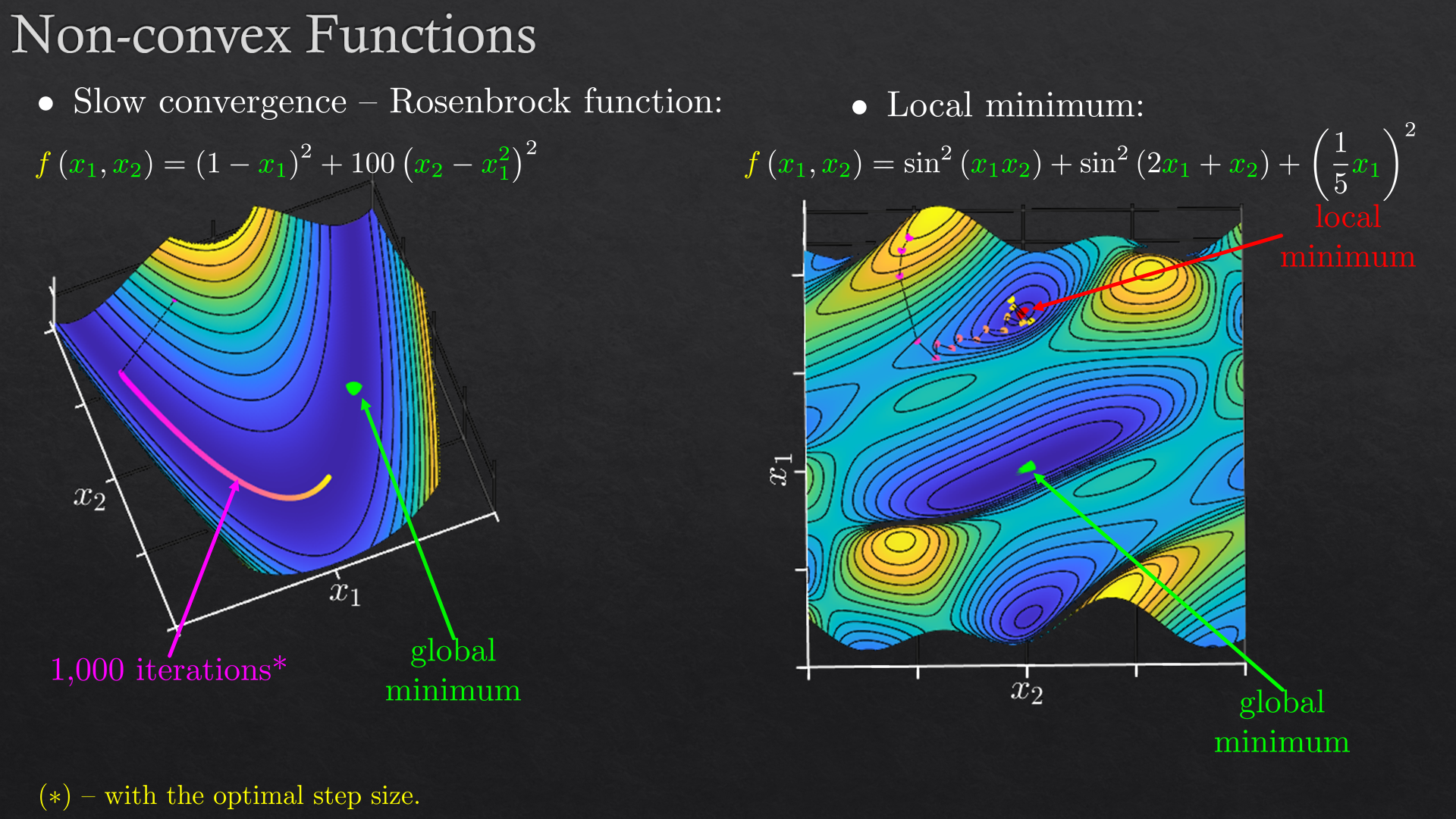

| 1 | Essential machine learning | Regression, classification, optimization (gradient descent) |

| Deep learning fundamentals | Fully connected networks: regression, classification and activation | |

| Back-propagation | Forward pass, backward pass and the chain rule | |

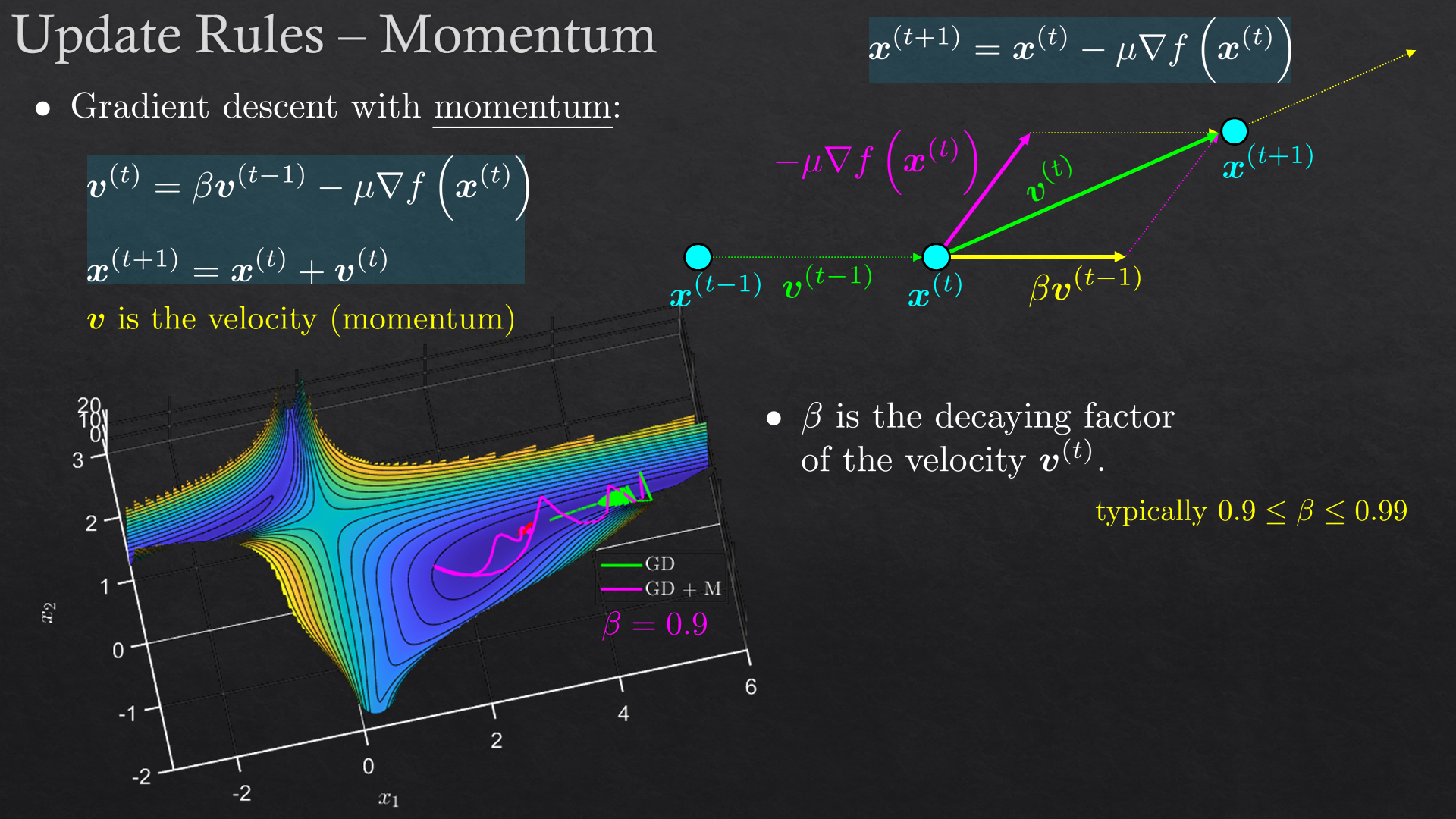

| Initialization and optimization methods | Pre-processing, weights initialization, optimization update rules | |

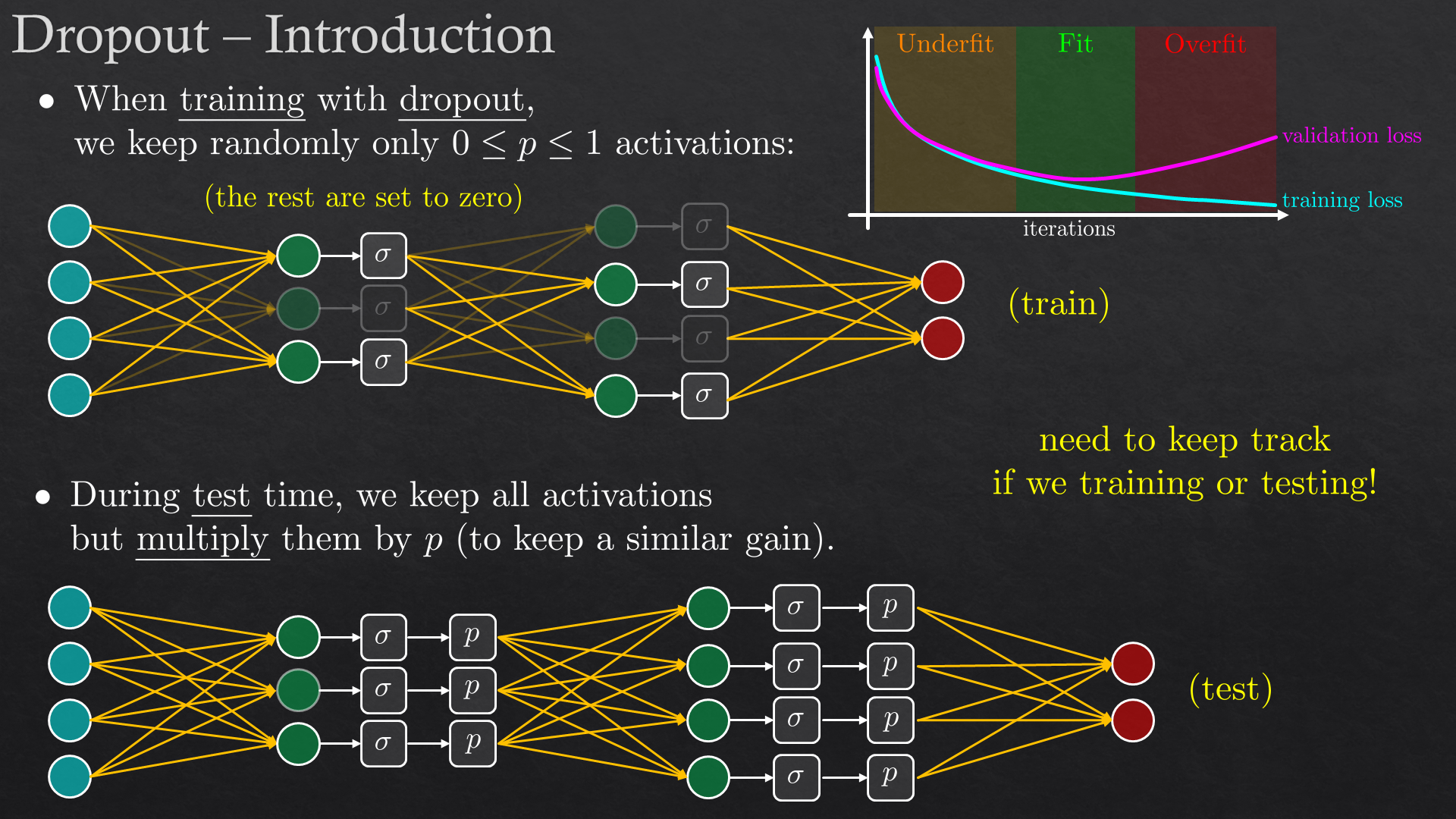

| Regularization methods | Early stopping, weights regularization, weight decay, dropout | |

| Exercise 1 | Regression using a self implemented network (from scratch) | |

| 2 | PyTorch I | Tensors, autograd, modules, GPU |

| PyTorch II | Hooks and callback, activation analysis, learning rate schedulers, TensorBoard | |

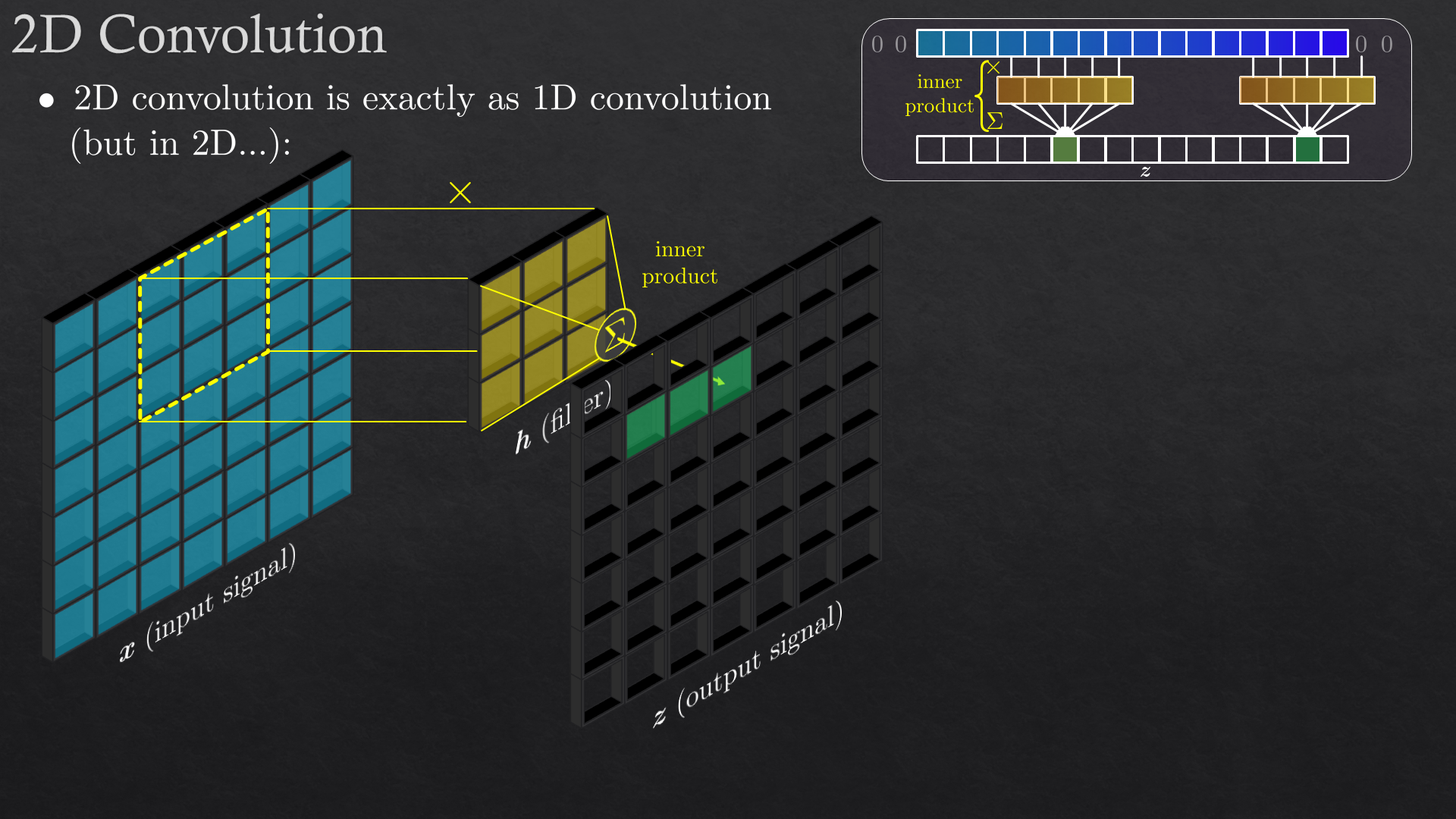

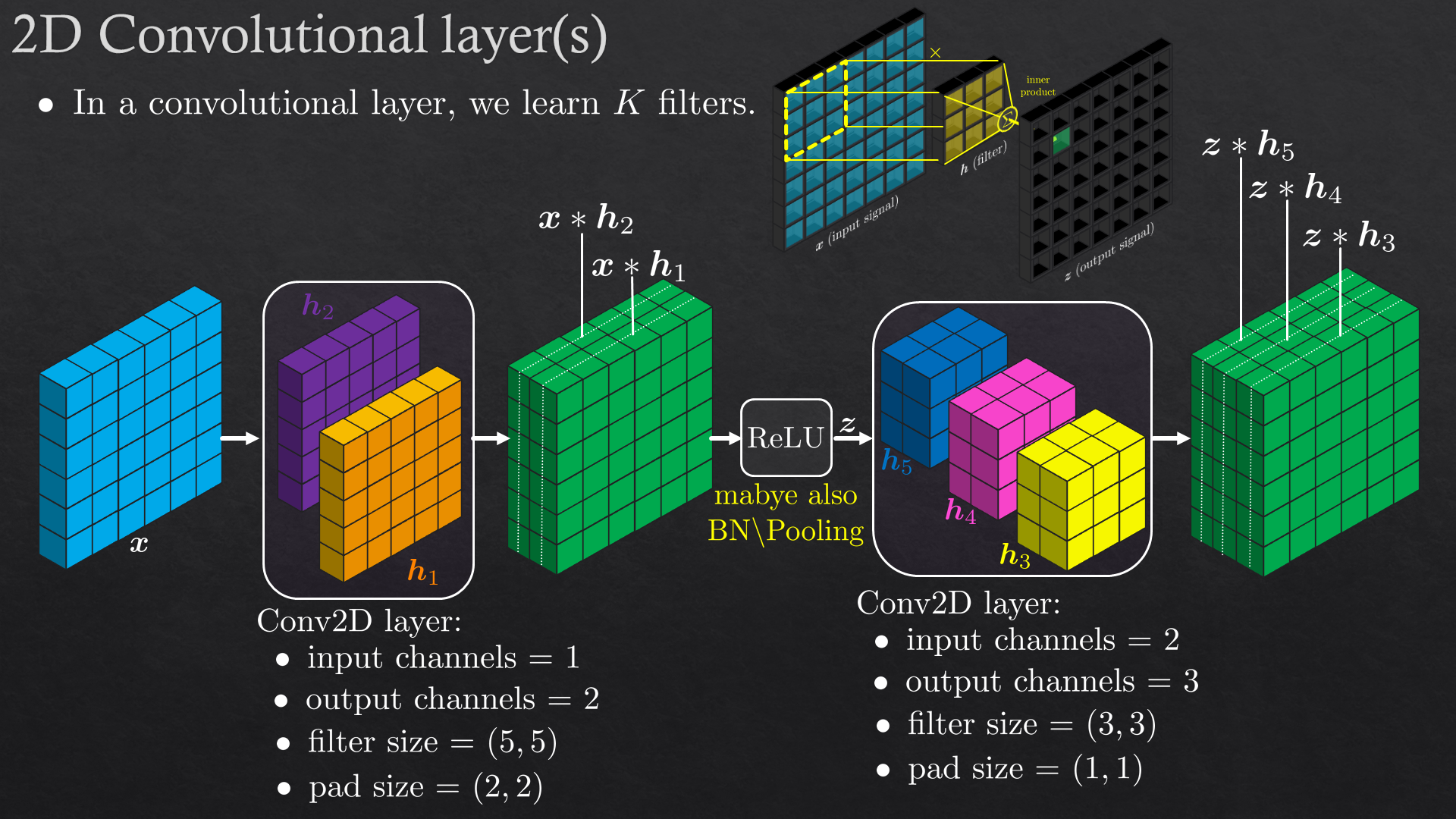

| Convolutional neural network (CNN) | Convolution, convolutional layers, pooling, batch normalization | |

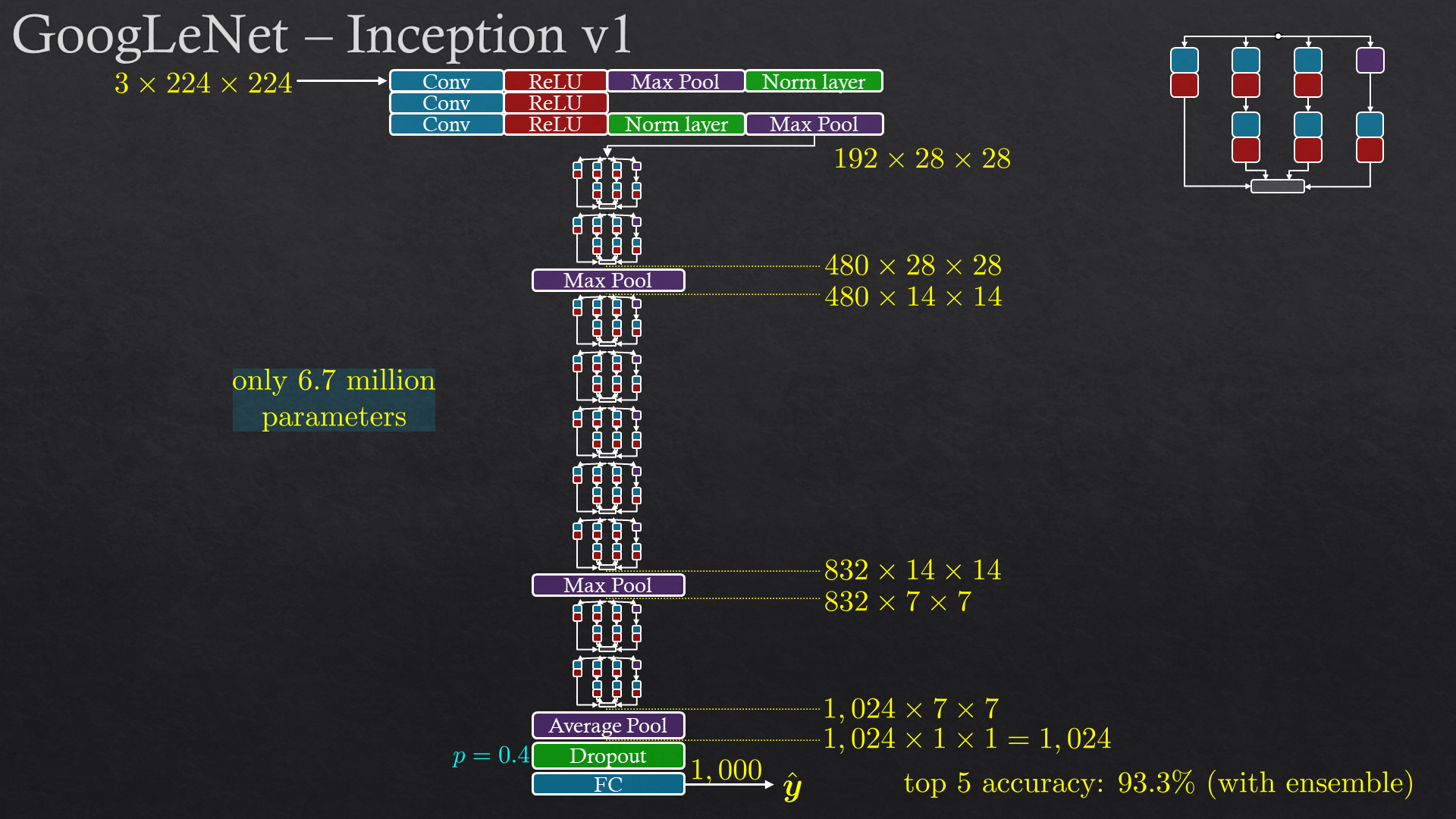

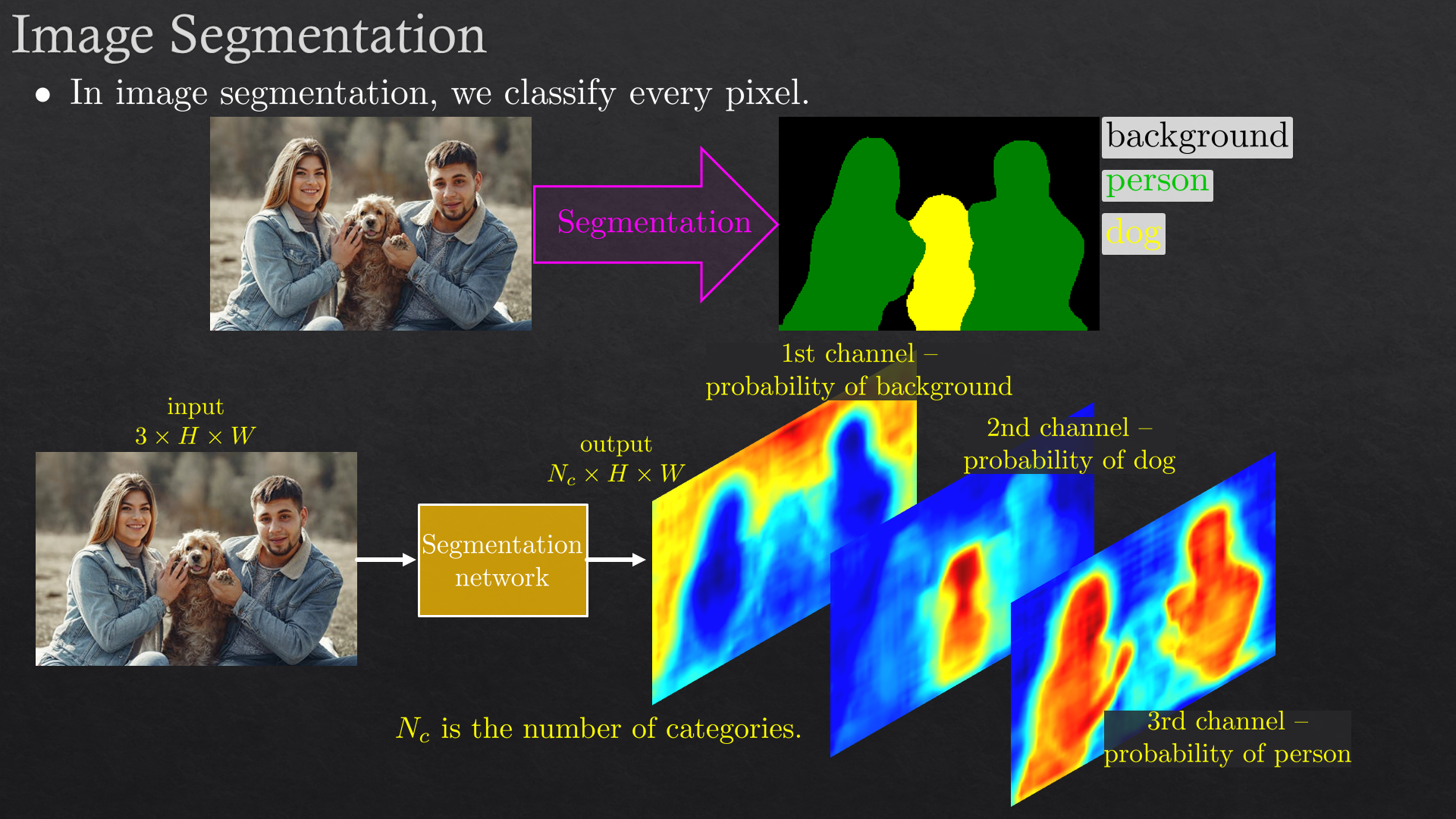

| CNN architectures | Alexnet, VGG, Inception, ResNet, transfer learning, introduction to object detection and segmentation | |

| Hands-on tips | Data augmentation, label smoothing, mixup augmentation, ensembles, 10-crop | |

| Exercise 2 | Classification (Cifar-10) | |

| 3 | Unsupervised (self-supervised) deep learning | Auto-encoders, introduction to GAN |

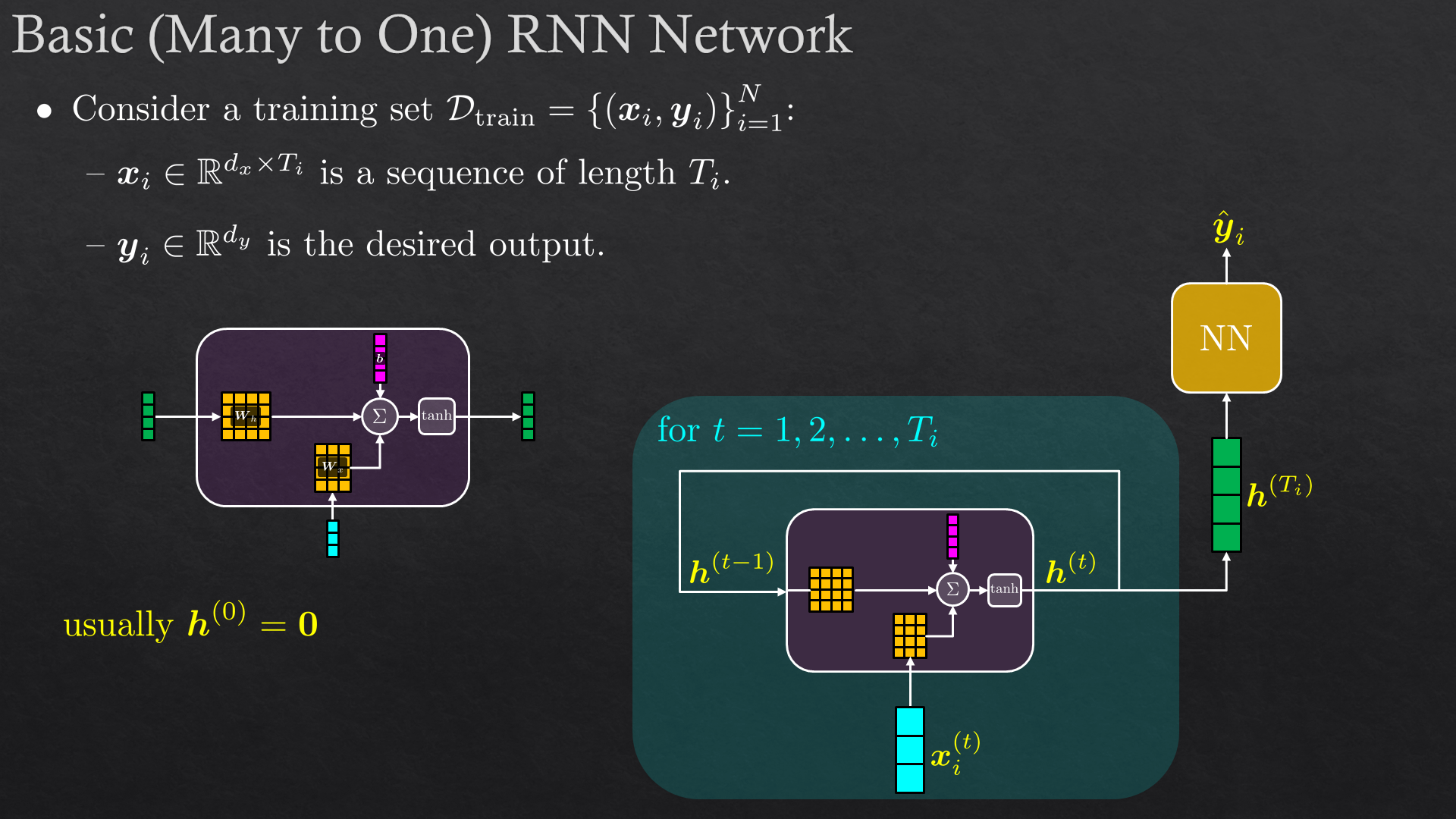

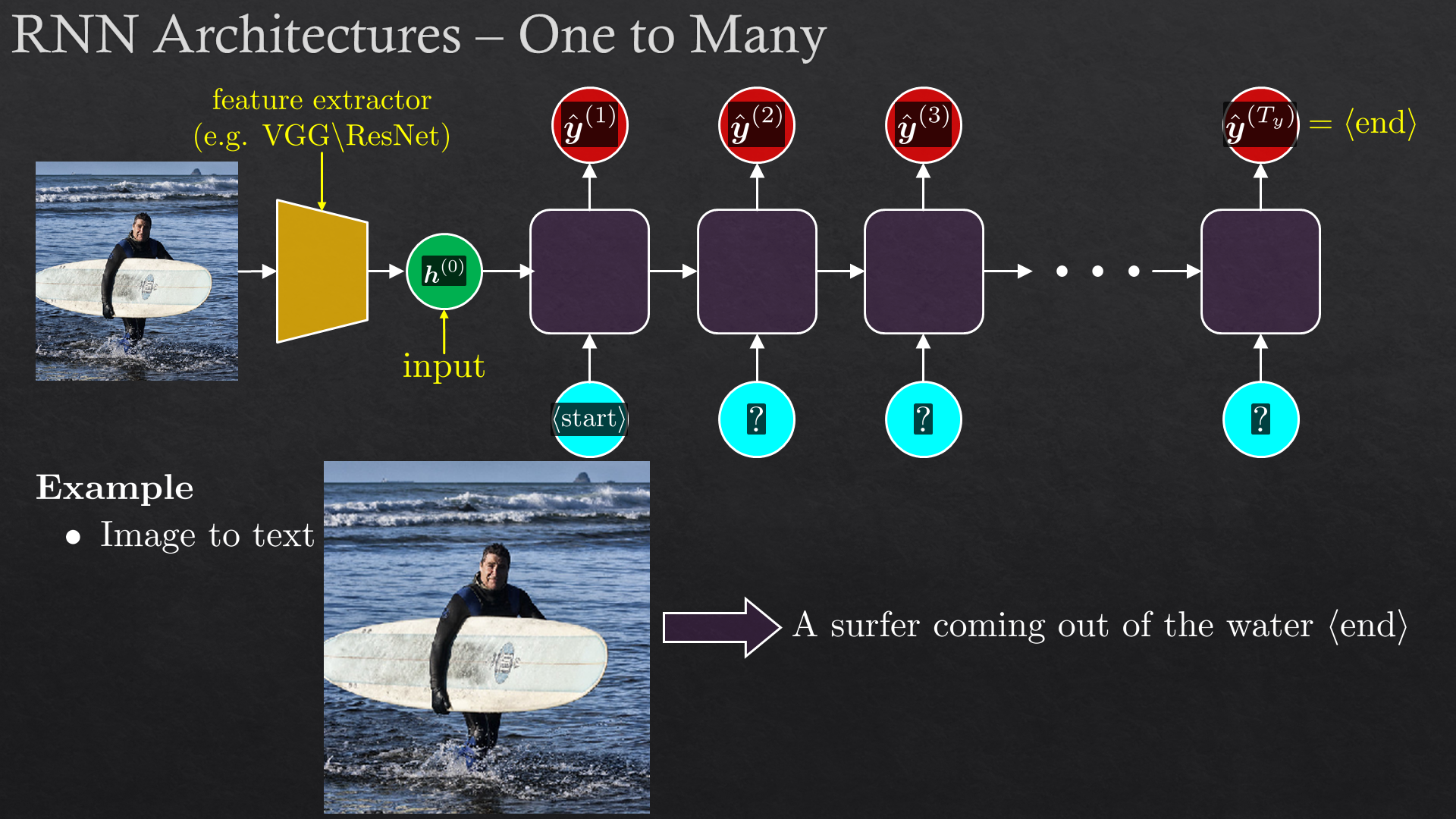

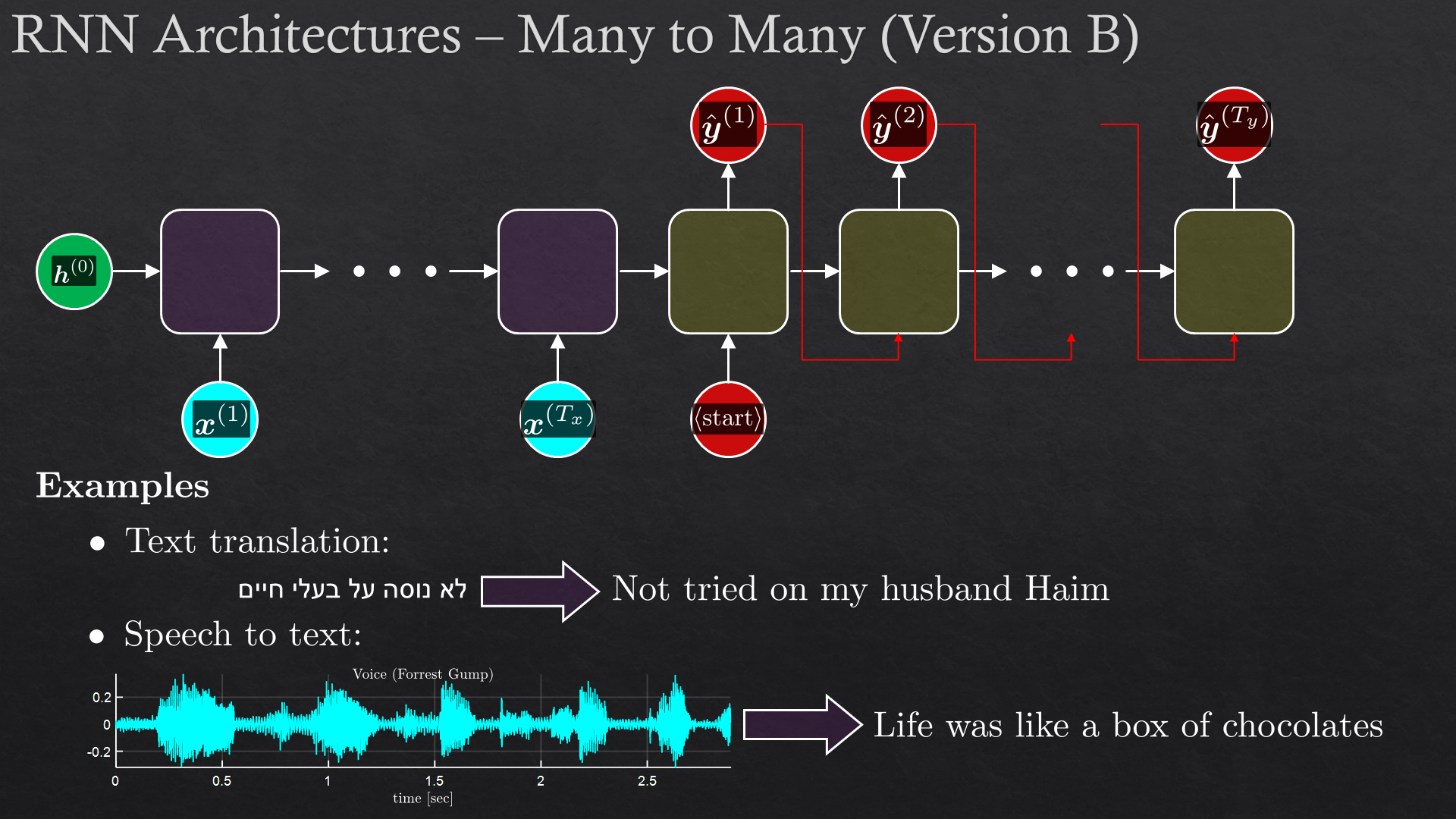

| Recurrent neural network (RNN) | Vanilla RNN, GRU, LSTM, RNN architectures, Sampling from RNN | |

| Exercise 3 | GANs and music generation with RNN |

In addition to the exercises in the syllabus, there are many more mini-exercises (within each topic).
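To give a flavor of the Day 1 material (gradient descent, forward pass, backward pass and the chain rule), here is a minimal sketch of fitting a line from scratch in plain Python. It is an illustration only, not the course's actual exercise code: the function name `train_linear_regression`, the toy data and the hyperparameters are all assumptions made for this example.

```python
def train_linear_regression(xs, ys, lr=0.05, epochs=500):
    """Fit y = w*x + b by gradient descent on the mean squared error.

    Hypothetical helper for illustration; not taken from the course material.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions and their errors.
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        # Backward pass: chain rule applied to L = mean(error ** 2).
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Gradient descent update rule.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy, noise-free data generated from y = 3x + 1 (an assumption for the demo).
xs = [i / 10 for i in range(-10, 11)]
ys = [3.0 * x + 1.0 for x in xs]
w, b = train_linear_regression(xs, ys)
print(f"w = {w:.4f}, b = {b:.4f}")
```

On this toy data the learned `w` and `b` should approach the slope and intercept used to generate it. A fully connected network repeats the same forward/backward pattern layer by layer.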

Prerequisites

Knowledge of machine learning is required. If needed, we recommend first taking one of our machine learning courses: Machine Learning Methods or Introduction to Machine Learning.

- Linear algebra

- Basic calculus

- Machine learning

- Experience with a scientific language (Python, Matlab, R, etc.)

If any of the prerequisites are not met, we can offer a half-day sprint on Linear Algebra, Calculus, Probability and Python.