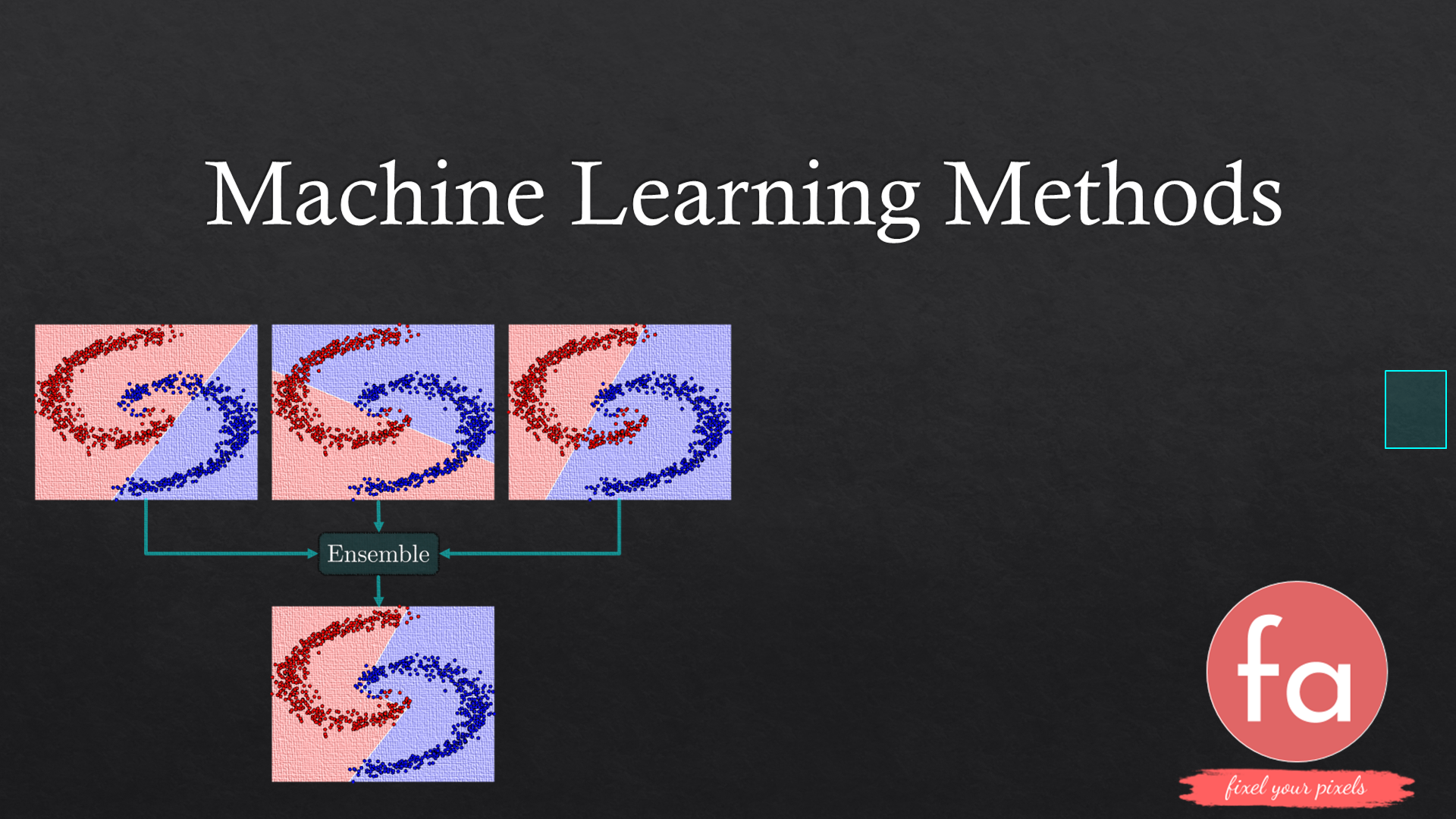

Machine Learning Methods

Modern machine learning methods for solving real-world problems with a hands-on approach.

Overview

The course:

${\color{lime}\surd}$ Covers fundamental and advanced concepts.

${\color{lime}\surd}$ Provides practical tools for solving data science tasks.

${\color{lime}\surd}$ Builds hands-on experience and intuition through interactive visualization.

${\color{lime}\surd}$ Targets people who need a deep understanding of ML in order to use it in their daily tasks.

Main Topics

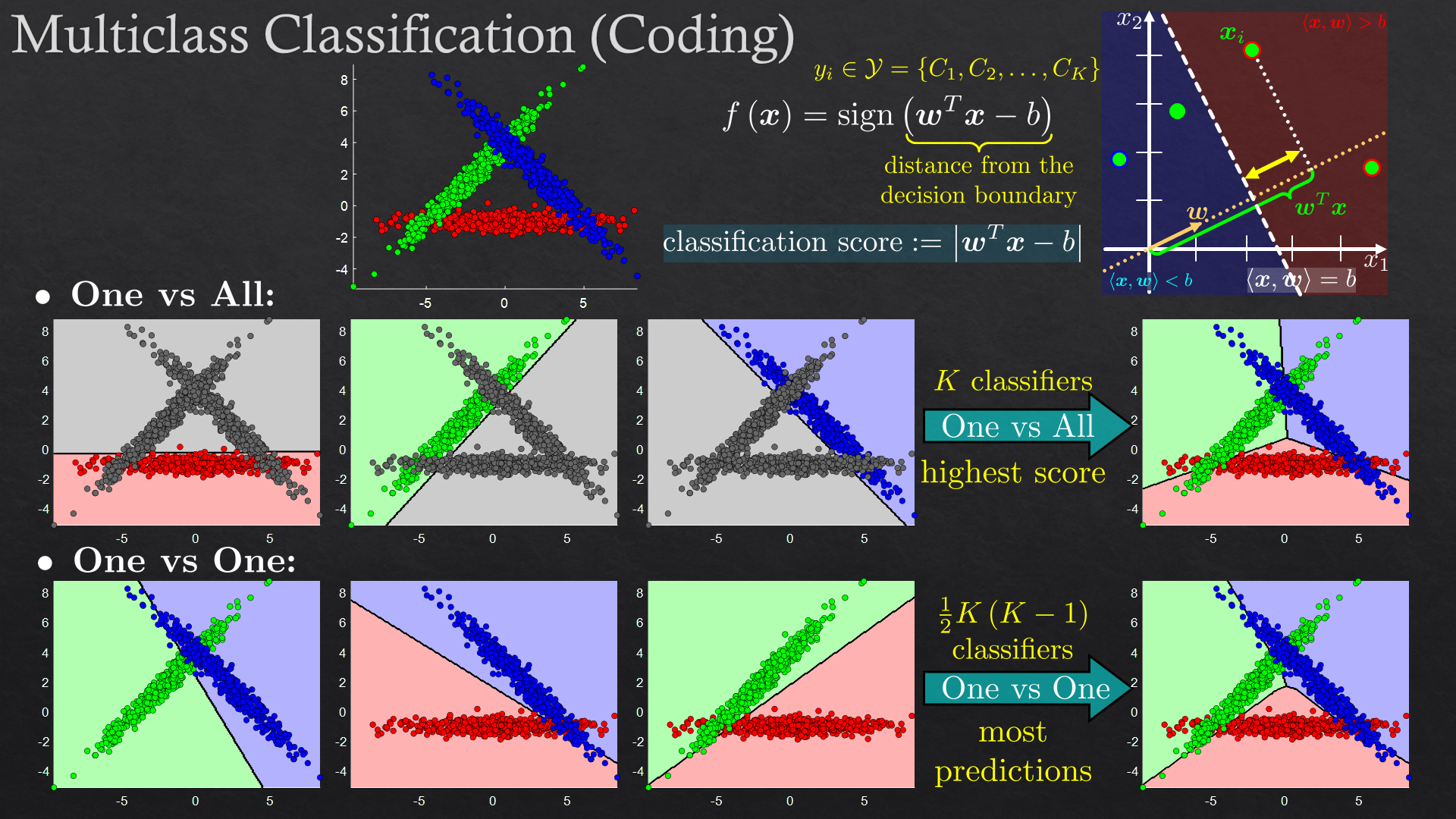

| Supervised Learning | Classification, regression and estimation |

| Unsupervised Learning | Density estimation, clustering and dimensionality reduction |

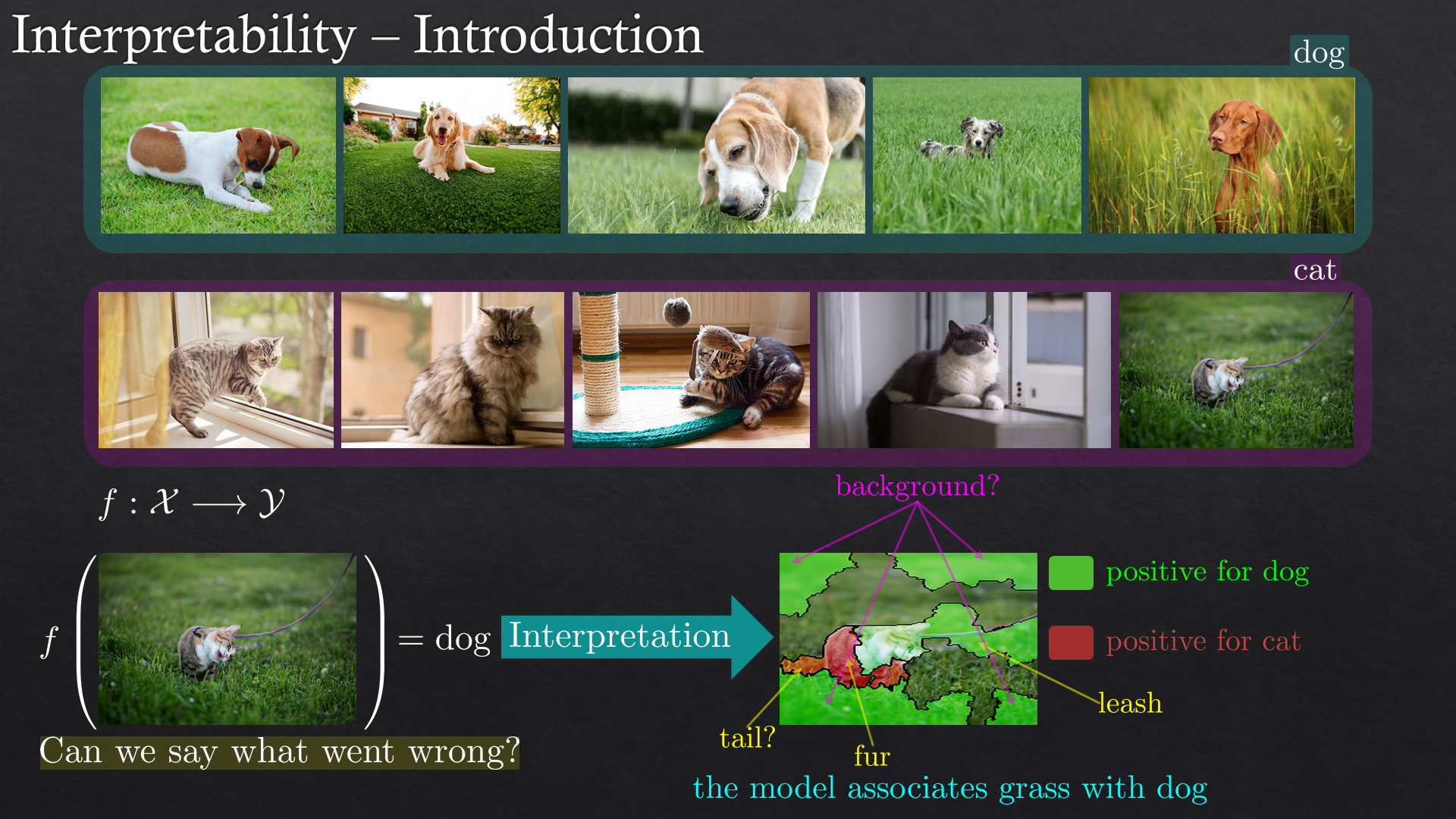

| Methods | Boosting, interpretability, NLP and time series forecasting |

| Introduction to Deep Learning | FCN and CNN, supervised and unsupervised, framework and tools |

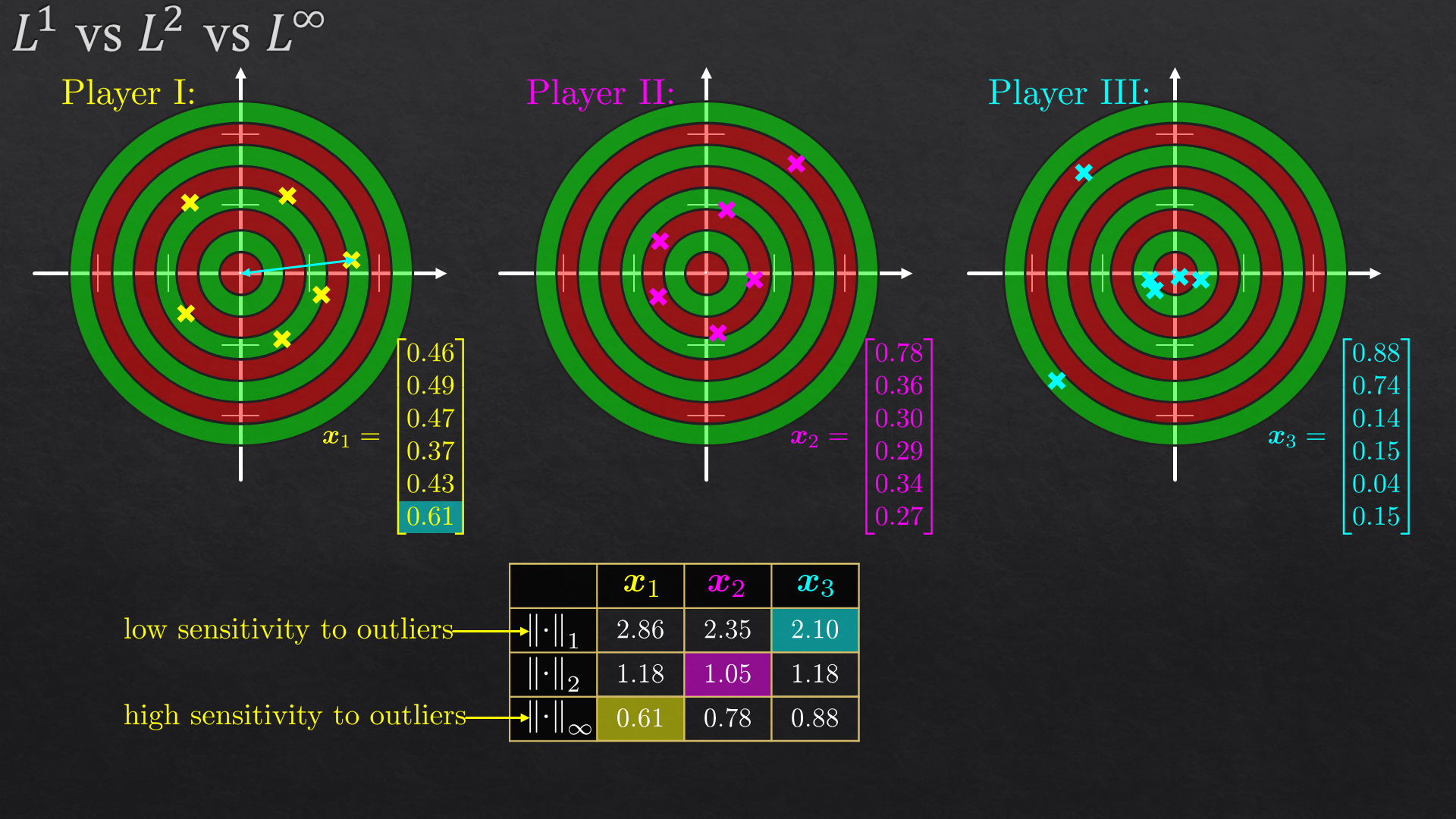

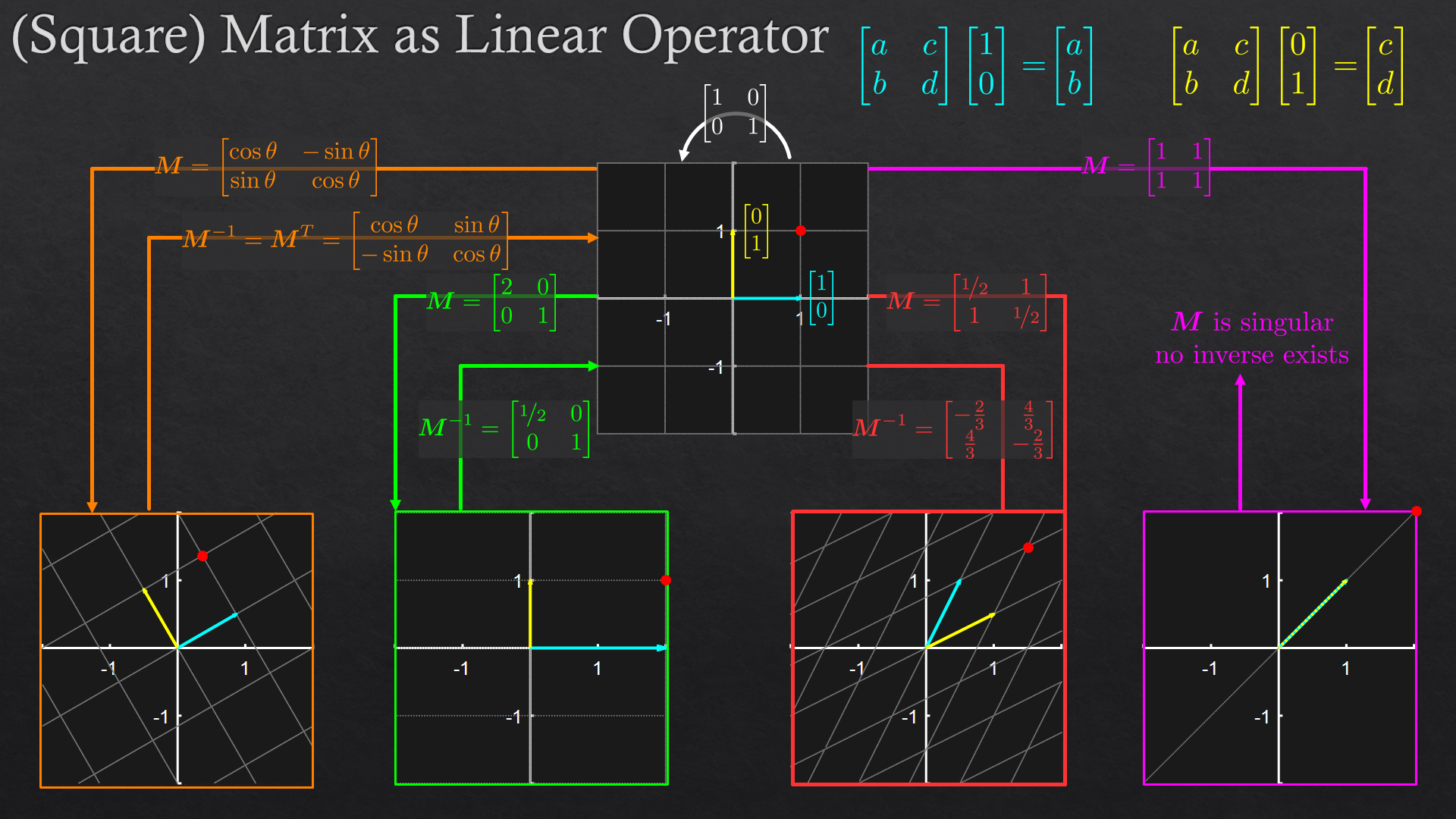

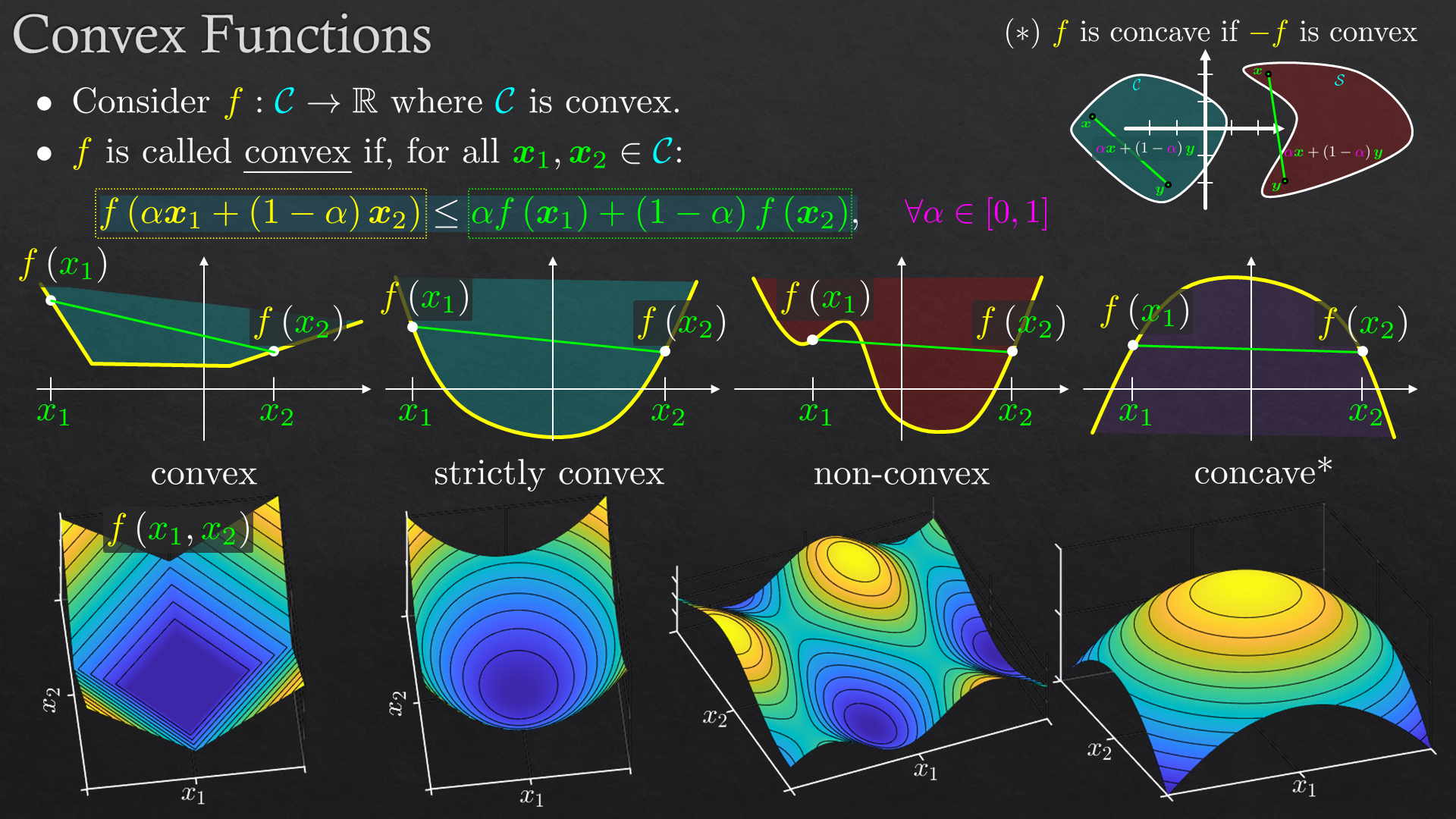

Slide Samples

Goals

- The participants will be able to match the proper approach to a given problem.

- The participants will be able to weigh the advantages and disadvantages of the learned methods.

- The participants will be able to implement, adjust, fine-tune, and benchmark the chosen method.

Pre-Built Syllabus

We have given this course in various lengths, targeting different audiences. The final syllabus will be customized according to the audience, the available time and other needs.

| Day | Subject | Details |

|---|---|---|

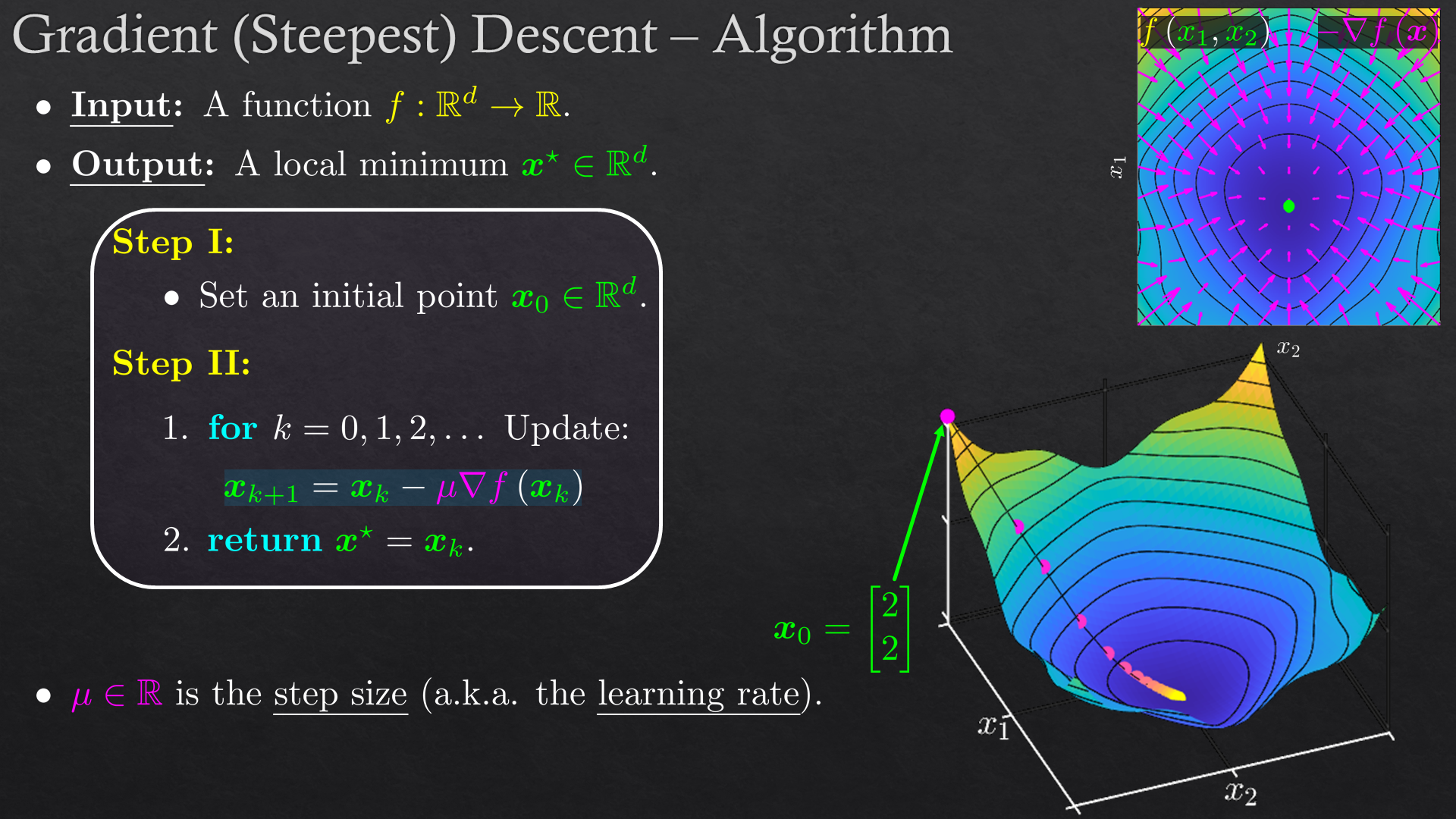

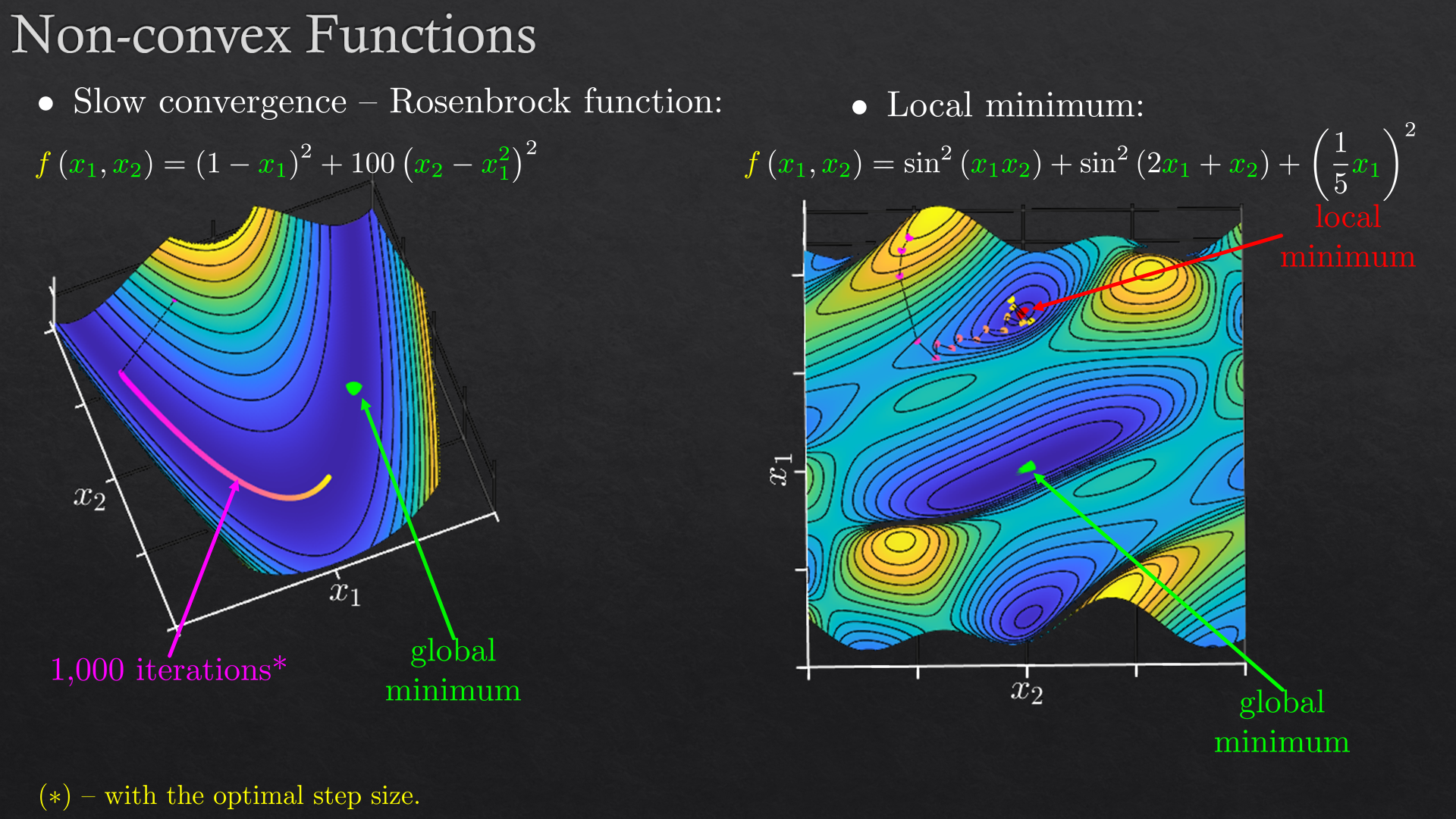

| 1 | Linear Algebra and Optimization | Vectors, norms, matrices, gradient descent, local extreme points, convexity, constrained/unconstrained optimization |

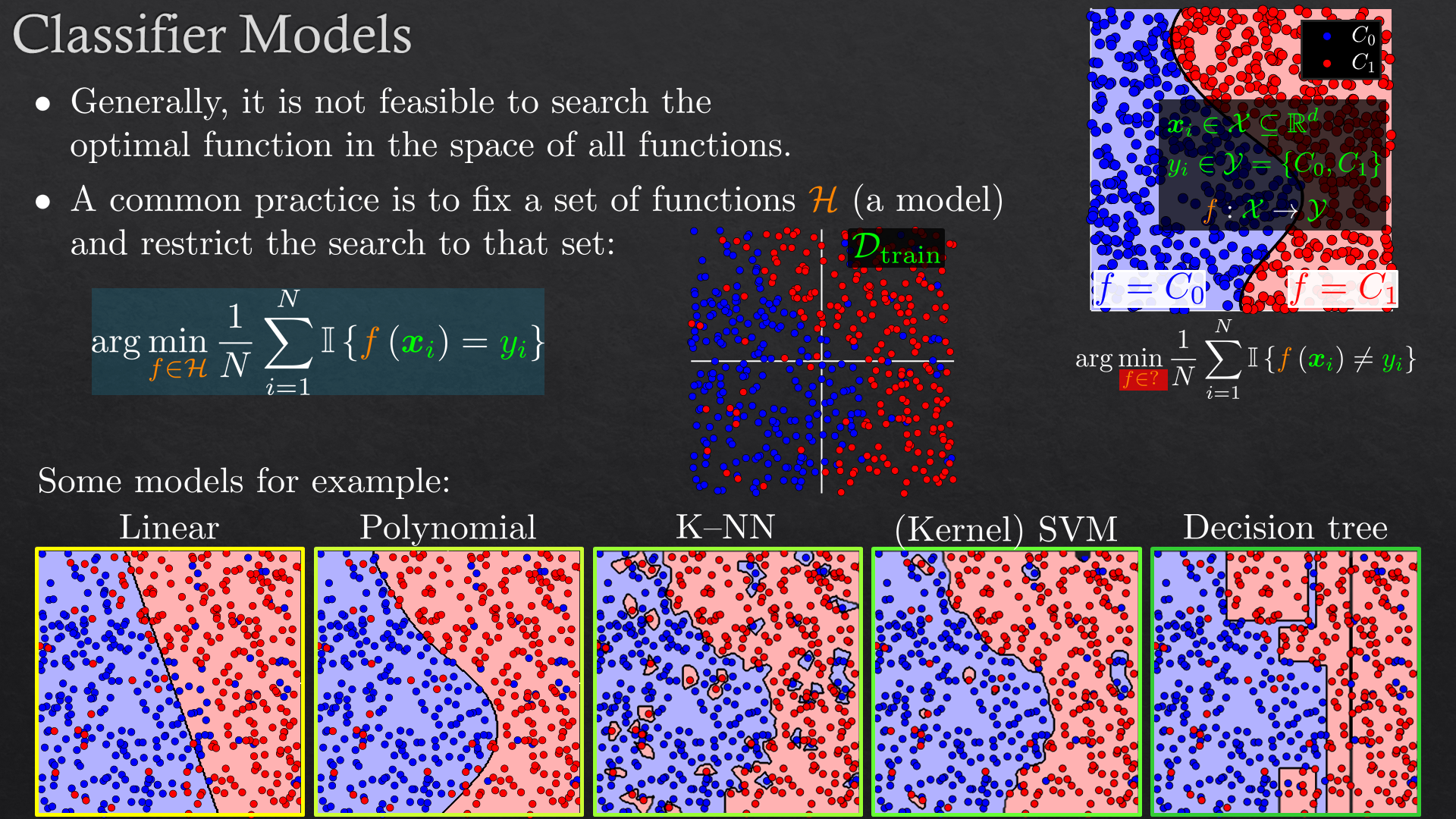

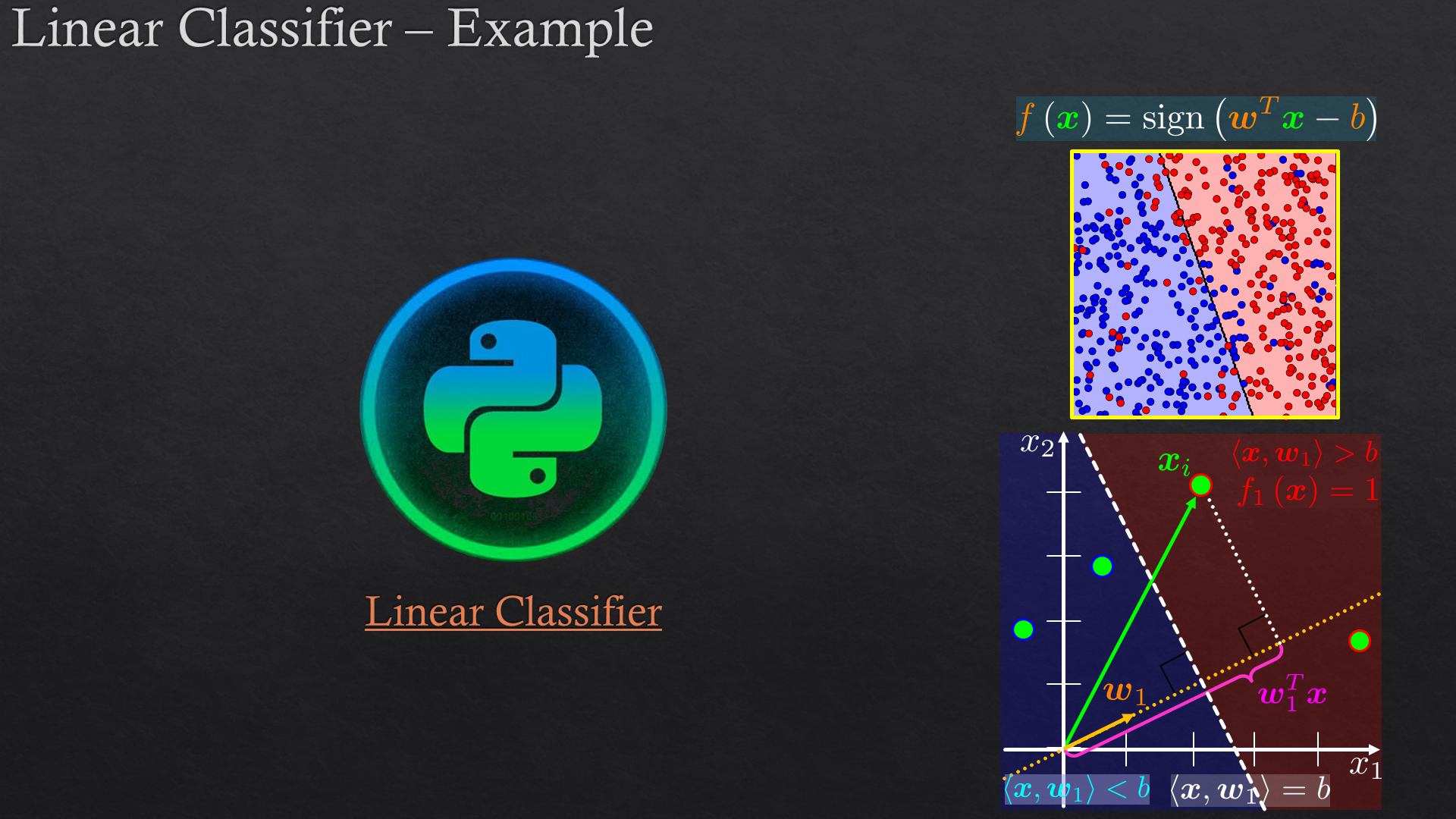

| Classification | Linear classification, support vector machine (SVM), K-nearest neighbors (K-NN) | |

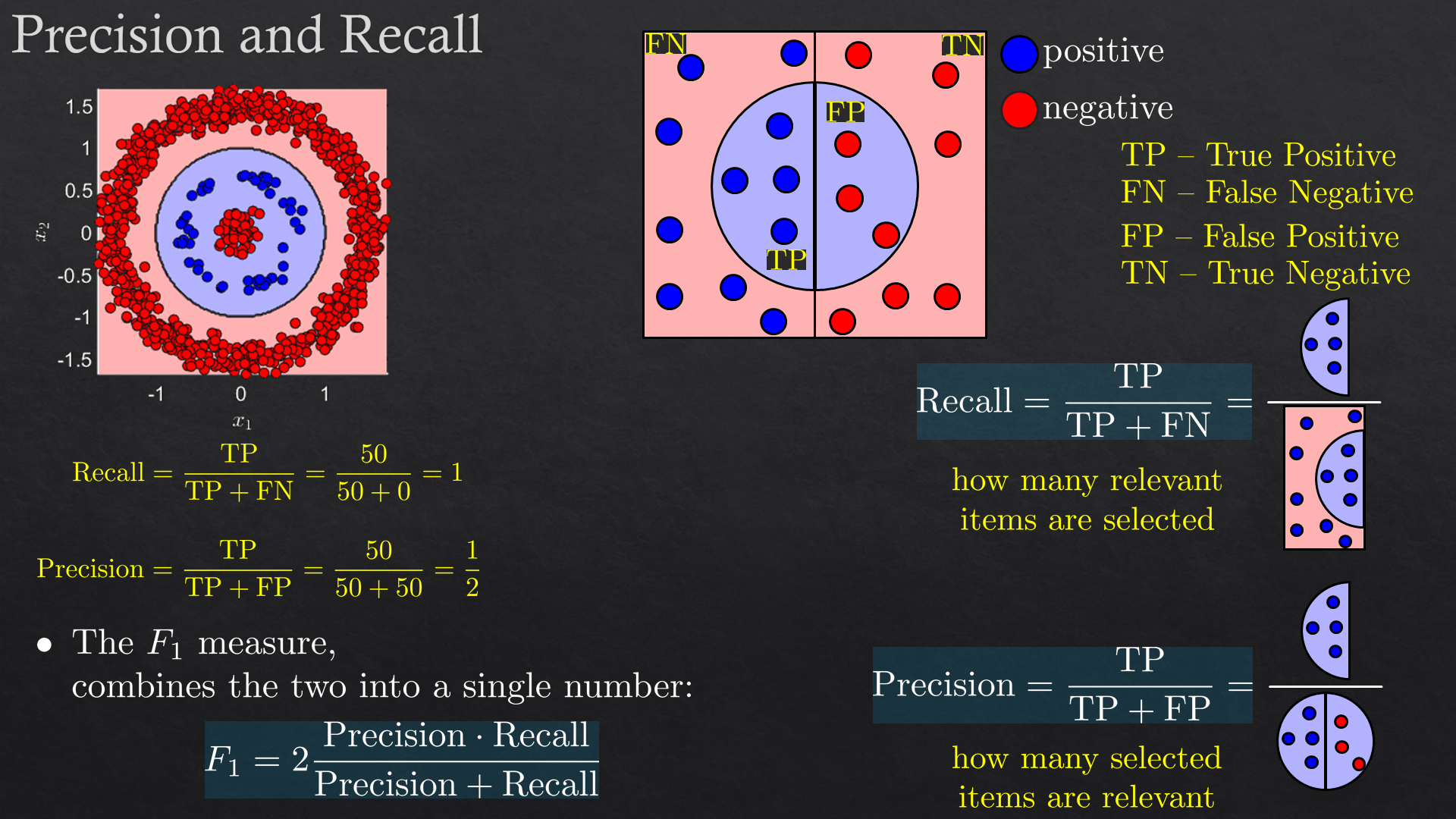

| Performance Evaluation | Overfit/underfit, cross validation, confusion matrix, loss (risk) function, Scoring (metric): accuracy, precision, recall, f1, ROC and AUC | |

| Feature Engineering | Feature transform, the kernel trick, feature selection | |

| Exercise 0 | Classification and Python: NumPy, SciPy, Pandas, Matplotlib and Seaborn |

|

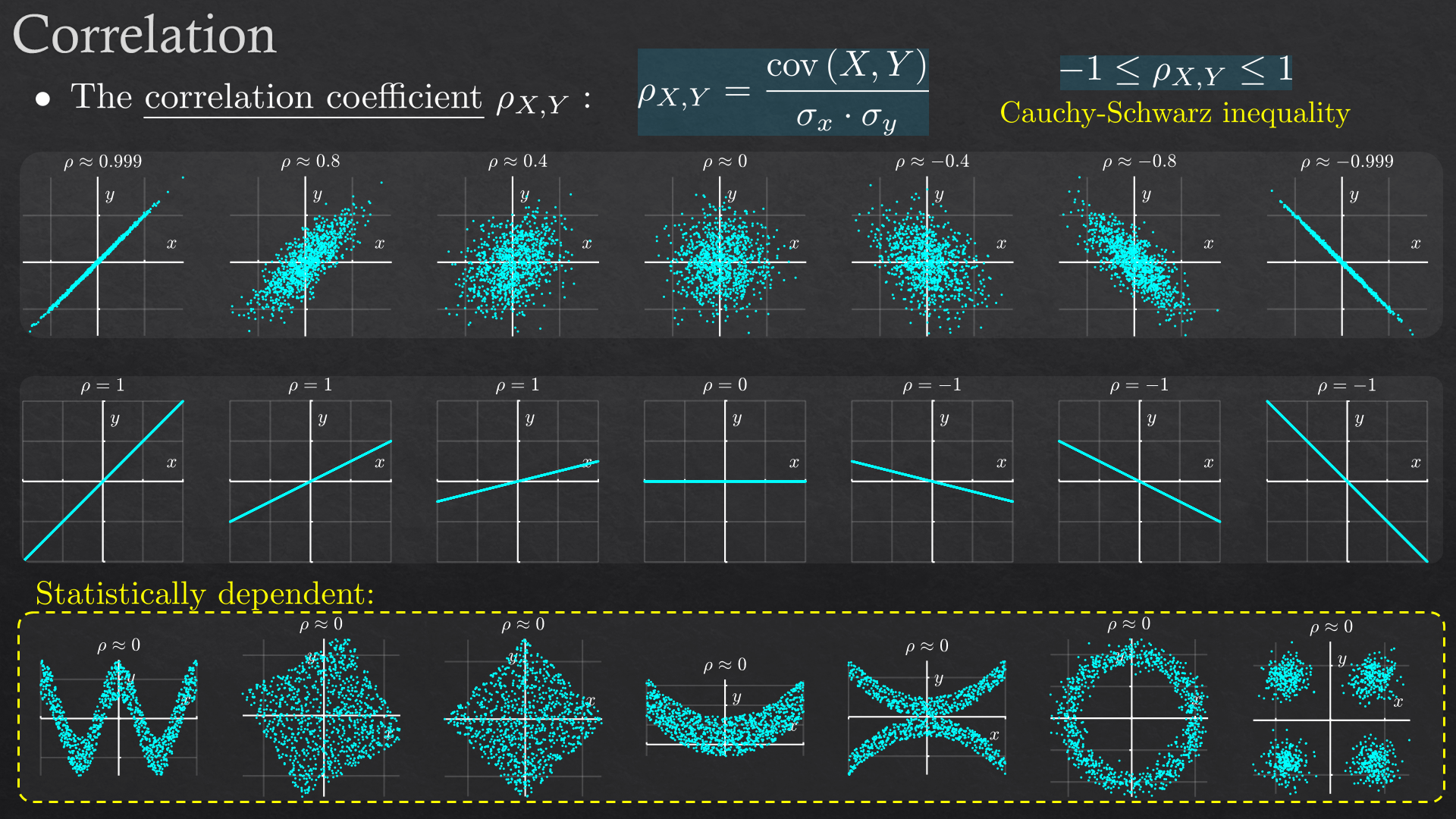

| 2 | Essential Probability | Random variable\vector (discrete\continuous), expected value and higher moments, statistical dependency and correlation, Bayes’ rule, Gaussian random vector |

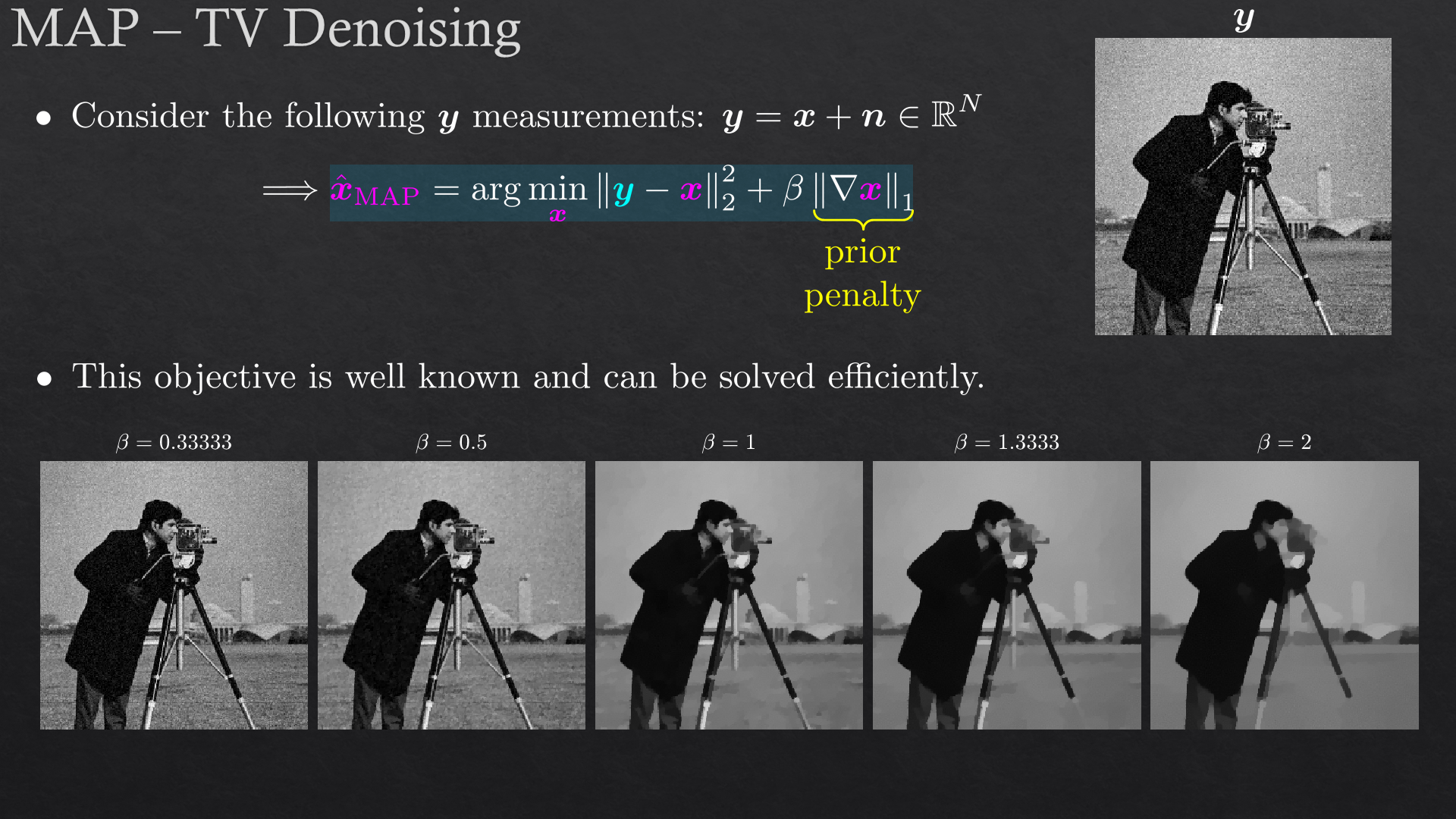

| Parametric Estimation | Maximum likelihood (ML), maximum a posteriori (MAP), method of moments | |

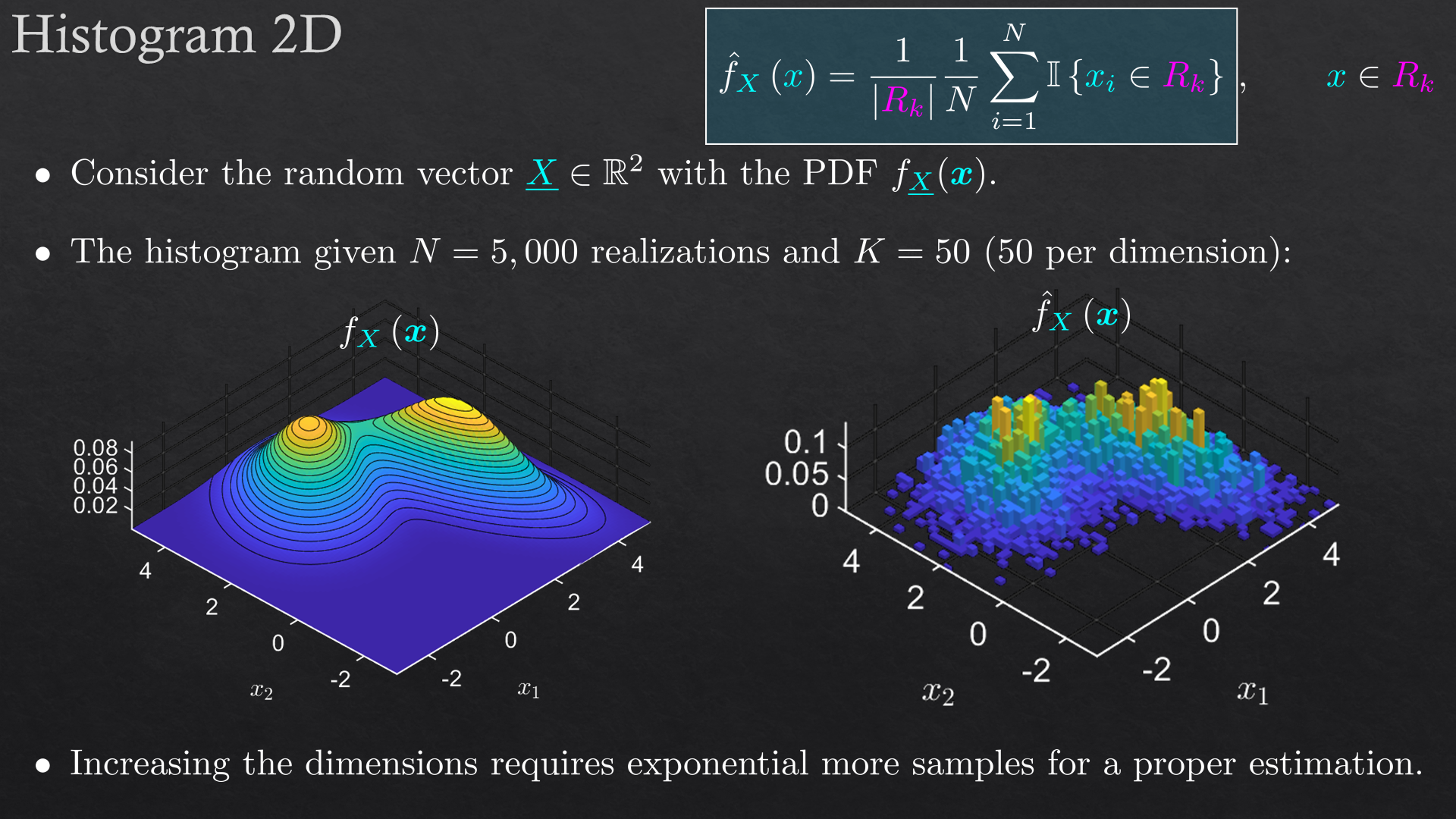

| Non Parametric Estimation | Histogram, kernel density estimation (KDE), order statistics | |

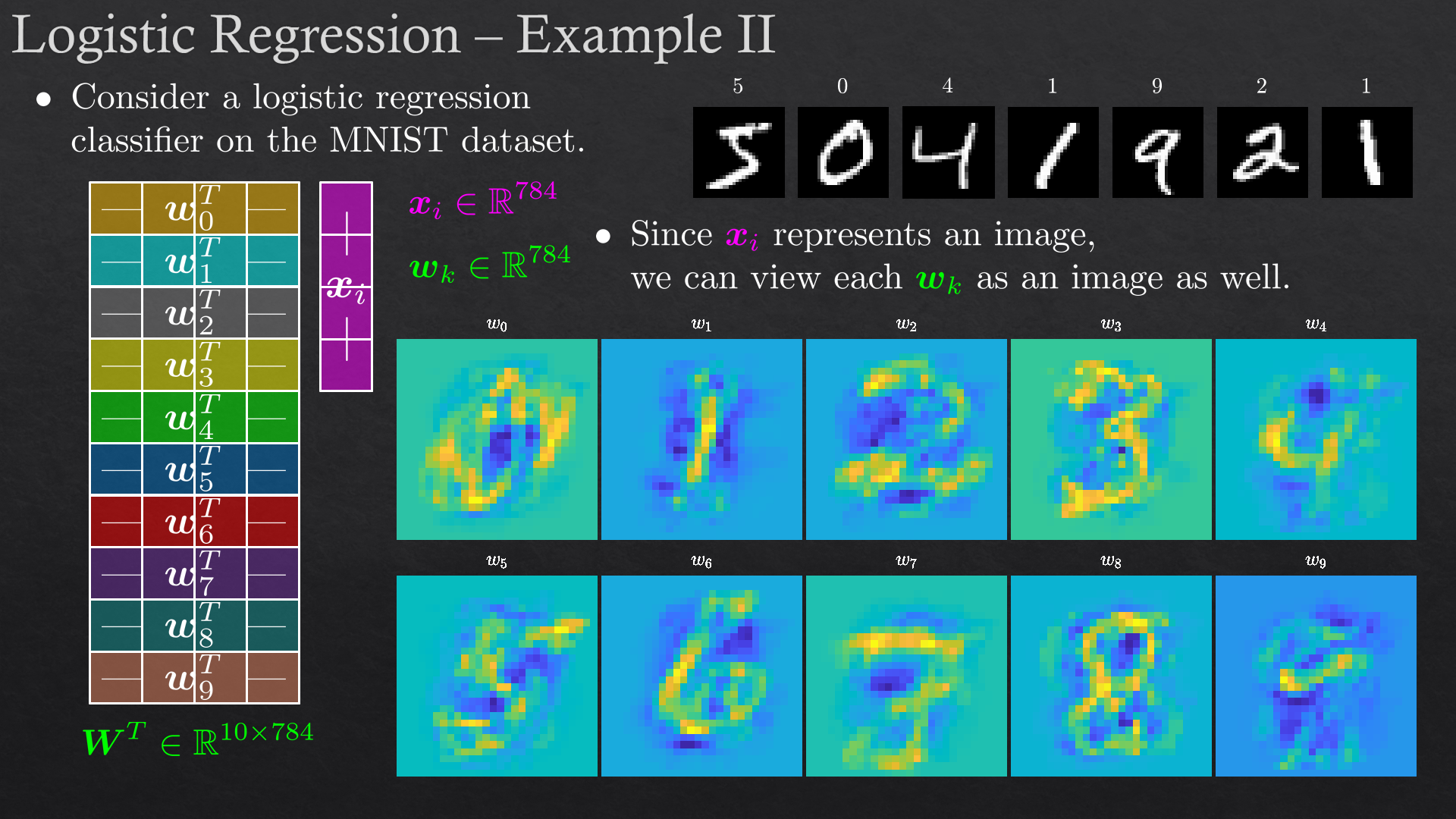

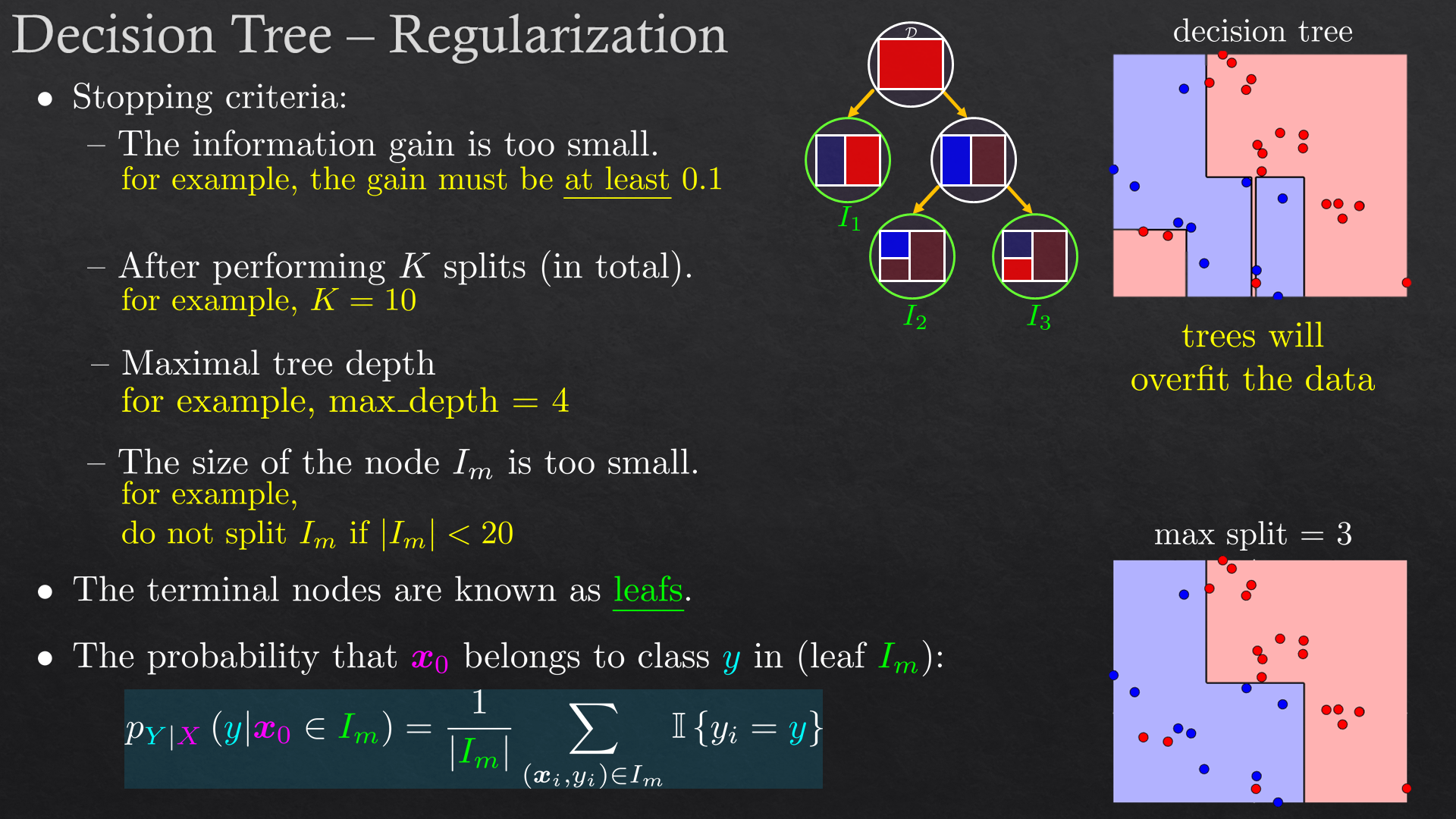

| Statistical Classification | Logistic regression, MAP classifier, decision trees | |

| Exercise 1 | Data analysis, visualization and classification | |

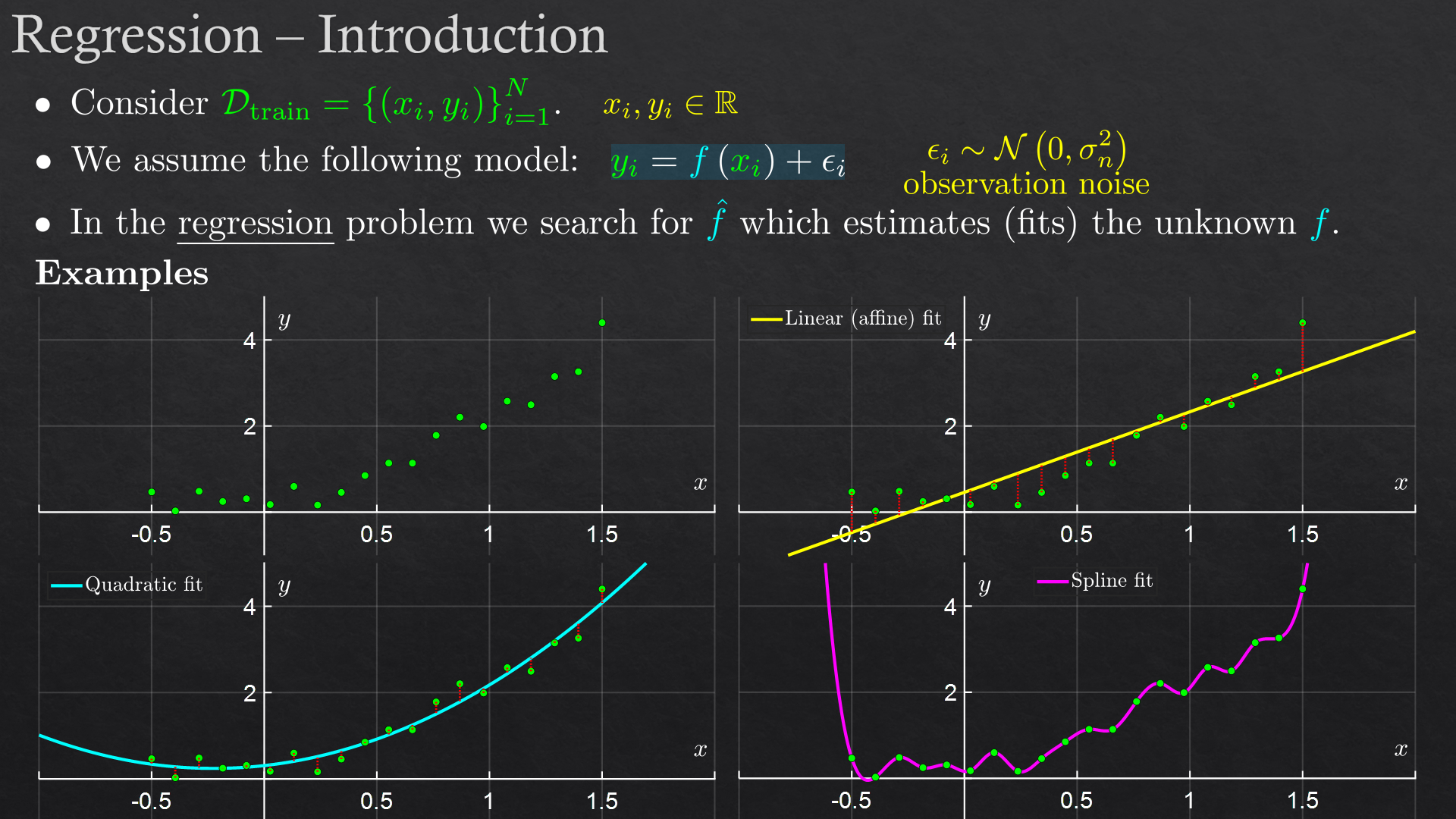

| 3 | Linear Regression | Linear least squares, polynomial fit and feature transform, R2 score, weighted linear regression, RANSAC method |

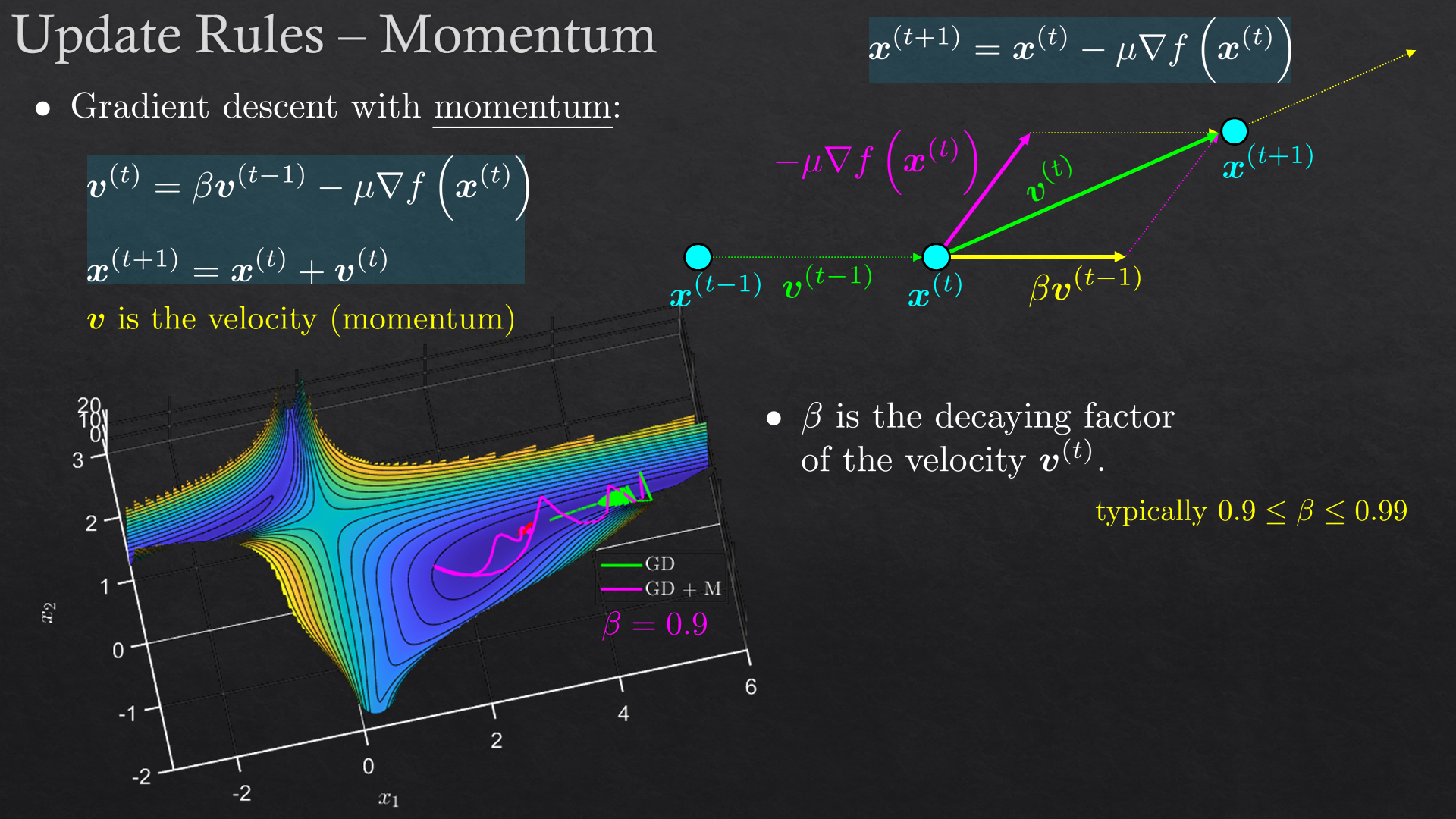

| Non Linear Regression | 1st and 2nd order methods, line-search | |

| Local and Non Parametric Regression | Kernel regression (interpolation), local polynomial regression, splines (interpolation), regression trees | |

| Exercise 2 | Data analysis, visualization and regression | |

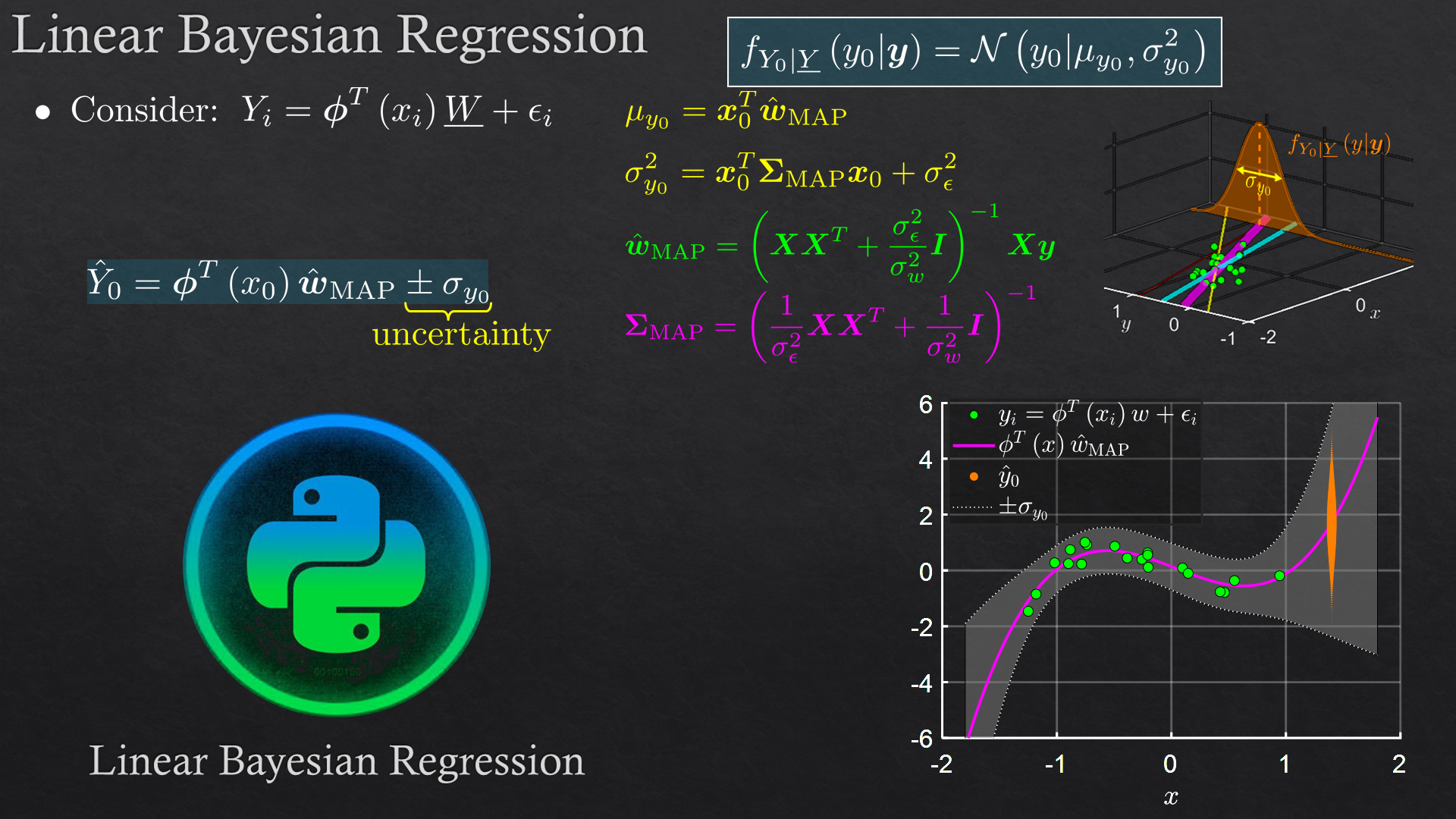

| 4 | Bayesian Regression | Bayesian linear regression, Gaussian processes (GP) |

| Robust Regression | Robust regression methods, Huber loss | |

| Boosting and Ensembles | Bagging, random forests, gradient boosting, Adaboost, feature importance | |

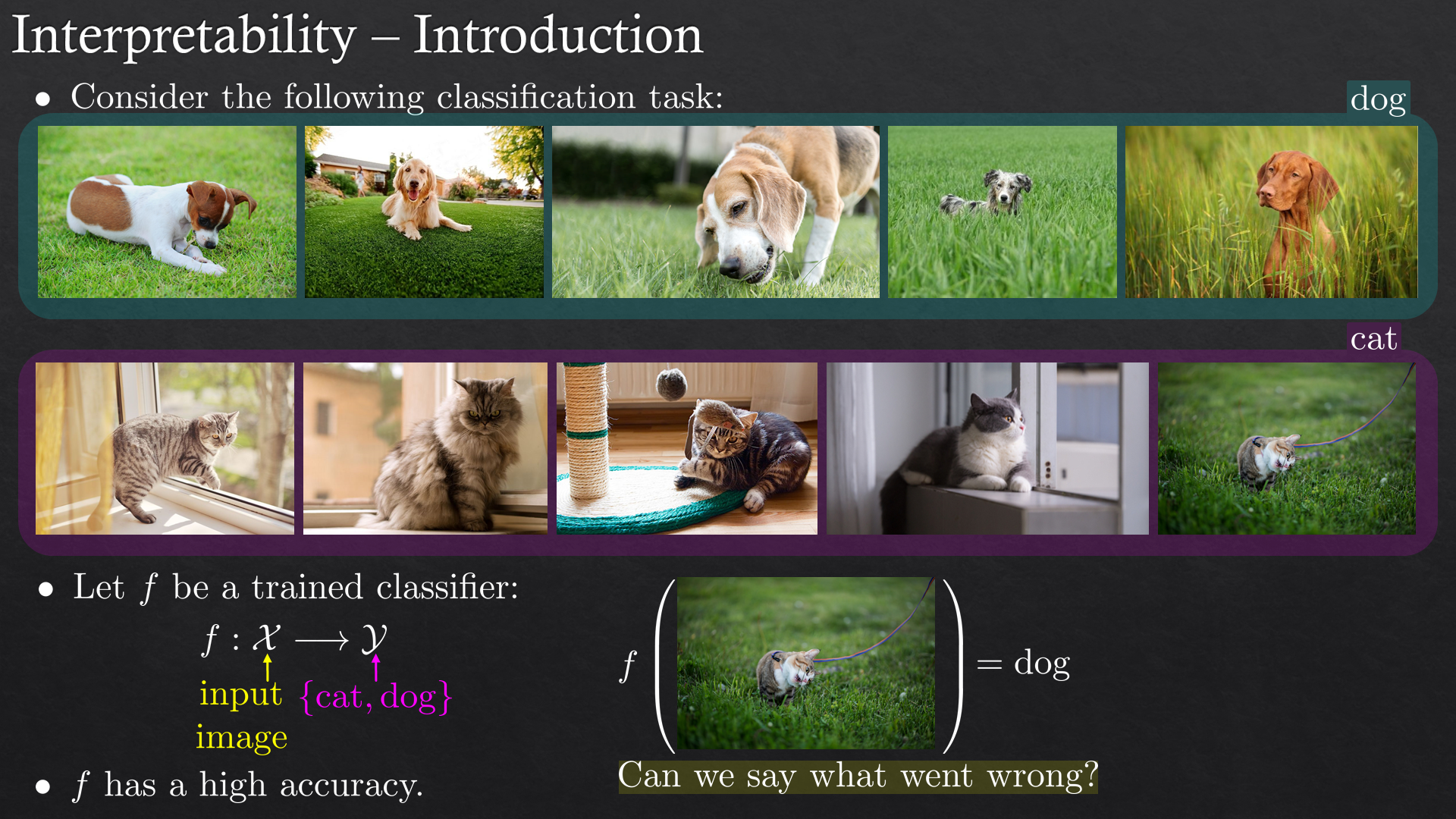

| Interpretability \ Explainablility Methods | LIME, SHAP | |

| Exercise 3 | Advanced regression and estimation | |

| 5 | Clustering I | K-means, K-medoids, Gaussian mixture models (GMM) |

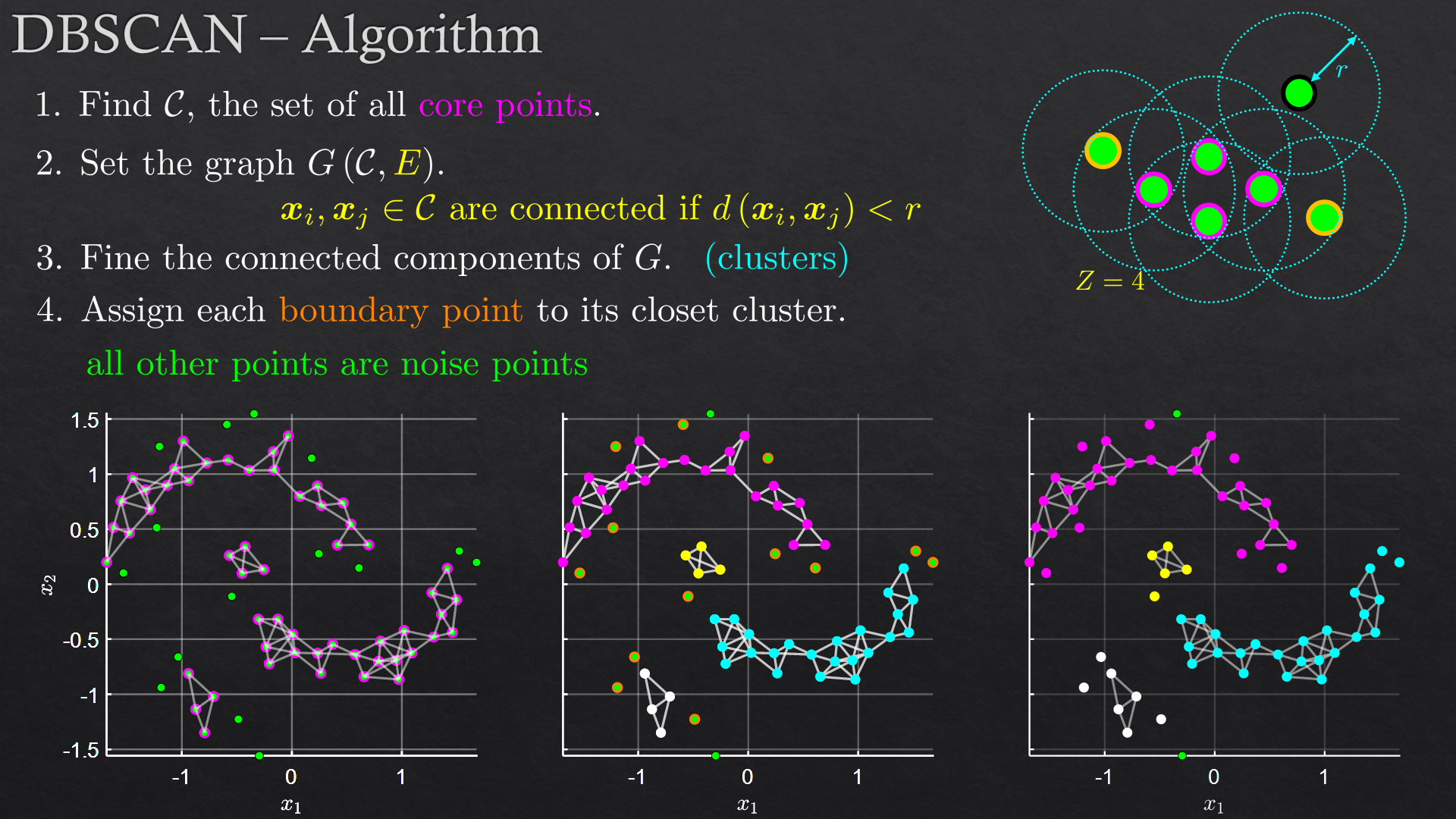

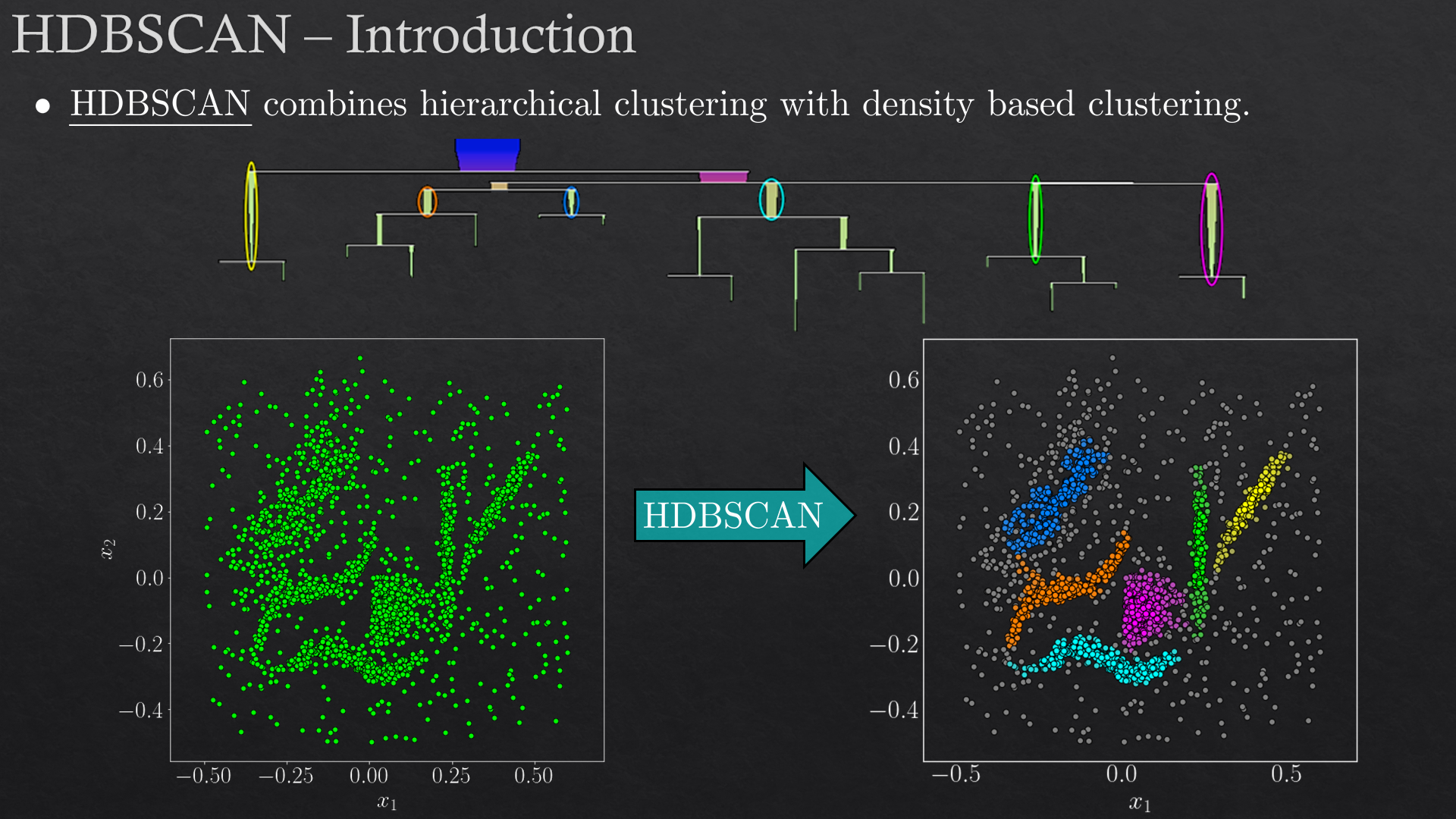

| Clustering II | DBSCAN, hierarchical clustering (Ward’s method), HDBSCAN | |

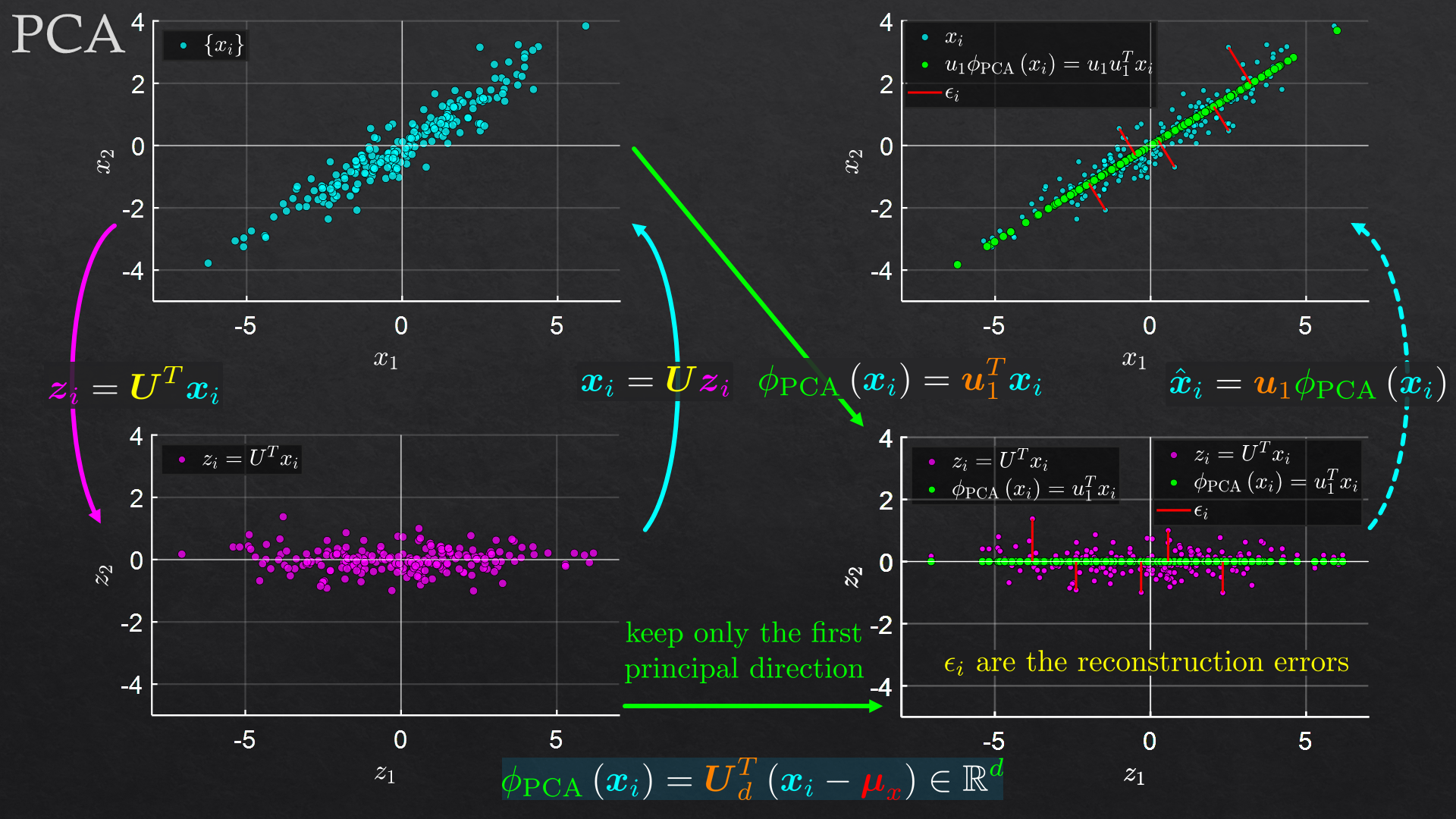

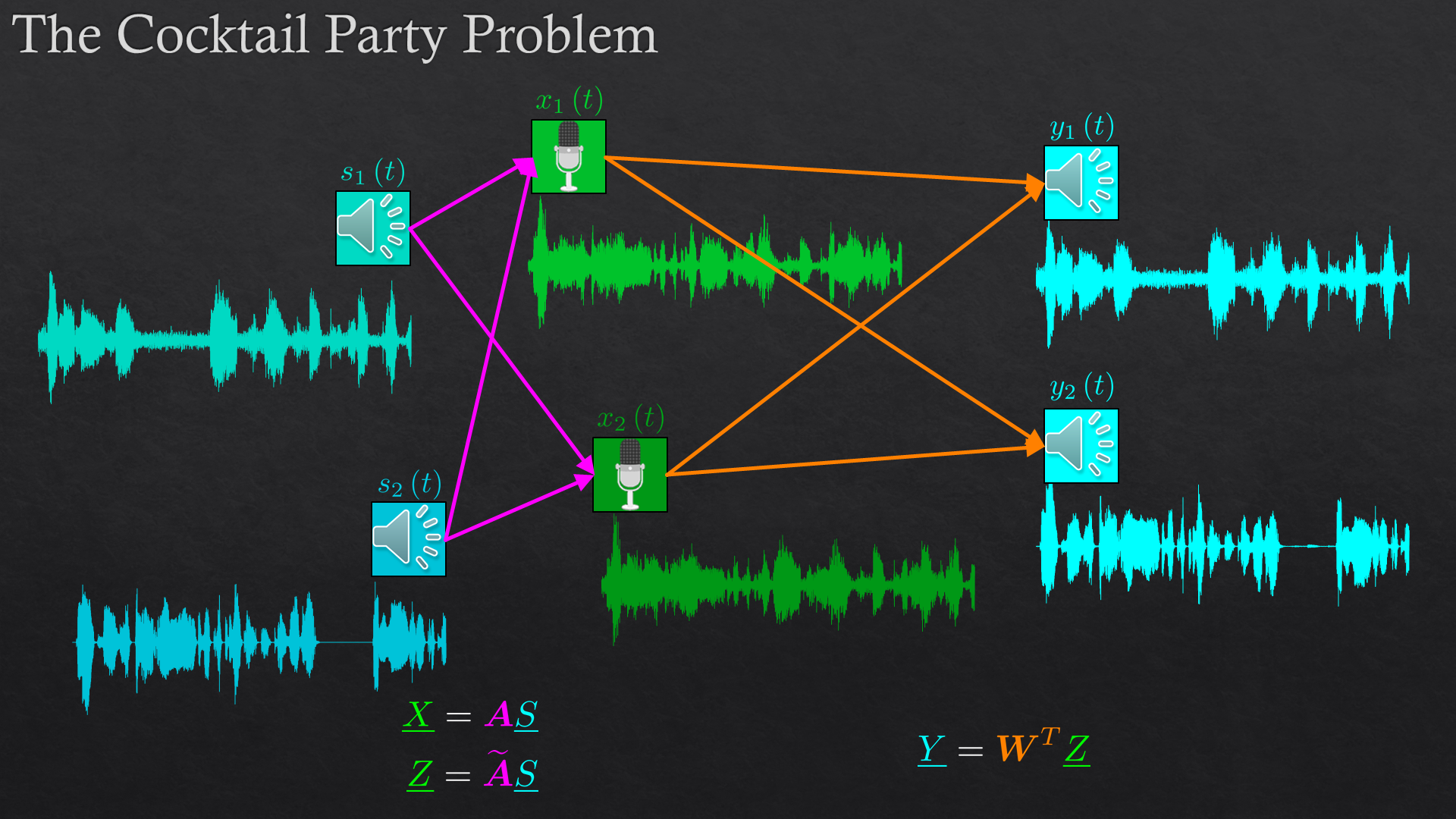

| Component Analysis | Dimensionality reduction, principal component analysis (PCA), independent component analysis (ICA) | |

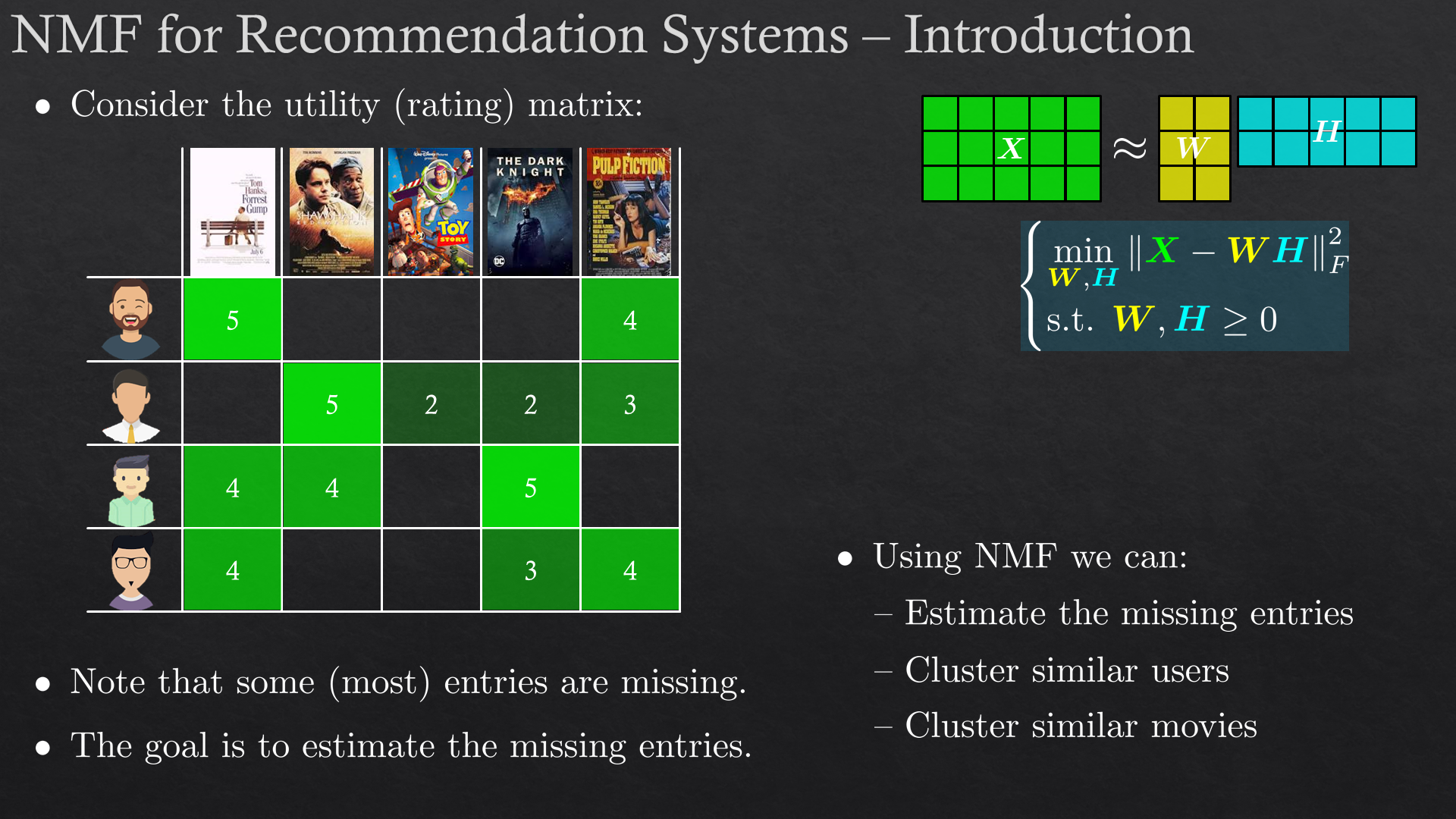

| Non Negative Matrix Factorization (NMF) | Recommendation system | |

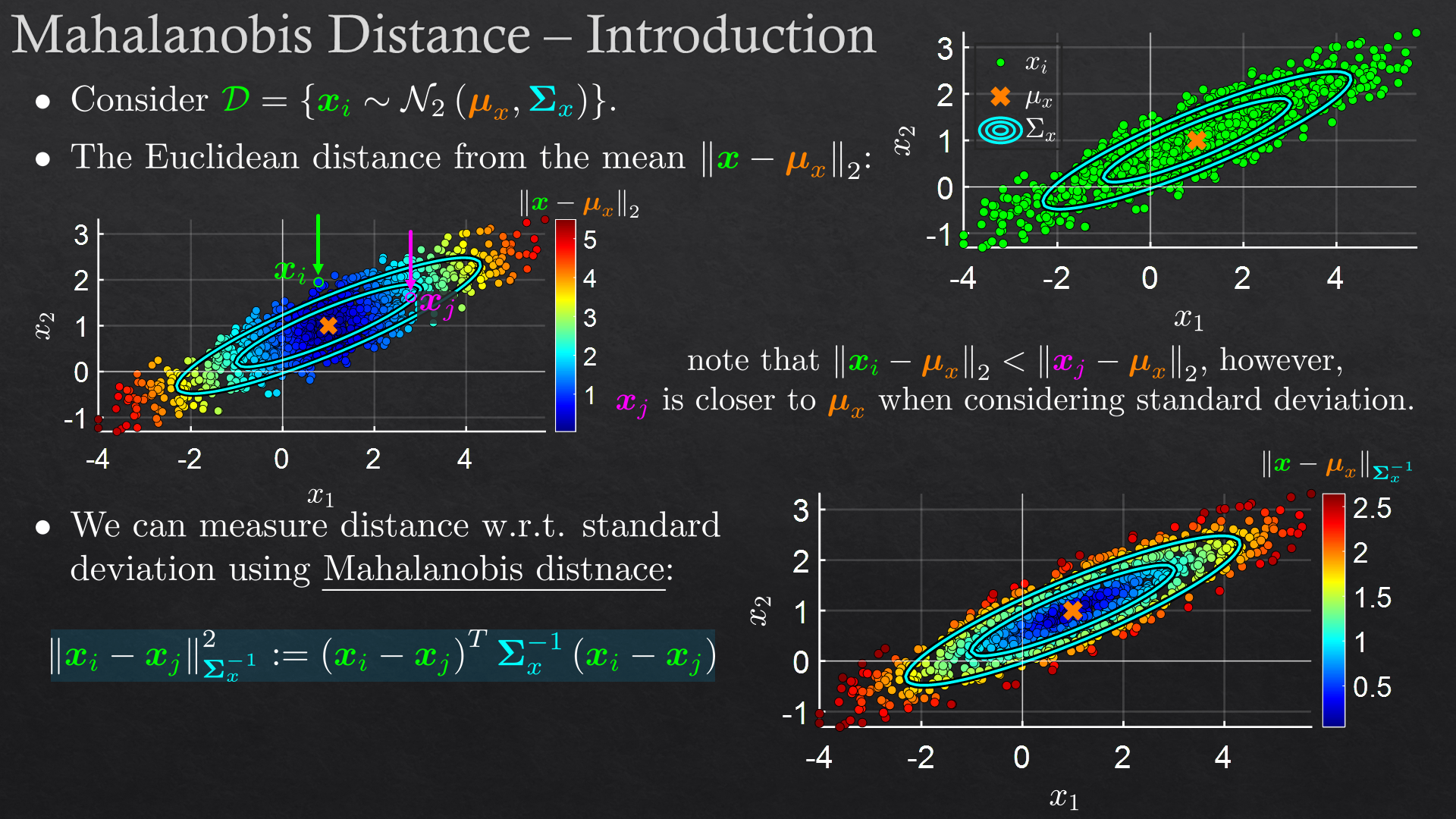

| 6 | Metric Learning | Mahalanobis distance, Fisher discriminant analysis (FDA), kernel FDA and local FDA |

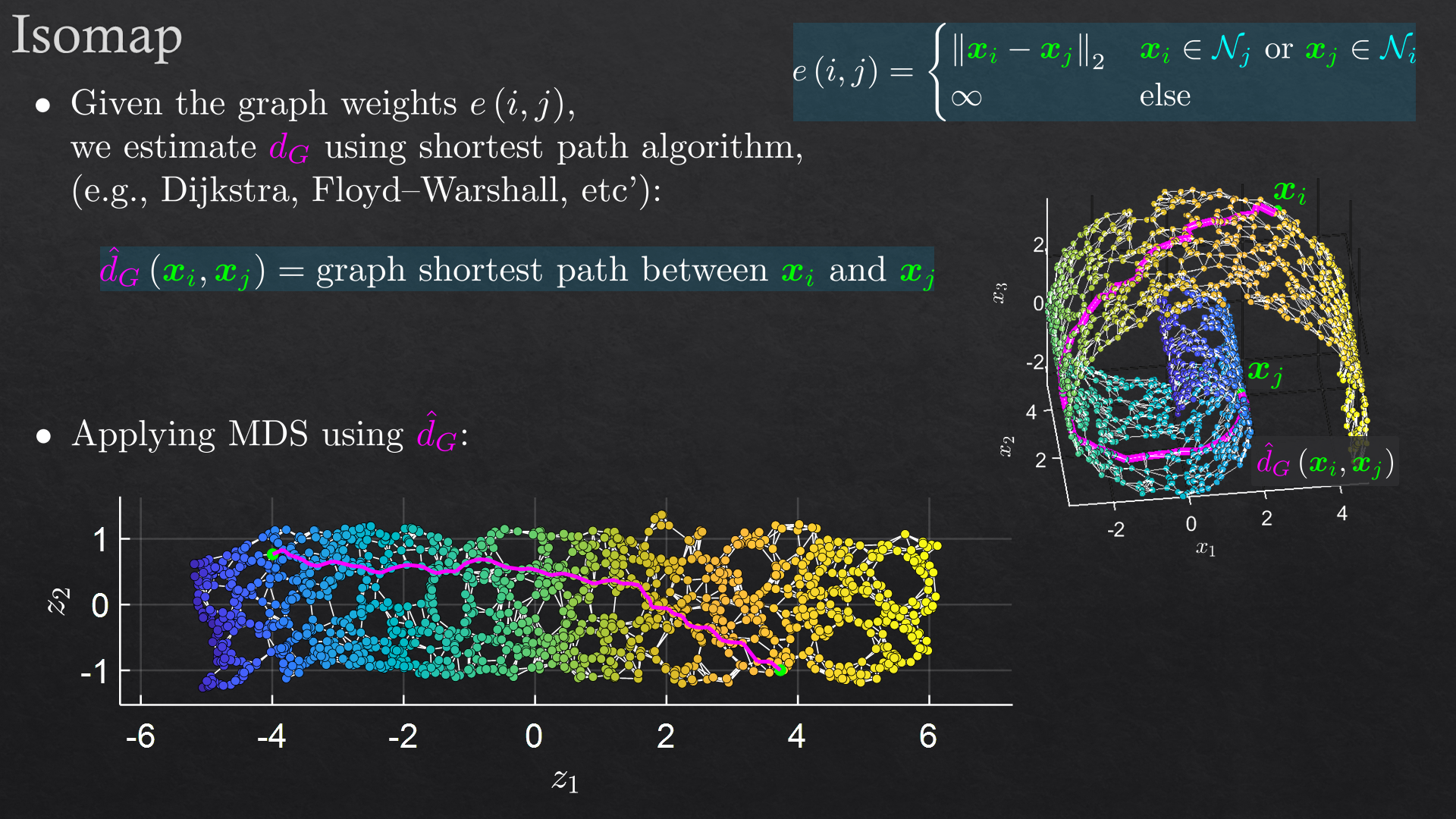

| Non Linear Dimensionality Reduction | Multidimensional scaling (MDS), Laplacian eigenmaps, Isomaps, T-SNE | |

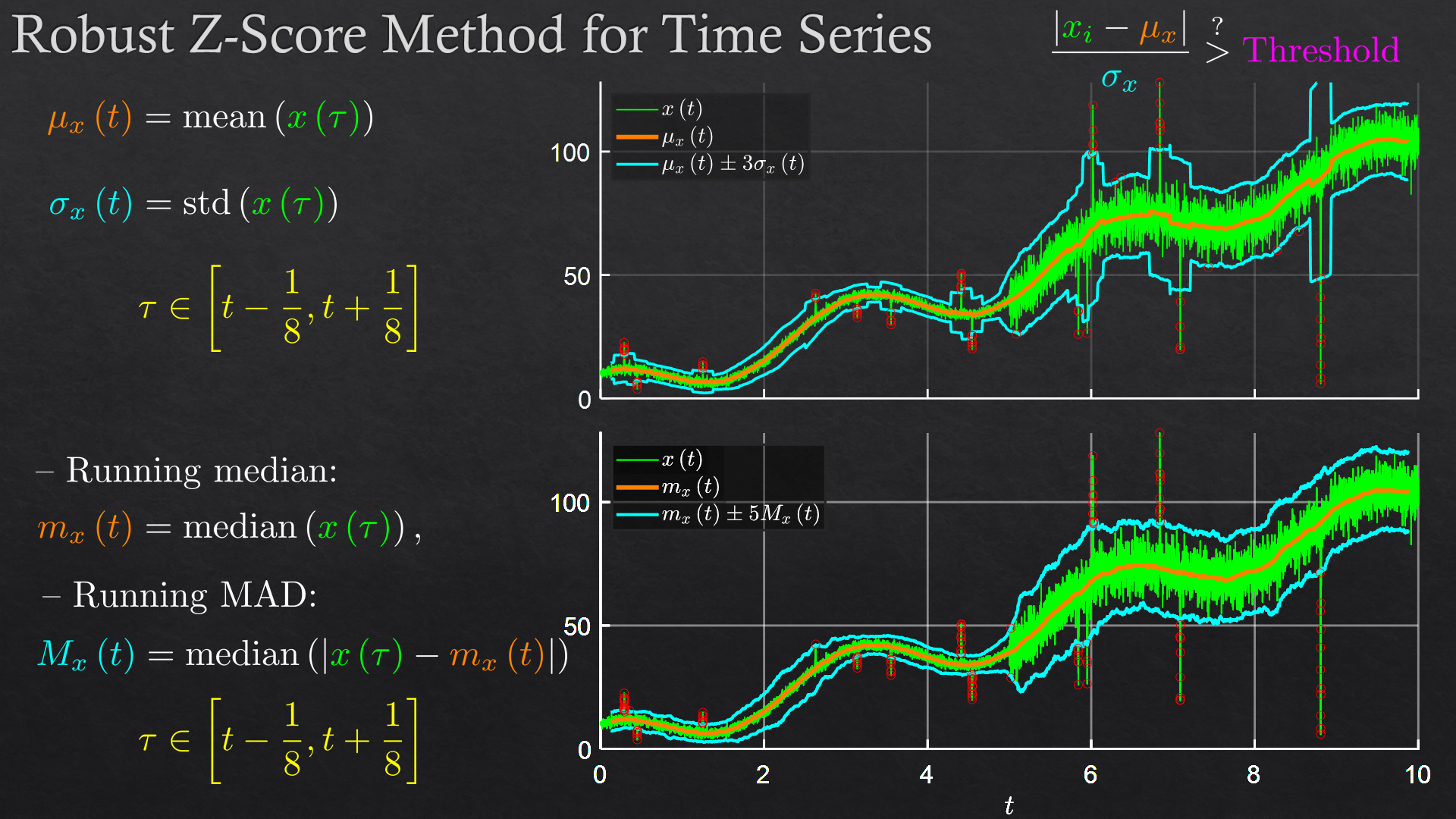

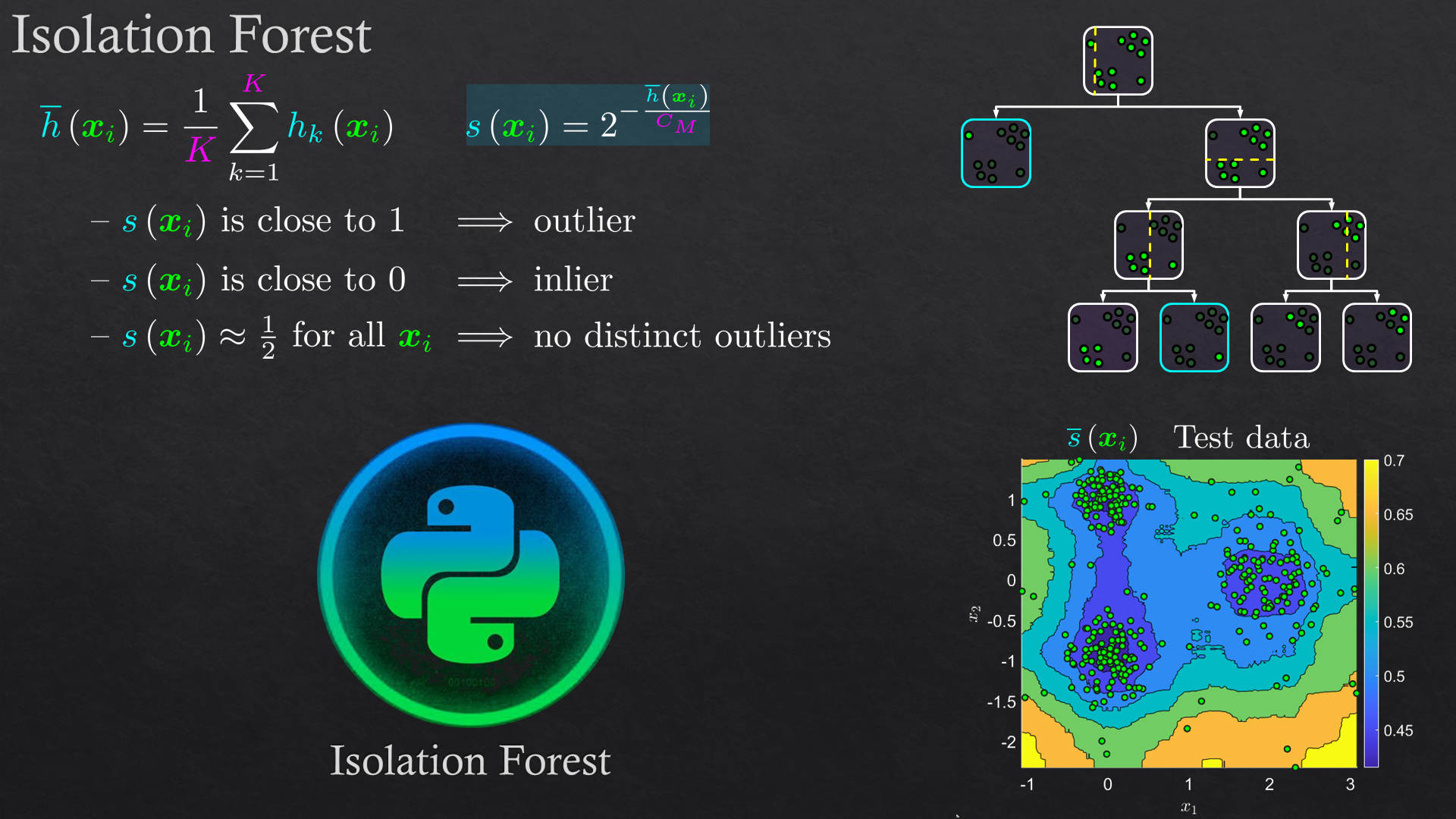

| Outlier Detection/Rejection | Robust Z-score method, local outlier factor (LOF), isolation forest | |

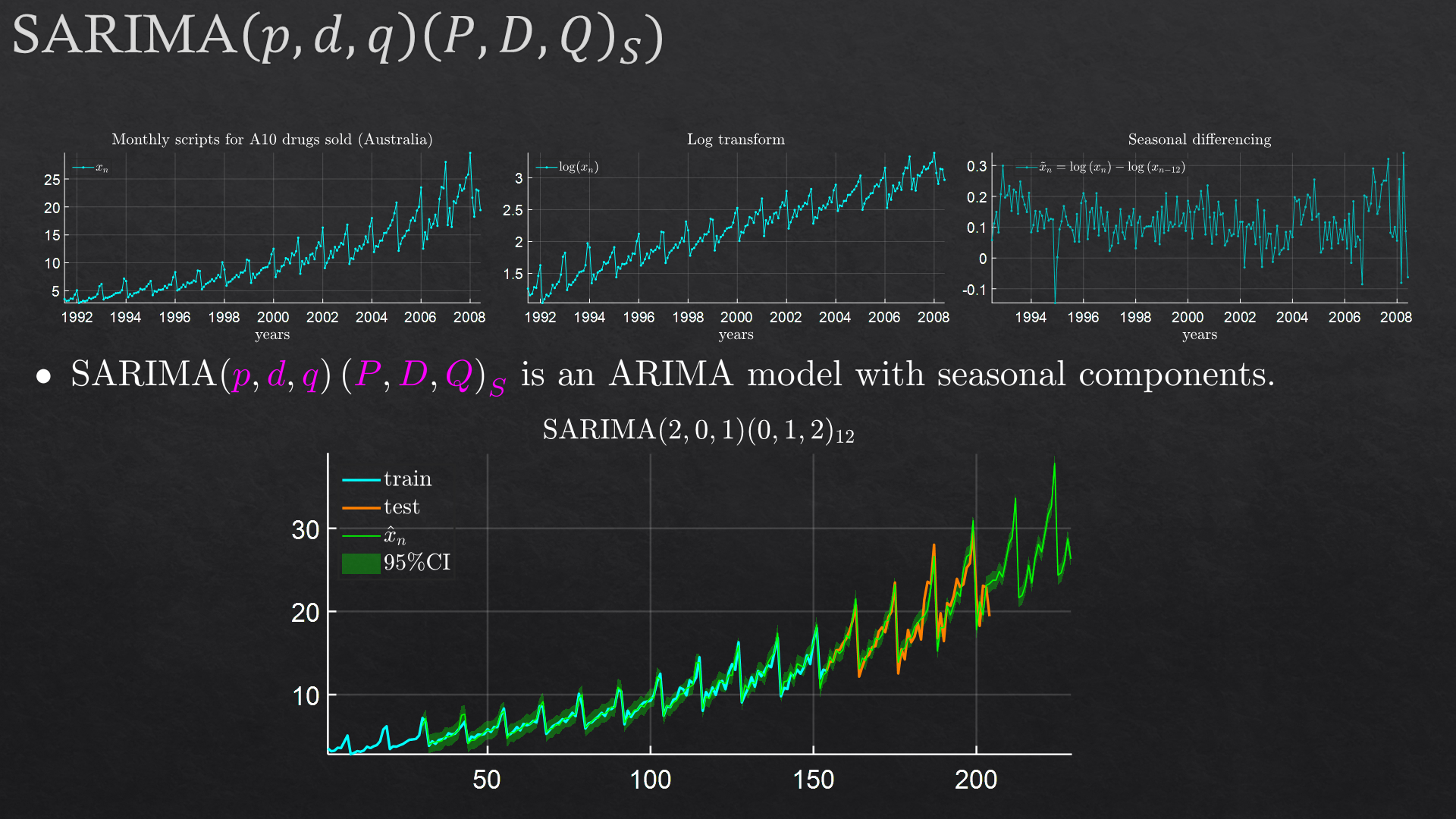

| Time Series Forecasting | Auto-regressive model (AR), ARMA model, ARIMA + SARIMA | |

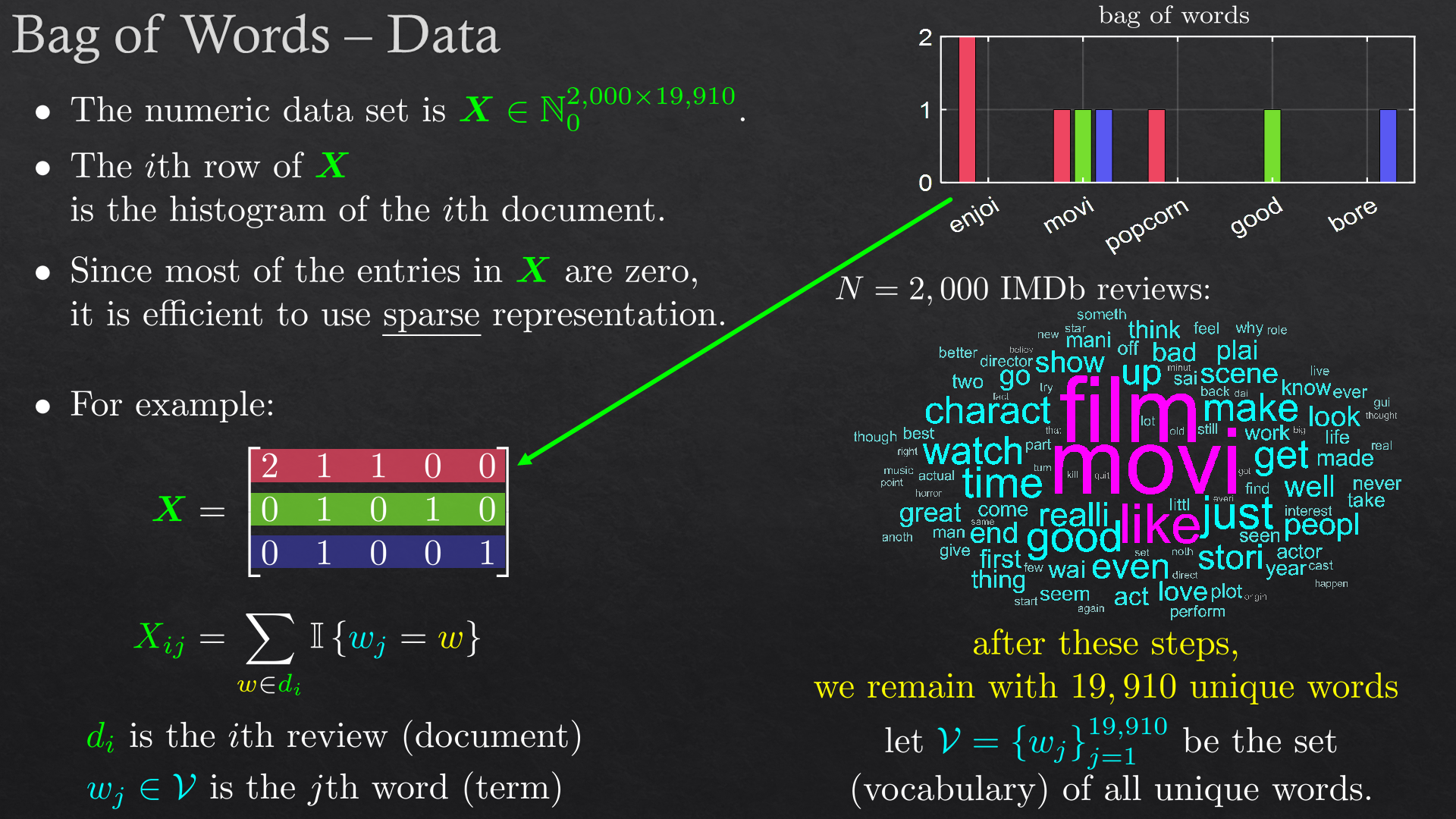

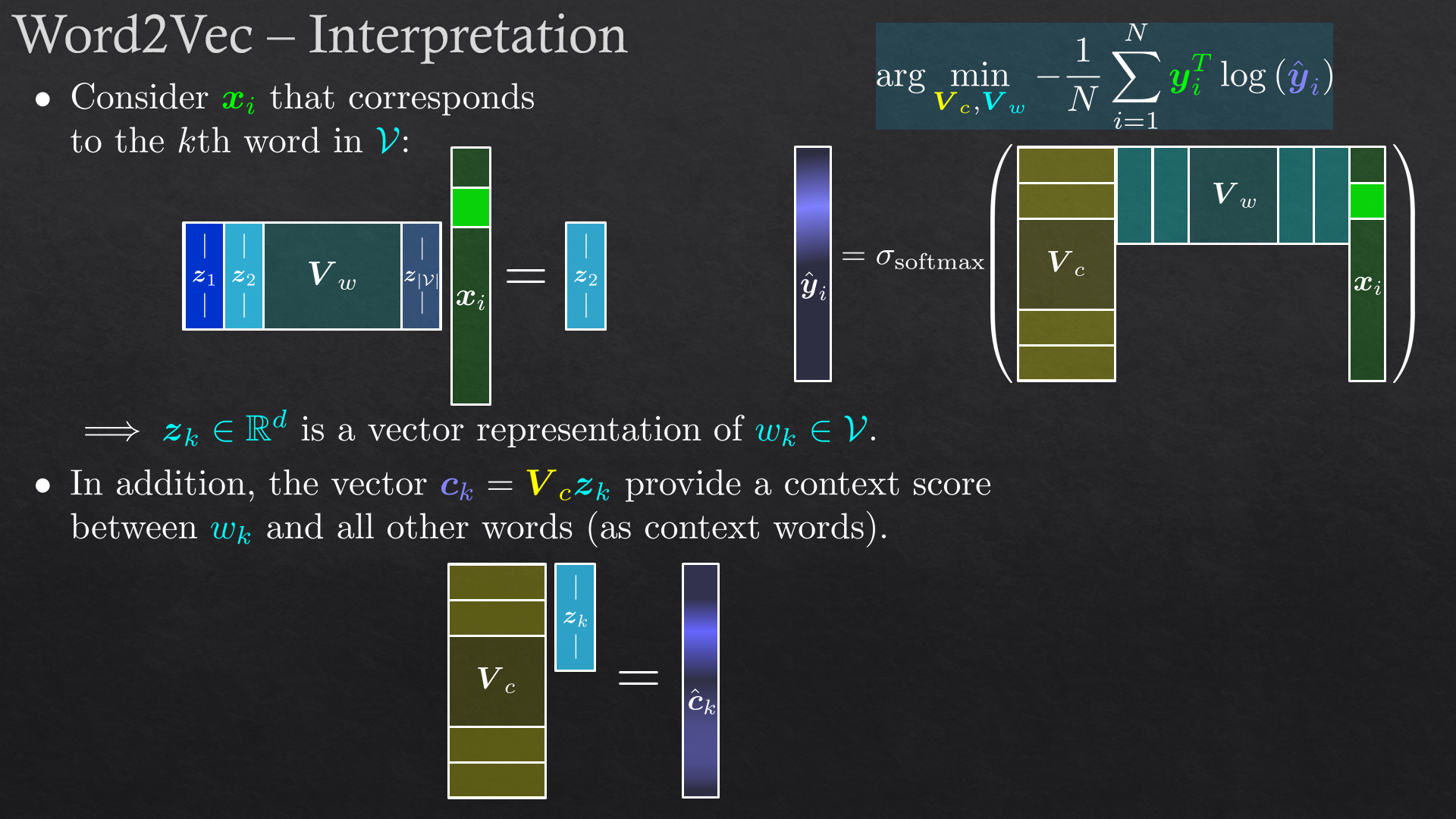

| Introduction to Natural Language Processing (NLP) | Bag of words, term frequency inverse document frequency (TF-IDF), word2vec | |

| Exercise 4 | Dimensionality reduction, feature extraction, metric selection | |

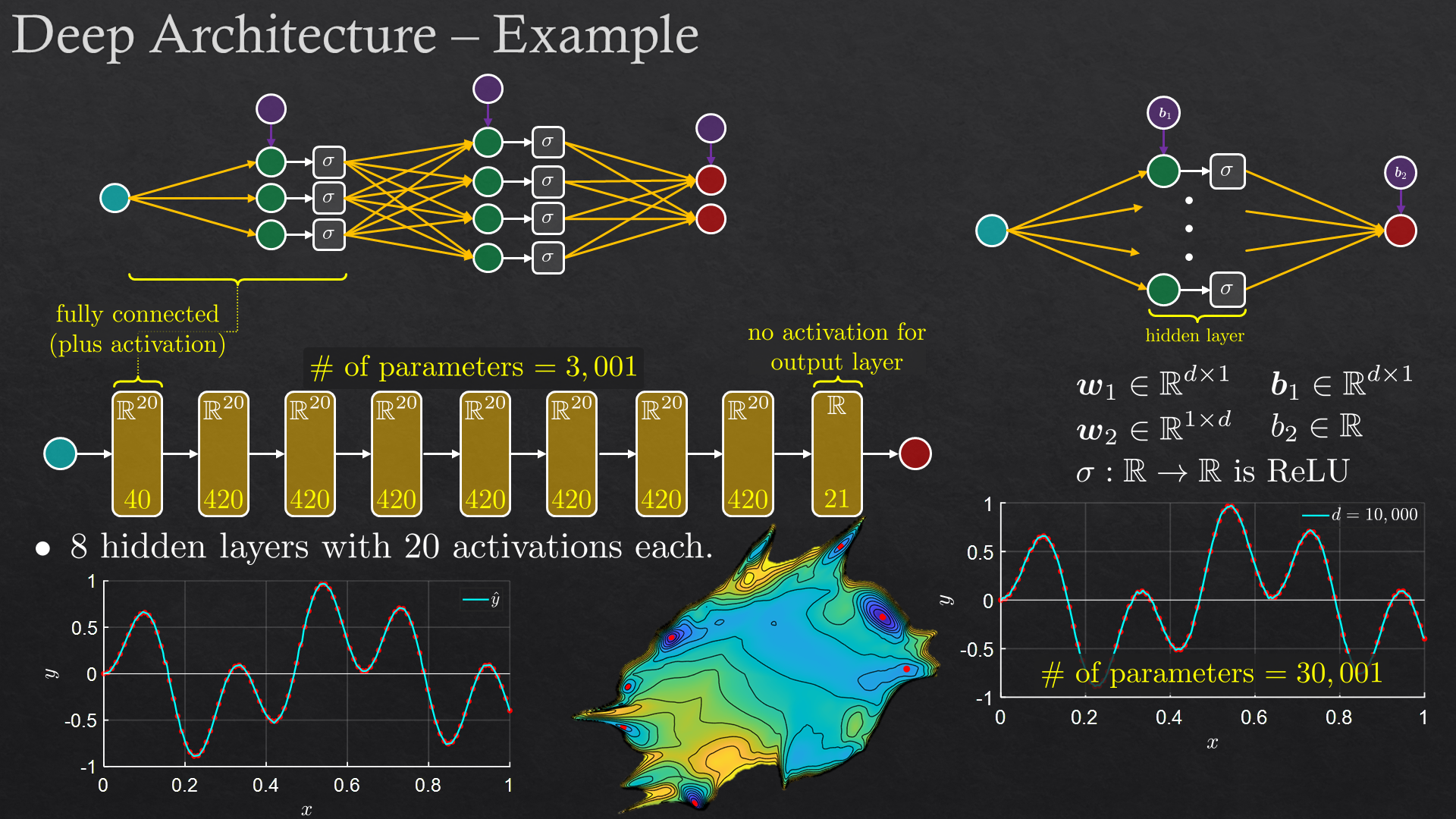

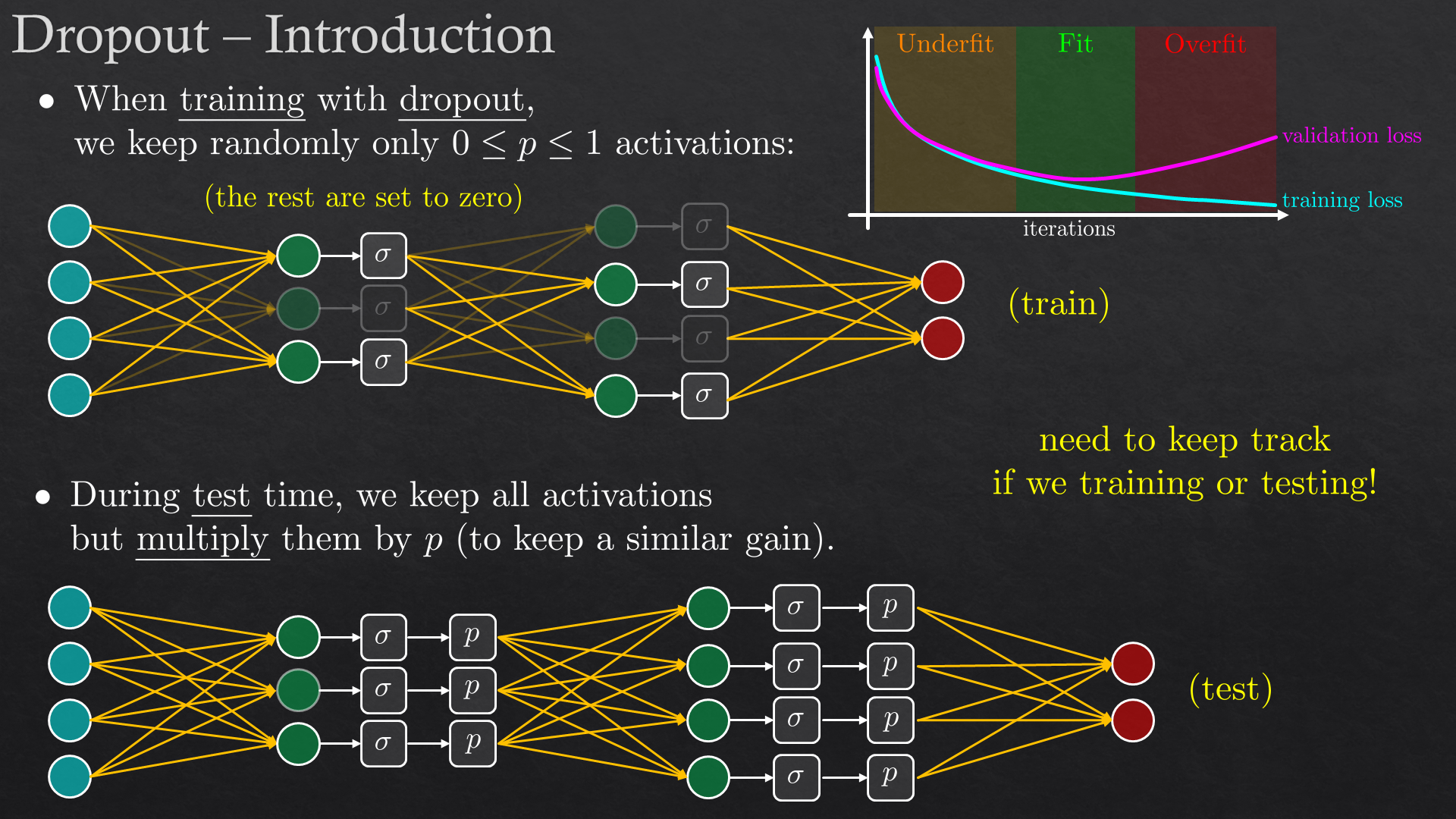

| 7 | Introduction to Deep Learning | Fully connected networks: regression, classification and activation |

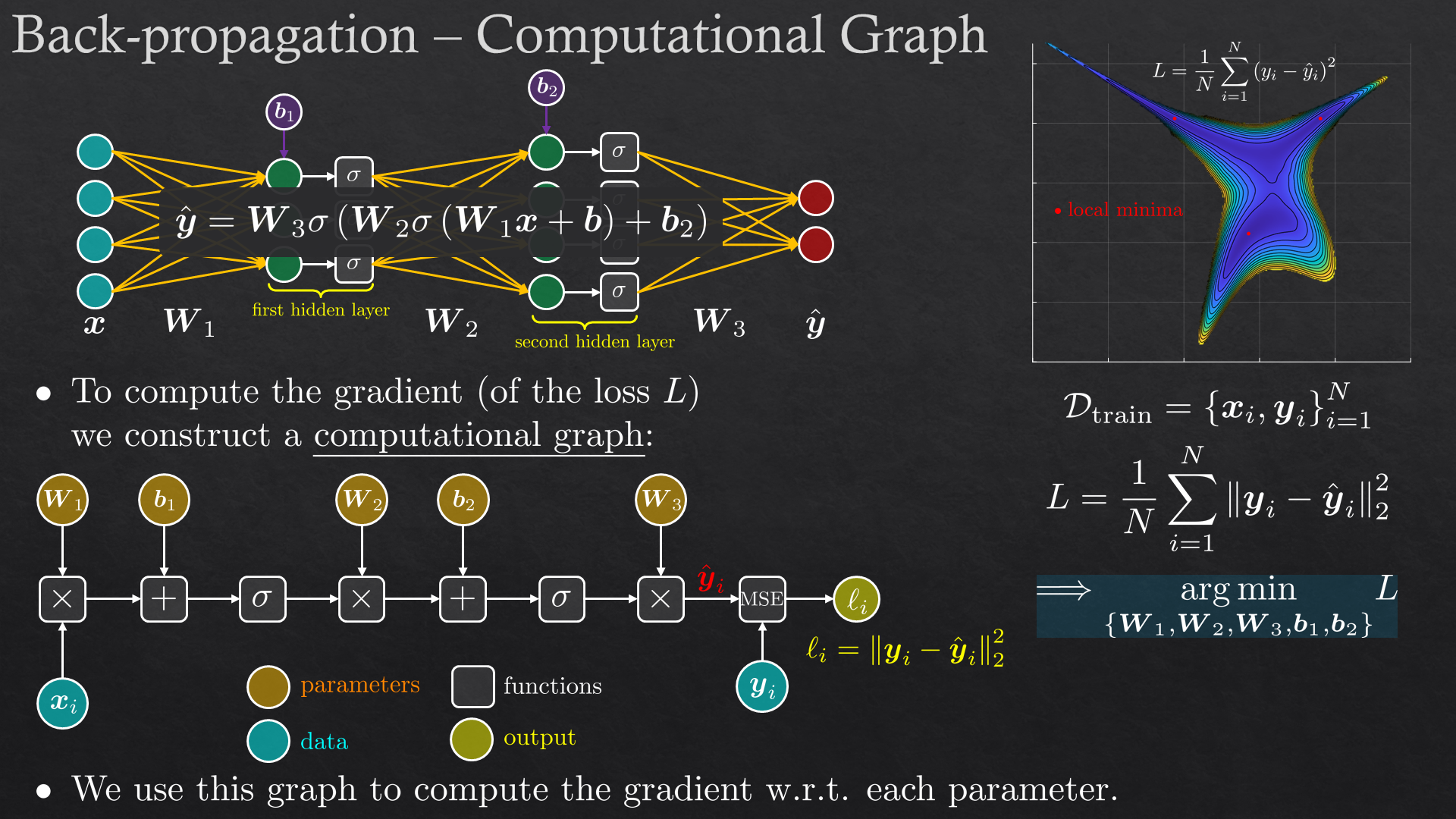

| Back-propagation | Forward pass, backward pass and the chain rule | |

| PyTorch | Tensors, autograd, modules, GPU, and tensorboard | |

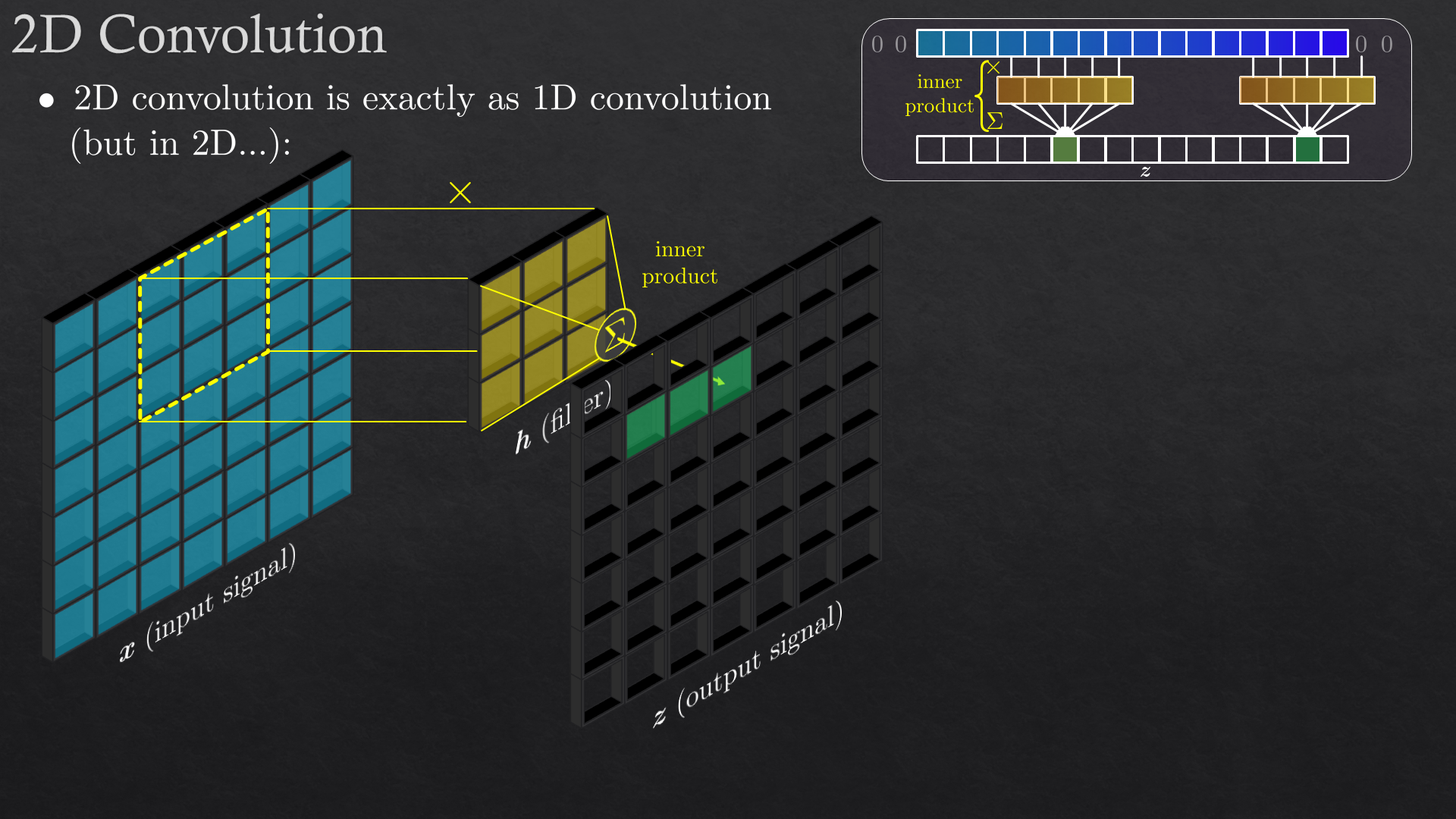

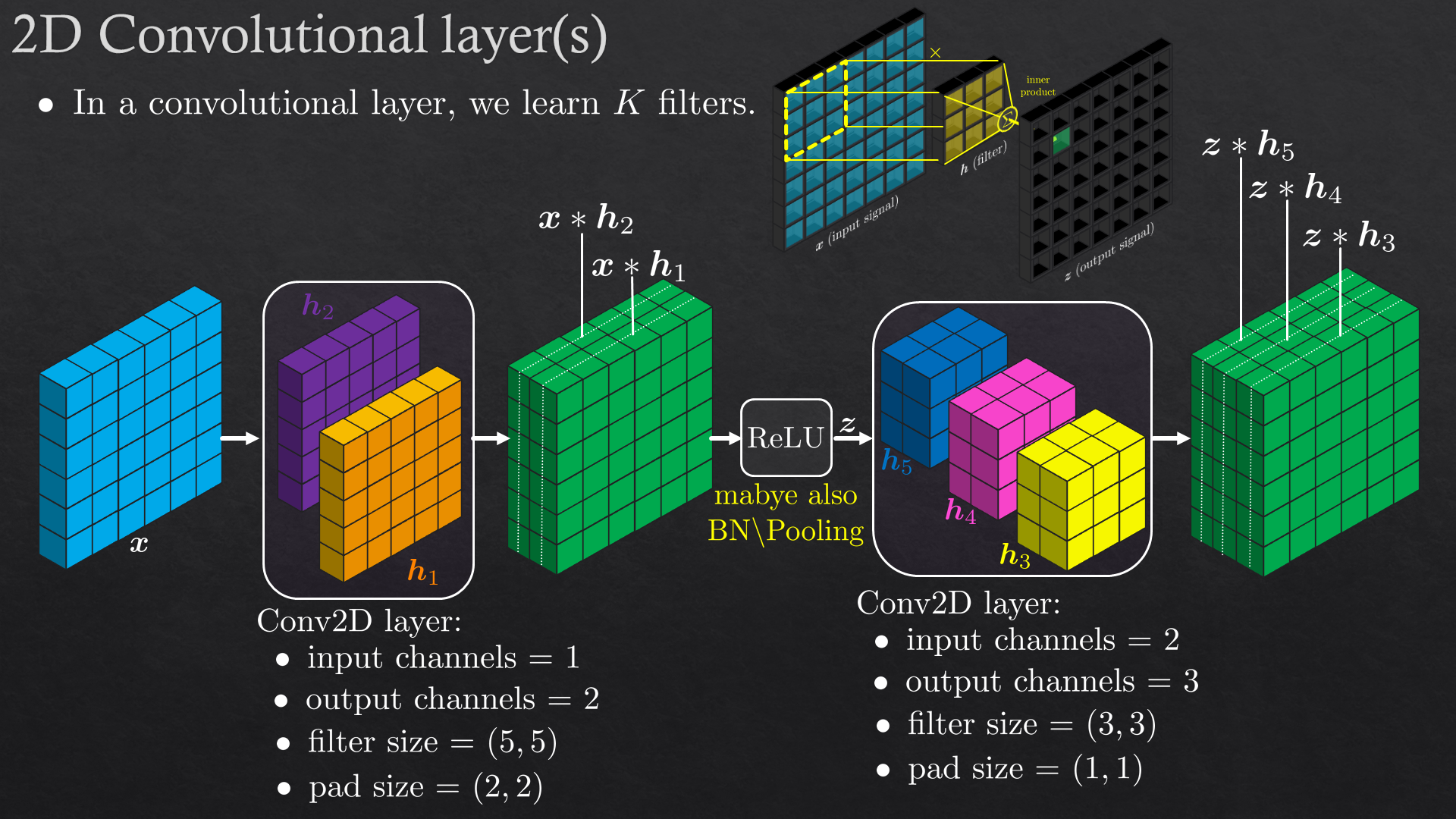

| Convolutional neural network (CNN) | Convolution, convolutional layers, pooling, batch normalization | |

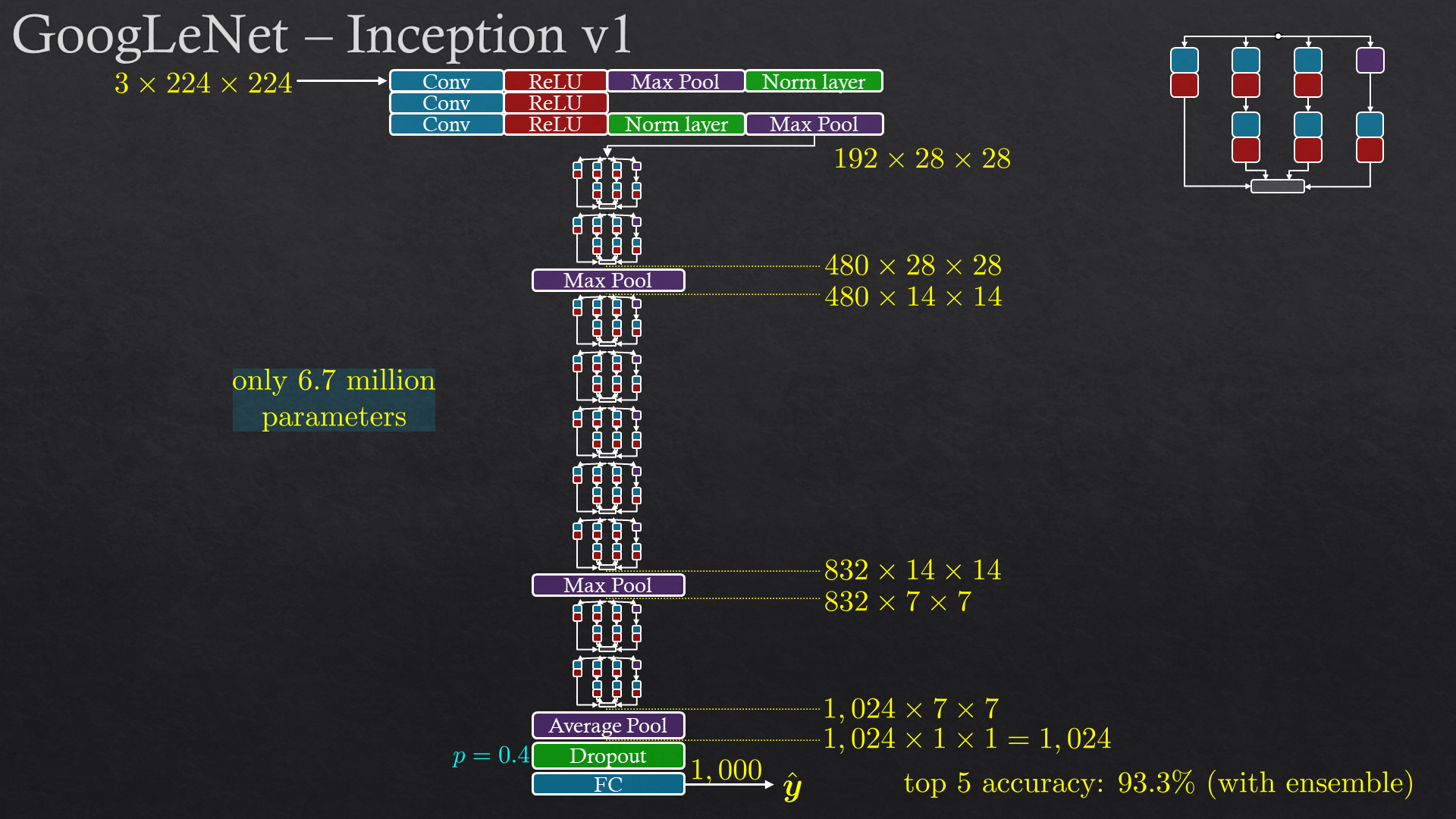

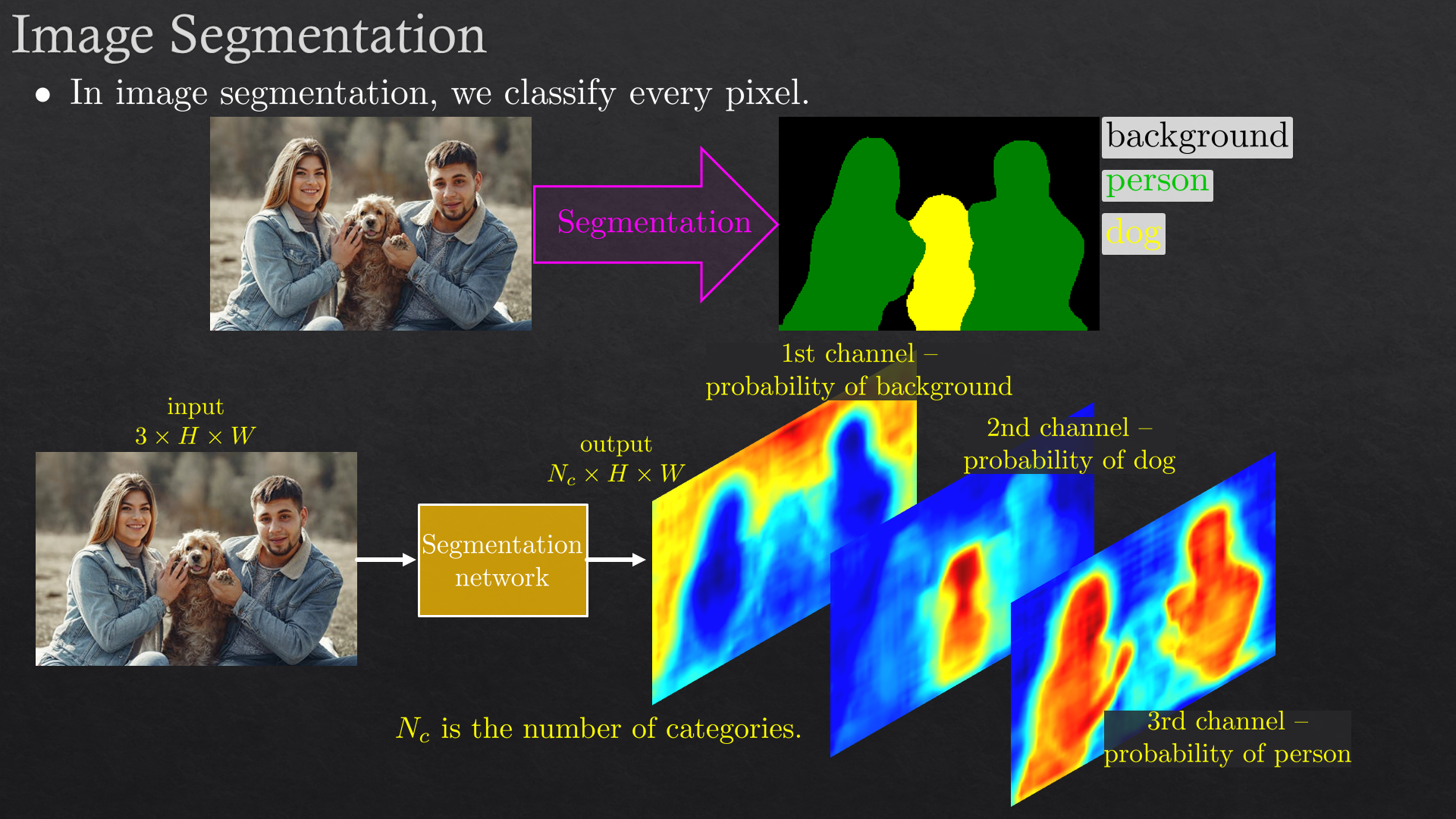

| CNN architectures | AlexNet, VGG, Inception, ResNet, transfer learning, introduction to object detection and segmentation | |

| Exercise 5 | Regression and classification (using deep learning) | |

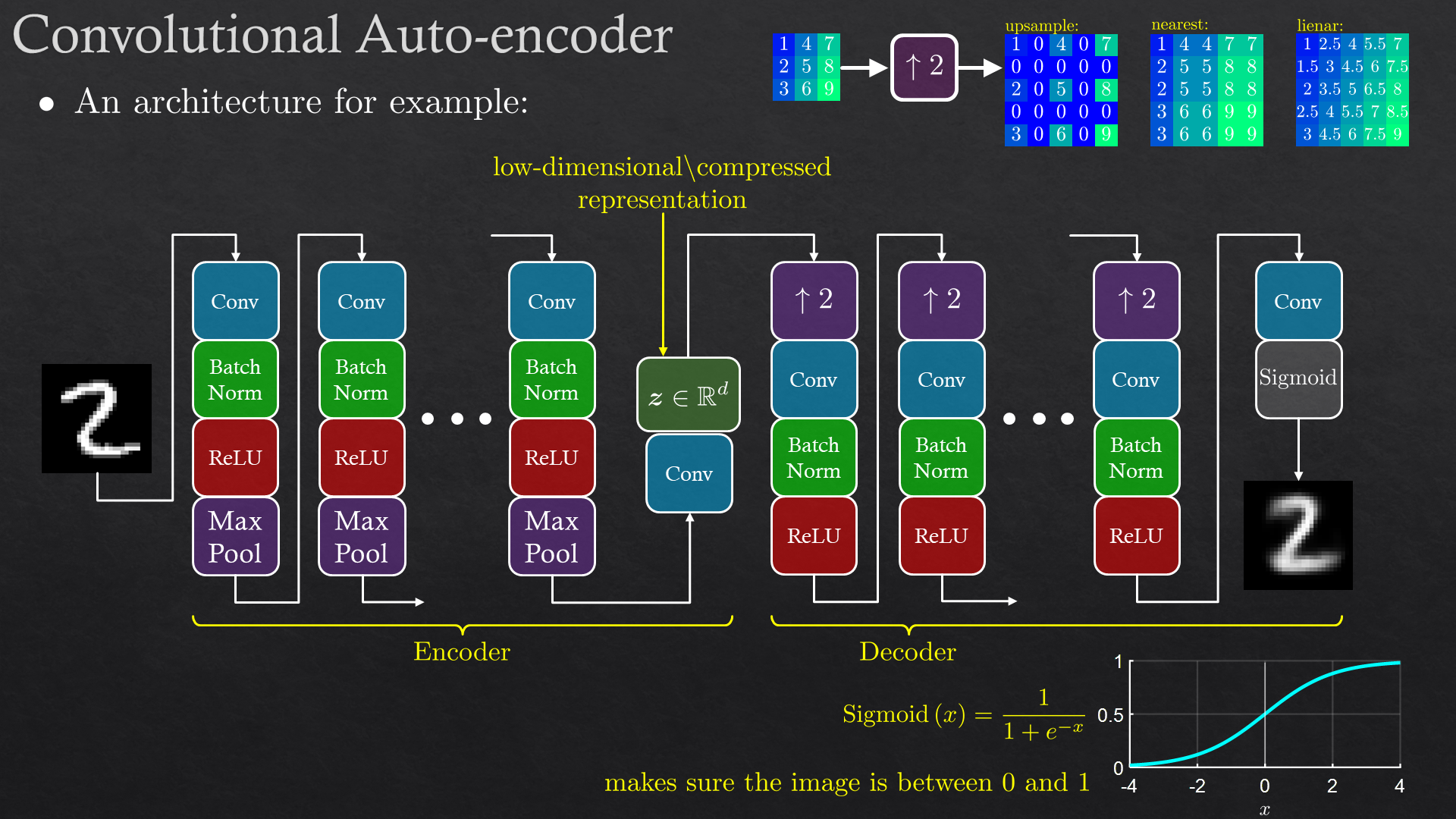

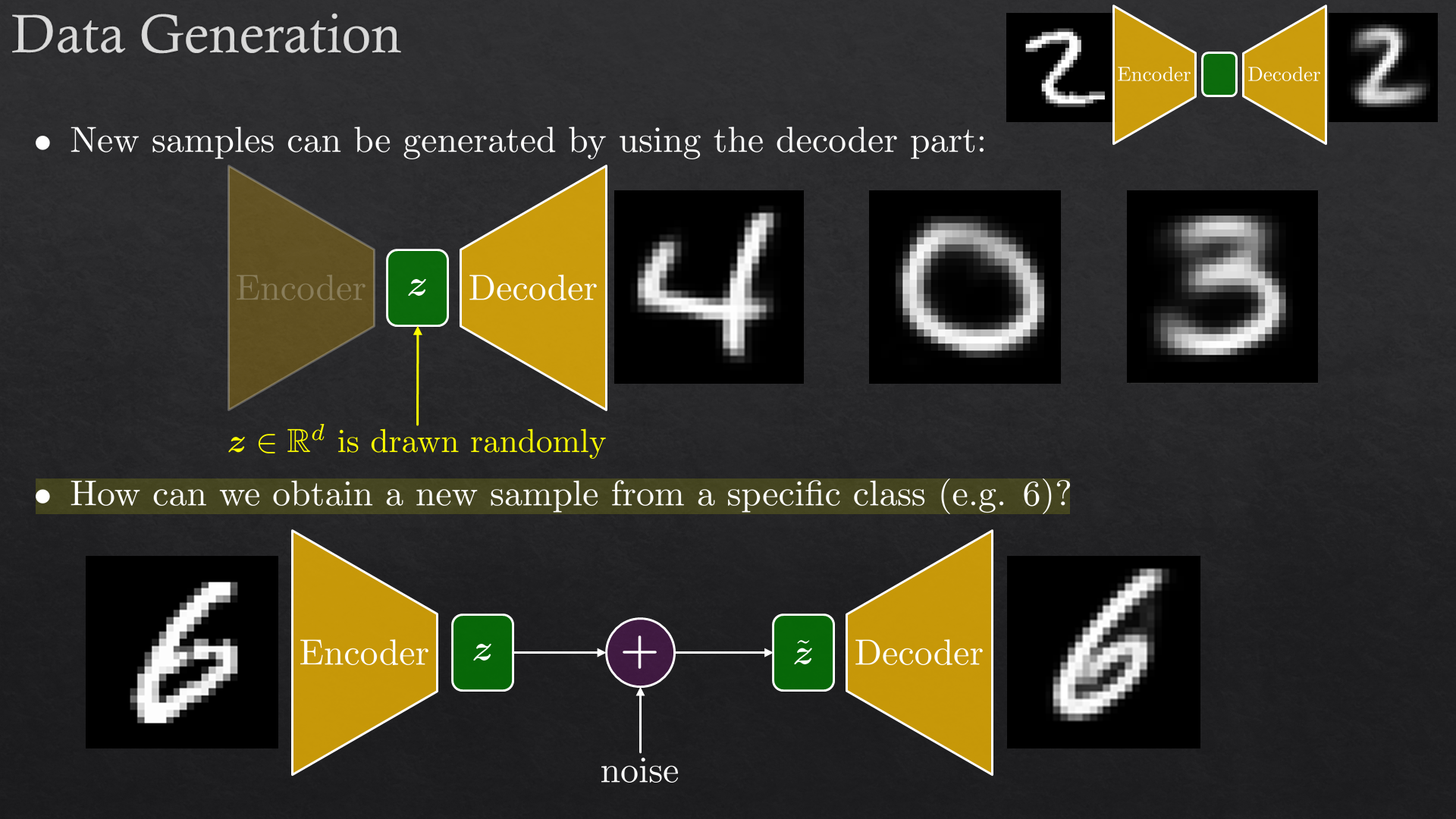

| 8 | Unsupervised (self-supervised) deep learning | Auto-encoders, introduction to GAN |

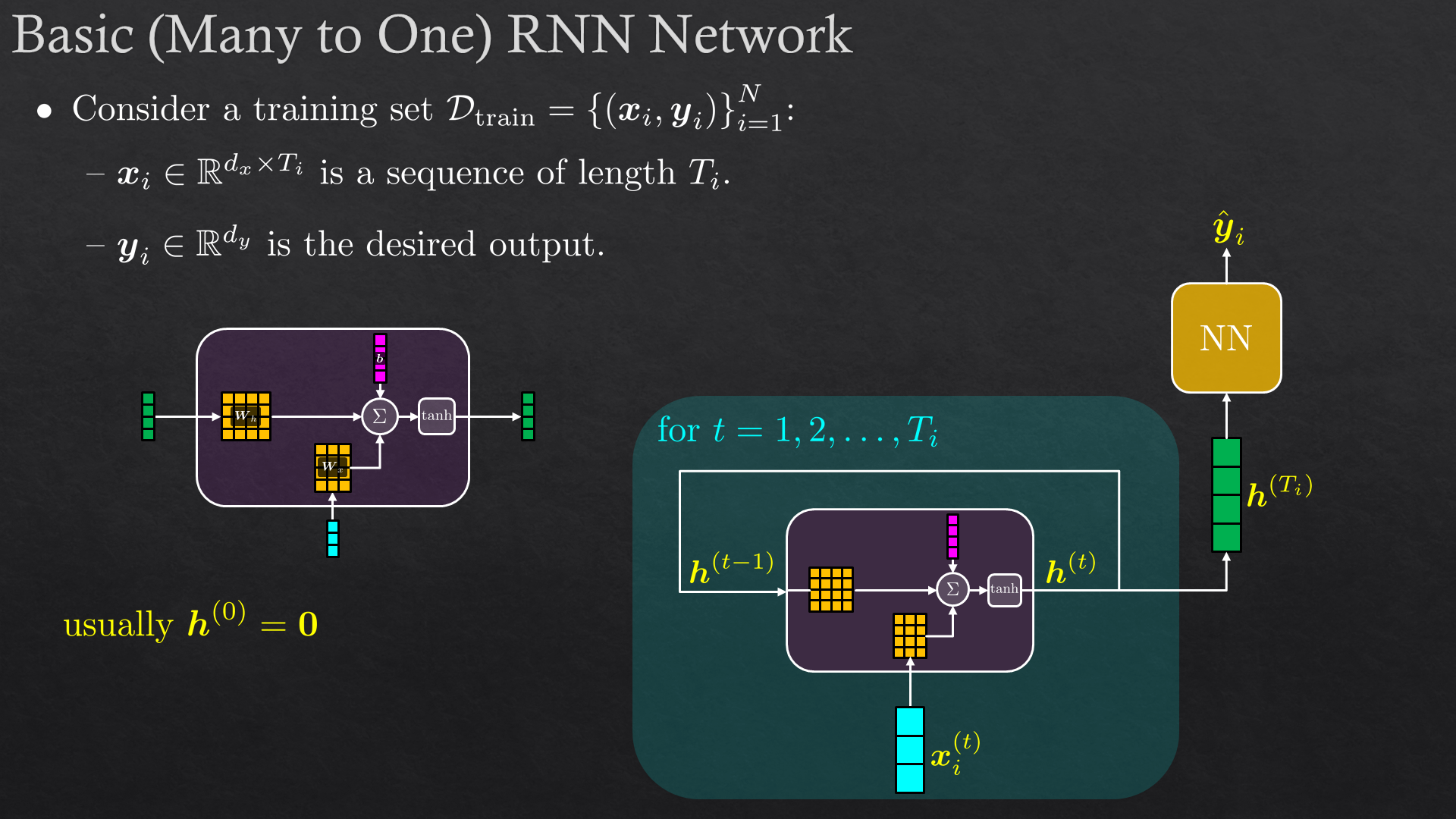

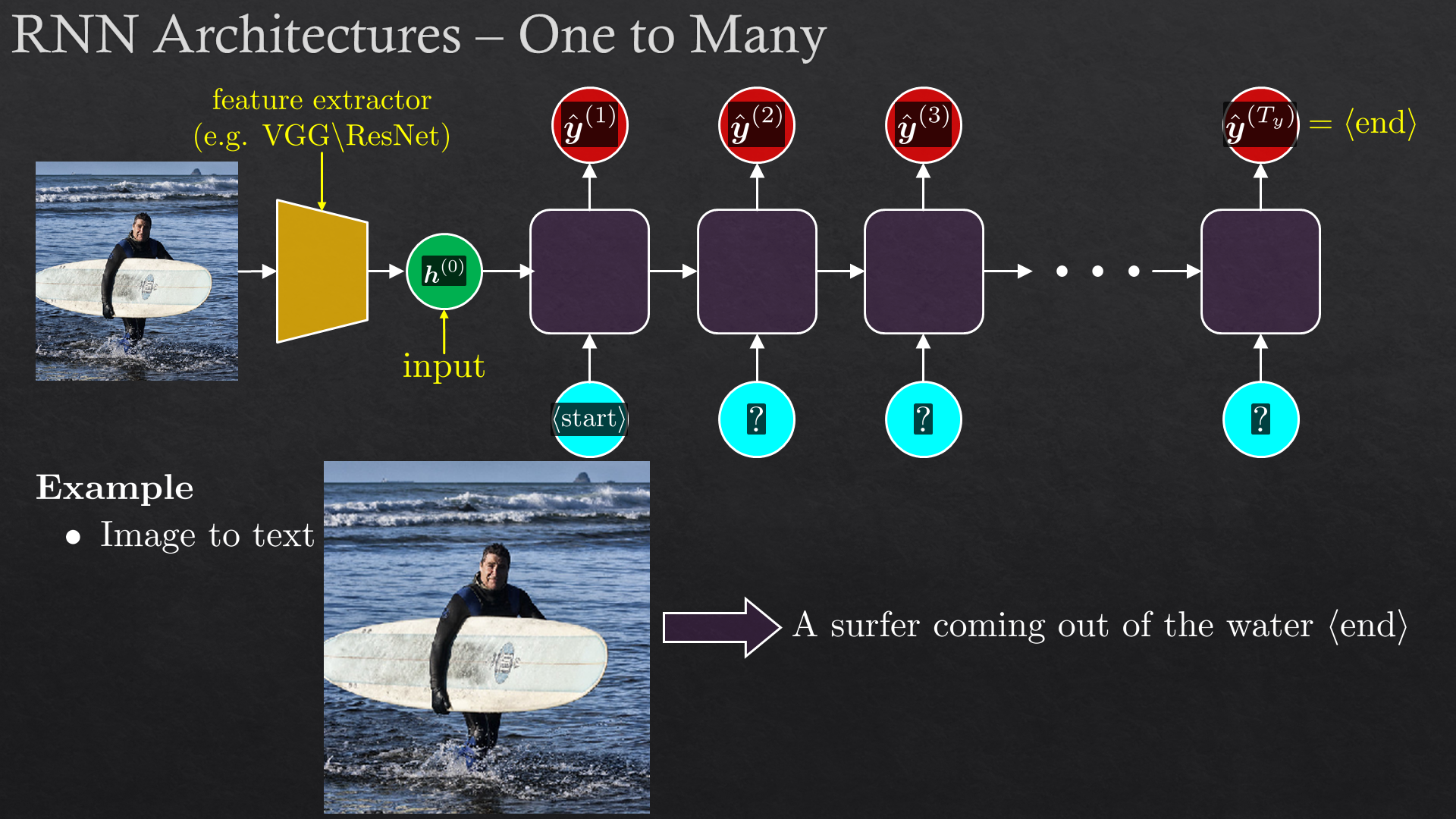

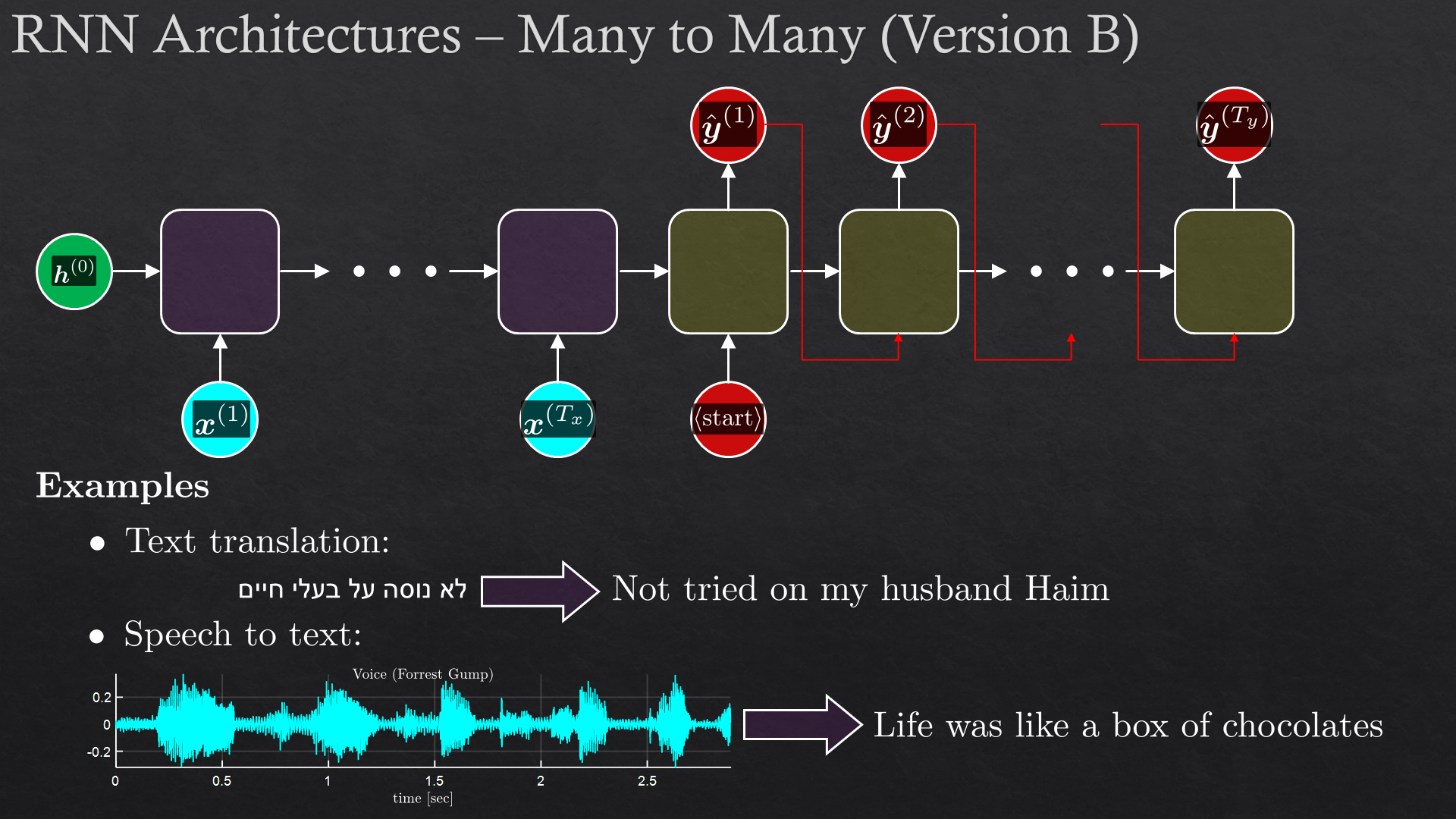

| Recurrent neural network (RNN) | Vanilla RNN, GRU, LSTM, RNN architectures, Sampling from RNN | |

| Exercise 6 | GANs and music generation with RNN |

In addition to the exercises in the syllabus, each topic includes several mini-exercises.

Prerequisites

- Linear algebra

- Basic calculus

- Basic probability theory

- Experience with a scientific language (Python, MATLAB, R, etc.)

- Recommended background: signal/image processing, computer vision, optimization

If any of the prerequisites are not met, we can offer a half-day sprint on linear algebra, calculus, probability and Python.